正在加载图片...

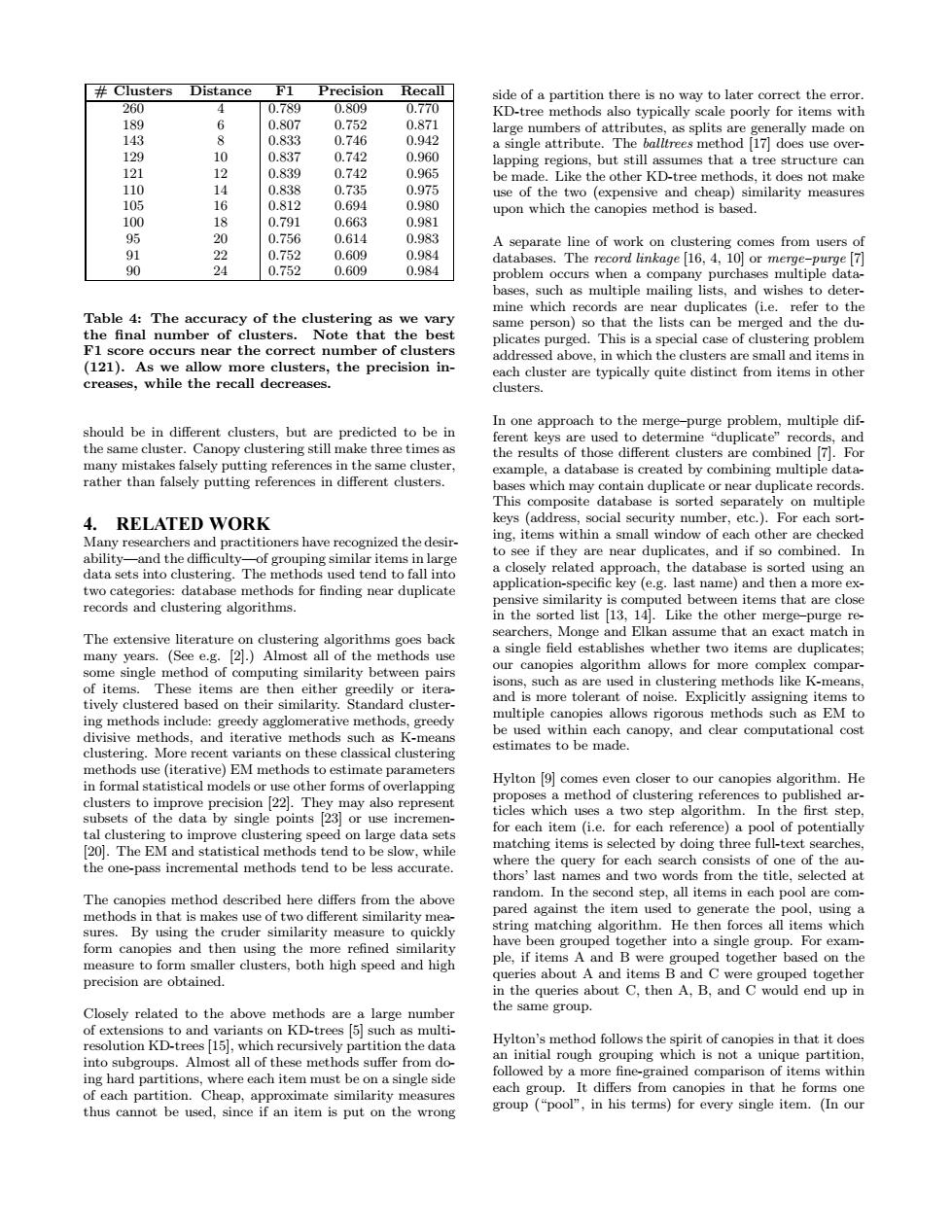

#Clusters Distance F1 Precision Recall side of a partition there is no way to later correct the error. 260 4 0.789 0.809 0.770 KD-tree methods also typically scale poorly for items with 189 6 0.807 0.752 0.871 large numbers of attributes,as splits are generally made on 143 8 0.833 0.746 0.942 a single attribute.The balltrees method [17]does use over- 129 10 0.837 0.742 0.960 lapping regions,but still assumes that a tree structure can 121 12 0.839 0.742 0.965 be made.Like the other KD-tree methods,it does not make 110 14 0.838 0.735 0.975 use of the two (expensive and cheap)similarity measures 105 16 0.812 0.694 0.980 upon which the canopies method is based. 100 18 0.791 0.663 0.981 95 20 0.756 0.614 0.983 A separate line of work on clustering comes from users of 91 22 0.752 0.609 0.984 databases.The record linkage [16,4,10]or merge-purge [7] 90 24 0.752 0.609 0.984 problem occurs when a company purchases multiple data- bases,such as multiple mailing lists,and wishes to deter- mine which records are near duplicates (i.e.refer to the Table 4:The accuracy of the clustering as we vary same person)so that the lists can be merged and the du- the final number of clusters.Note that the best plicates purged.This is a special case of clustering problem F1 score occurs near the correct number of clusters addressed above,in which the clusters are small and items in (121).As we allow more clusters,the precision in- each cluster are typically quite distinct from items in other creases,while the recall decreases. clusters. In one approach to the merge-purge problem,multiple dif- should be in different clusters,but are predicted to be in ferent keys are used to determine "duplicate"records.and the same cluster.Canopy clustering still make three times as the results of those different clusters are combined [7].For many mistakes falsely putting references in the same cluster. example,a database is created by combining multiple data- rather than falsely putting references in different clusters. bases which may contain duplicate or near duplicate records This composite database is sorted separately on multiple 4.RELATED WORK keys (address,social security number,etc.).For each sort- Many researchers and practitioners have recognized the desir- ing,items within a small window of each other are checked ability-and the difficulty-of grouping similar items in large to see if they are near duplicates,and if so combined.In data sets into clustering.The methods used tend to fall into a closely related approach,the database is sorted using an two categories:database methods for finding near duplicate application-specific key (e.g.last name)and then a more ex- records and clustering algorithms. pensive similarity is computed between items that are close in the sorted list [13,14.Like the other merge-purge re- The extensive literature on clustering algorithms goes back searchers,Monge and Elkan assume that an exact match in many years.(See e.g.[2].)Almost all of the methods use a single field establishes whether two items are duplicates: some single method of computing similarity between pairs our canopies algorithm allows for more complex compar- of items.These items are then either greedily or itera- isons,such as are used in clustering methods like K-means, tively clustered based on their similarity.Standard cluster- and is more tolerant of noise.Explicitly assigning items to multiple canopies allows rigorous methods such as EM to ing methods include:greedy agglomerative methods,greedy divisive methods,and iterative methods such as K-means be used within each canopy,and clear computational cost estimates to be made. clustering.More recent variants on these classical clustering methods use (iterative)EM methods to estimate parameters Hylton [9 comes even closer to our canopies algorithm.He in formal statistical models or use other forms of overlapping clusters to improve precision [22].They may also represent proposes a method of clustering references to published ar- subsets of the data by single points [23]or use incremen- ticles which uses a two step algorithm.In the first step. tal clustering to improve clustering speed on large data sets for each item (i.e.for each reference)a pool of potentially 20.The EM and statistical methods tend to be slow,while matching items is selected by doing three full-text searches the one-pass incremental methods tend to be less accurate. where the query for each search consists of one of the au- thors'last names and two words from the title,selected at The canopies method described here differs from the above random.In the second step,all items in each pool are com- methods in that is makes use of two different similarity mea- pared against the item used to generate the pool,using a sures. By using the cruder similarity measure to quickly string matching algorithm.He then forces all items which form canopies and then using the more refined similarity have been grouped together into a single group.For exam- measure to form smaller clusters,both high speed and high ple,if items A and B were grouped together based on the precision are obtained. queries about A and items B and C were grouped together in the queries about C.then A.B.and C would end up in Closely related to the above methods are a large number the same group. of extensions to and variants on KD-trees [5]such as multi- resolution KD-trees 15,which recursively partition the data Hylton's method follows the spirit of canopies in that it does into subgroups.Almost all of these methods suffer from do- an initial rough grouping which is not a unique partition ing hard partitions,where each item must be on a single side followed by a more fine-grained comparison of items within of each partition.Cheap,approximate similarity measures each group.It differs from canopies in that he forms one thus cannot be used,since if an item is put on the wrong group (pool",in his terms)for every single item.(In our# Clusters Distance F1 Precision Recall 260 4 0.789 0.809 0.770 189 6 0.807 0.752 0.871 143 8 0.833 0.746 0.942 129 10 0.837 0.742 0.960 121 12 0.839 0.742 0.965 110 14 0.838 0.735 0.975 105 16 0.812 0.694 0.980 100 18 0.791 0.663 0.981 95 20 0.756 0.614 0.983 91 22 0.752 0.609 0.984 90 24 0.752 0.609 0.984 Table 4: The accuracy of the clustering as we vary the final number of clusters. Note that the best F1 score occurs near the correct number of clusters (121). As we allow more clusters, the precision increases, while the recall decreases. should be in different clusters, but are predicted to be in the same cluster. Canopy clustering still make three times as many mistakes falsely putting references in the same cluster, rather than falsely putting references in different clusters. 4. RELATED WORK Many researchers and practitioners have recognized the desirability—and the difficulty—of grouping similar items in large data sets into clustering. The methods used tend to fall into two categories: database methods for finding near duplicate records and clustering algorithms. The extensive literature on clustering algorithms goes back many years. (See e.g. [2].) Almost all of the methods use some single method of computing similarity between pairs of items. These items are then either greedily or iteratively clustered based on their similarity. Standard clustering methods include: greedy agglomerative methods, greedy divisive methods, and iterative methods such as K-means clustering. More recent variants on these classical clustering methods use (iterative) EM methods to estimate parameters in formal statistical models or use other forms of overlapping clusters to improve precision [22]. They may also represent subsets of the data by single points [23] or use incremental clustering to improve clustering speed on large data sets [20]. The EM and statistical methods tend to be slow, while the one-pass incremental methods tend to be less accurate. The canopies method described here differs from the above methods in that is makes use of two different similarity measures. By using the cruder similarity measure to quickly form canopies and then using the more refined similarity measure to form smaller clusters, both high speed and high precision are obtained. Closely related to the above methods are a large number of extensions to and variants on KD-trees [5] such as multiresolution KD-trees [15], which recursively partition the data into subgroups. Almost all of these methods suffer from doing hard partitions, where each item must be on a single side of each partition. Cheap, approximate similarity measures thus cannot be used, since if an item is put on the wrong side of a partition there is no way to later correct the error. KD-tree methods also typically scale poorly for items with large numbers of attributes, as splits are generally made on a single attribute. The balltrees method [17] does use overlapping regions, but still assumes that a tree structure can be made. Like the other KD-tree methods, it does not make use of the two (expensive and cheap) similarity measures upon which the canopies method is based. A separate line of work on clustering comes from users of databases. The record linkage [16, 4, 10] or merge–purge [7] problem occurs when a company purchases multiple databases, such as multiple mailing lists, and wishes to determine which records are near duplicates (i.e. refer to the same person) so that the lists can be merged and the duplicates purged. This is a special case of clustering problem addressed above, in which the clusters are small and items in each cluster are typically quite distinct from items in other clusters. In one approach to the merge–purge problem, multiple different keys are used to determine “duplicate” records, and the results of those different clusters are combined [7]. For example, a database is created by combining multiple databases which may contain duplicate or near duplicate records. This composite database is sorted separately on multiple keys (address, social security number, etc.). For each sorting, items within a small window of each other are checked to see if they are near duplicates, and if so combined. In a closely related approach, the database is sorted using an application-specific key (e.g. last name) and then a more expensive similarity is computed between items that are close in the sorted list [13, 14]. Like the other merge–purge researchers, Monge and Elkan assume that an exact match in a single field establishes whether two items are duplicates; our canopies algorithm allows for more complex comparisons, such as are used in clustering methods like K-means, and is more tolerant of noise. Explicitly assigning items to multiple canopies allows rigorous methods such as EM to be used within each canopy, and clear computational cost estimates to be made. Hylton [9] comes even closer to our canopies algorithm. He proposes a method of clustering references to published articles which uses a two step algorithm. In the first step, for each item (i.e. for each reference) a pool of potentially matching items is selected by doing three full-text searches, where the query for each search consists of one of the authors’ last names and two words from the title, selected at random. In the second step, all items in each pool are compared against the item used to generate the pool, using a string matching algorithm. He then forces all items which have been grouped together into a single group. For example, if items A and B were grouped together based on the queries about A and items B and C were grouped together in the queries about C, then A, B, and C would end up in the same group. Hylton’s method follows the spirit of canopies in that it does an initial rough grouping which is not a unique partition, followed by a more fine-grained comparison of items within each group. It differs from canopies in that he forms one group (“pool”, in his terms) for every single item. (In our