
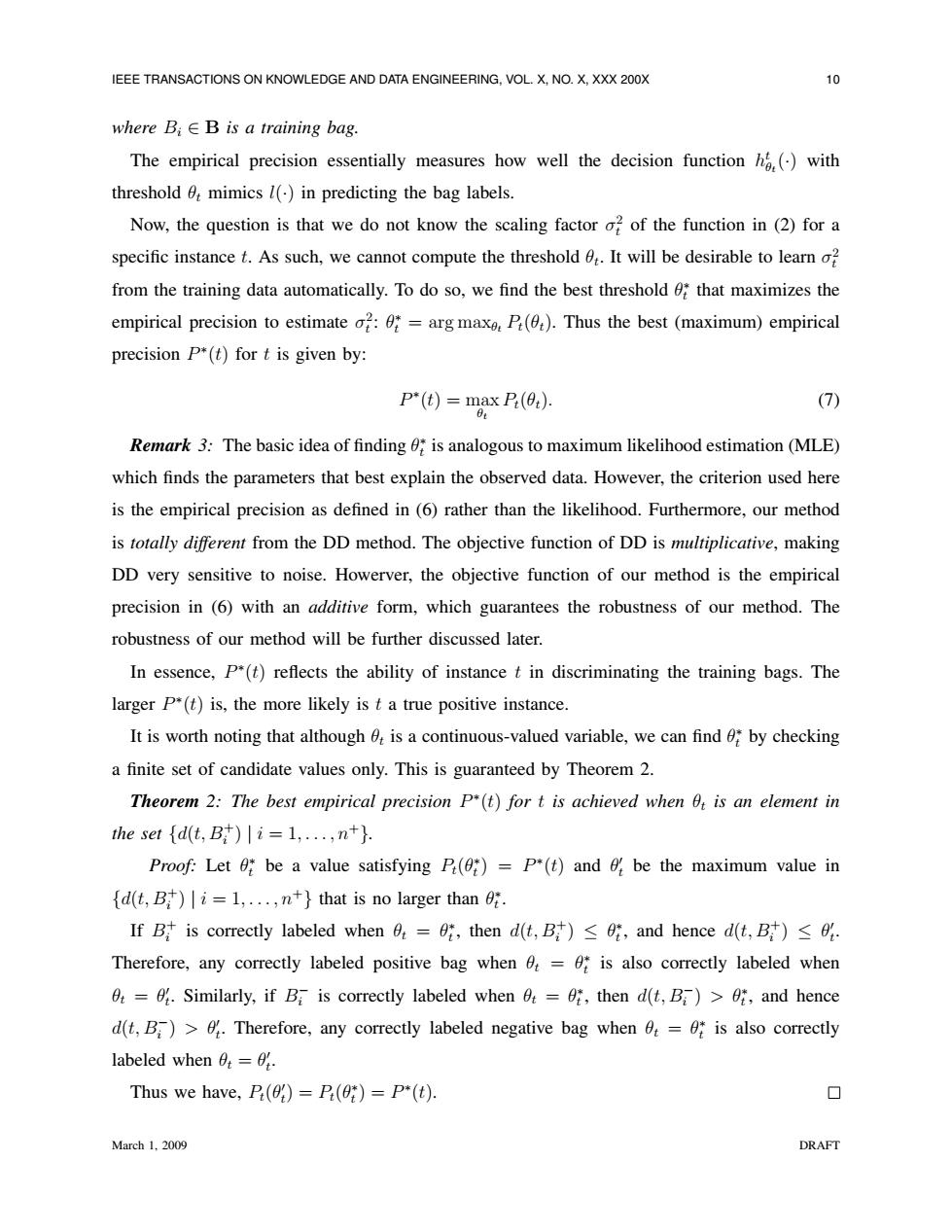

IEEE TRANSACTIONS ON KNOWLEDGE AND DATA ENGINEERING, VOL. X, NO. X, XXX 200X
where $B_i \in \mathcal{B}$ is a training bag. The empirical precision measures how well the decision function $h^t_{\theta_t}(\cdot)$ with threshold $\theta_t$ mimics $l(\cdot)$ in predicting the bag labels.

However, we do not know the scaling factor $\sigma_t^2$ of the function in (2) for a specific instance $t$, so we cannot compute the threshold $\theta_t$. It is therefore desirable to learn $\sigma_t^2$ from the training data automatically. To do so, we estimate $\sigma_t^2$ by finding the best threshold $\theta_t^*$, the one that maximizes the empirical precision:

$$\theta_t^* = \arg\max_{\theta_t} P_t(\theta_t).$$

The best (maximum) empirical precision $P^*(t)$ for $t$ is then given by

$$P^*(t) = \max_{\theta_t} P_t(\theta_t). \tag{7}$$

Remark 3: The basic idea of finding $\theta_t^*$ is analogous to maximum likelihood estimation (MLE), which finds the parameters that best explain the observed data. However, the criterion used here is the empirical precision defined in (6) rather than the likelihood. Furthermore, our method is fundamentally different from the DD method. The objective function of DD is multiplicative, which makes DD very sensitive to noise: a single bag whose factor is close to zero can drive the whole product toward zero. In contrast, the objective function of our method, the empirical precision in (6), has an additive form in which each training bag contributes only one term, and this underlies the robustness of our method. The robustness of our method is discussed further later.

In essence, $P^*(t)$ reflects the ability of instance $t$ to discriminate among the training bags: the larger $P^*(t)$ is, the more likely $t$ is to be a true positive instance. Although $\theta_t$ is a continuous-valued variable, $\theta_t^*$ can be found by checking only a finite set of candidate values, as guaranteed by Theorem 2.
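To make the estimation of $\theta_t^*$ concrete, the following is a minimal Python sketch that evaluates $P_t(\theta_t)$ on an evenly spaced grid of thresholds and keeps the best one. It rests on illustrative assumptions that this section does not confirm: $d(t, B)$ is taken to be the smallest Euclidean distance from $t$ to an instance of $B$, and the empirical precision (6) is taken to be the fraction of training bags that $h^t_{\theta_t}$ labels correctly, with a bag predicted positive when $d(t, B) \le \theta_t$. The helper names `bag_distance`, `empirical_precision` and `grid_search_theta` and the toy bags are hypothetical.

```python
import numpy as np

# Hypothetical d(t, B): smallest Euclidean distance from instance t to any
# instance of bag B (an assumption; d is defined elsewhere in the paper).
def bag_distance(t, bag):
    return float(np.min(np.linalg.norm(np.asarray(bag, dtype=float) - t, axis=1)))

def empirical_precision(theta, pos_dists, neg_dists):
    # Assumed form of (6): fraction of training bags labeled correctly when a
    # bag is predicted positive iff d(t, B) <= theta.
    correct = np.sum(pos_dists <= theta) + np.sum(neg_dists > theta)
    return correct / (pos_dists.size + neg_dists.size)

def grid_search_theta(t, pos_bags, neg_bags, num=1000):
    # Naive estimate of theta_t^*: evaluate P_t on an evenly spaced grid
    # from 0 up to the largest bag distance and keep the first maximizer.
    t = np.asarray(t, dtype=float)
    pos = np.array([bag_distance(t, b) for b in pos_bags])
    neg = np.array([bag_distance(t, b) for b in neg_bags])
    grid = np.linspace(0.0, max(pos.max(), neg.max()), num)
    scores = np.array([empirical_precision(th, pos, neg) for th in grid])
    best = int(np.argmax(scores))
    return float(grid[best]), float(scores[best])

# Toy data: distances from t are 0.1, 0.2, 3.0 (positive bags)
# and 1.0, 2.0 (negative bags).
t = np.array([0.0, 0.0])
pos_bags = [np.array([[0.0, 0.1], [5.0, 5.0]]),
            np.array([[0.2, 0.0]]),
            np.array([[3.0, 0.0], [4.0, 4.0]])]
neg_bags = [np.array([[1.0, 0.0]]),
            np.array([[0.0, 2.0], [6.0, 0.0]])]
theta_hat, p_hat = grid_search_theta(t, pos_bags, neg_bags)
# p_hat is 0.8 (four of the five bags labeled correctly); any threshold
# in [0.2, 1.0) attains it.
```

Because $P_t(\theta_t)$ is piecewise constant in $\theta_t$, a grid only approximates $\theta_t^*$ and can step over a narrow optimal interval entirely, which is why a finite, exact candidate set is valuable.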
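Theorem 2: The best empirical precision $P^*(t)$ for $t$ is achieved when $\theta_t$ is an element of the set $\{d(t, B_i^+) \mid i = 1, \ldots, n^+\}$.

Proof: Let $\theta_t^*$ be a value satisfying $P_t(\theta_t^*) = P^*(t)$, and let $\theta_t'$ be the maximum value in $\{d(t, B_i^+) \mid i = 1, \ldots, n^+\}$ that is no larger than $\theta_t^*$. If $B_i^+$ is correctly labeled when $\theta_t = \theta_t^*$, then $d(t, B_i^+) \le \theta_t^*$; since $d(t, B_i^+)$ belongs to the candidate set and $\theta_t'$ is the largest element of that set not exceeding $\theta_t^*$, it follows that $d(t, B_i^+) \le \theta_t'$. Therefore, any positive bag correctly labeled when $\theta_t = \theta_t^*$ is also correctly labeled when $\theta_t = \theta_t'$. Similarly, if $B_i^-$ is correctly labeled when $\theta_t = \theta_t^*$, then $d(t, B_i^-) > \theta_t^*$, and hence $d(t, B_i^-) > \theta_t'$ because $\theta_t' \le \theta_t^*$. Therefore, any negative bag correctly labeled when $\theta_t = \theta_t^*$ is also correctly labeled when $\theta_t = \theta_t'$. Thus $P_t(\theta_t') \ge P_t(\theta_t^*) = P^*(t)$, and since $P^*(t)$ is the maximum, we have $P_t(\theta_t') = P_t(\theta_t^*) = P^*(t)$.

March 1, 2009 DRAFT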
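Theorem 2 turns the search for $\theta_t^*$ into a loop over at most $n^+$ candidate thresholds. The Python sketch below does exactly that under illustrative assumptions not stated in this section: the hypothetical helper `bag_distance` takes $d(t, B)$ to be the minimum Euclidean distance from $t$ to the instances of $B$, and the hypothetical helper `empirical_precision` takes (6) to be the fraction of correctly labeled training bags, where a bag is predicted positive iff $d(t, B) \le \theta_t$. The function name `best_empirical_precision` and the toy bags are likewise illustrative.

```python
import numpy as np

# Hypothetical d(t, B): smallest Euclidean distance from t to an instance of B.
def bag_distance(t, bag):
    return float(np.min(np.linalg.norm(np.asarray(bag, dtype=float) - t, axis=1)))

def empirical_precision(theta, pos_d, neg_d):
    # Assumed form of (6): fraction of bags labeled correctly when a bag is
    # predicted positive iff d(t, B) <= theta.
    return (np.sum(pos_d <= theta) + np.sum(neg_d > theta)) / (pos_d.size + neg_d.size)

def best_empirical_precision(t, pos_bags, neg_bags):
    """Return (theta_t^*, P^*(t)), checking only the candidates of Theorem 2."""
    t = np.asarray(t, dtype=float)
    pos_d = np.array([bag_distance(t, b) for b in pos_bags])
    neg_d = np.array([bag_distance(t, b) for b in neg_bags])
    # Candidate set {d(t, B_i^+) | i = 1, ..., n^+}, sorted and de-duplicated.
    candidates = np.unique(pos_d)
    scores = np.array([empirical_precision(th, pos_d, neg_d) for th in candidates])
    k = int(np.argmax(scores))  # first (smallest) optimal candidate
    return float(candidates[k]), float(scores[k])

# Toy data: distances from t are 0.1, 0.2, 3.0 (positive bags)
# and 1.0, 2.0 (negative bags).
t = np.array([0.0, 0.0])
pos_bags = [np.array([[0.0, 0.1], [5.0, 5.0]]),
            np.array([[0.2, 0.0]]),
            np.array([[3.0, 0.0], [4.0, 4.0]])]
neg_bags = [np.array([[1.0, 0.0]]),
            np.array([[0.0, 2.0], [6.0, 0.0]])]
theta_star, p_star = best_empirical_precision(t, pos_bags, neg_bags)
# theta_star is 0.2, the distance to the second positive bag; p_star is 0.8.
```

Each candidate is scored with one pass over all $n^+ + n^-$ distances. Sorting the distances once would allow a single incremental sweep instead, but the direct loop mirrors the statement of the theorem more closely.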