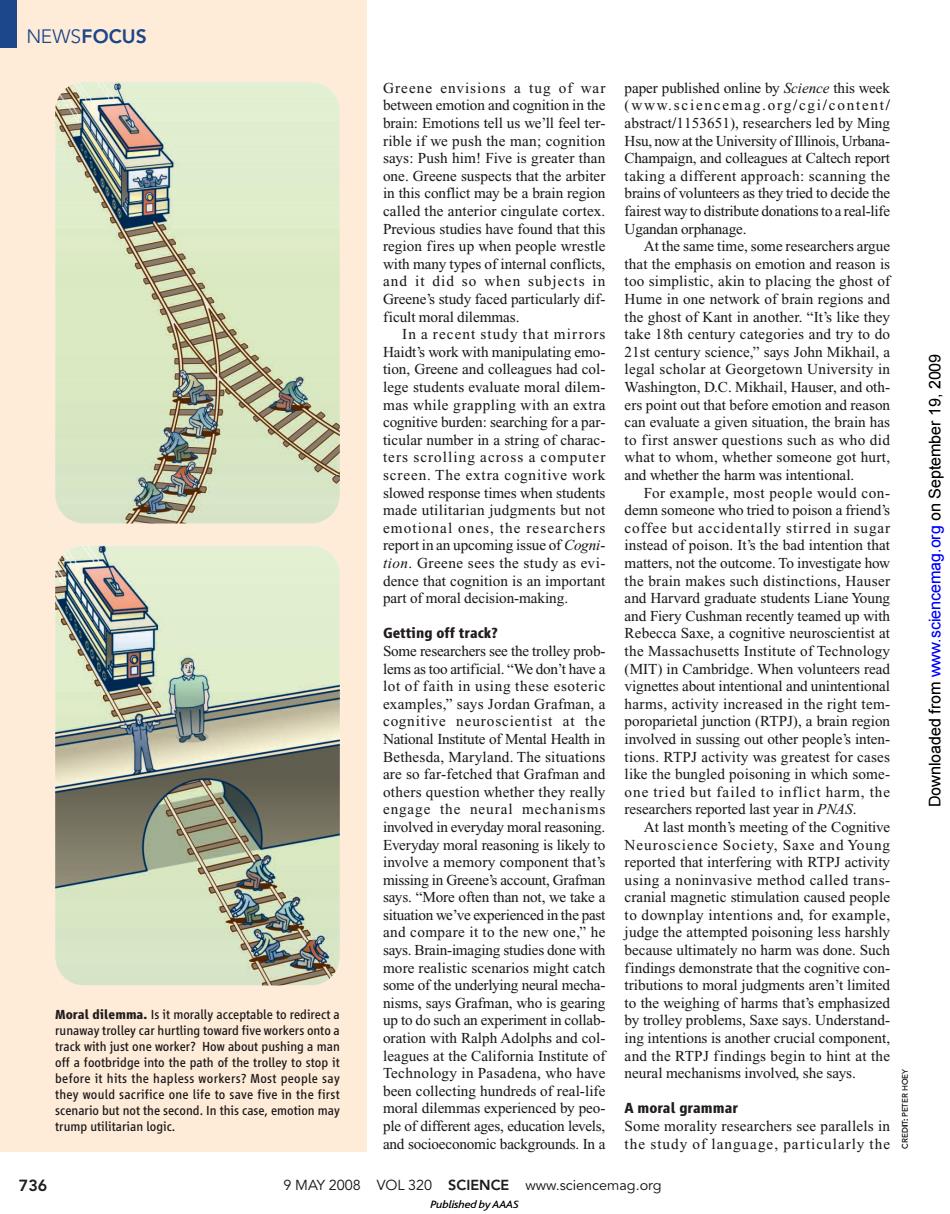

NEWSFOCUS

Greene envisions a tug of war between emotion and cognition in the brain: Emotions tell us we’ll feel terrible if we push the man; cognition says: Push him! Five is greater than one.
Greene suspects that the arbiter in this conflict may be a brain region called the anterior cingulate cortex. Previous studies have found that this region fires up when people wrestle with many types of internal conflicts, and it did so when subjects in Greene’s study faced particularly difficult moral dilemmas.

In a recent study that mirrors Haidt’s work with manipulating emotion, Greene and colleagues had college students evaluate moral dilemmas while grappling with an extra cognitive burden: searching for a particular number in a string of characters scrolling across a computer screen. The extra cognitive work slowed response times when students made utilitarian judgments but not emotional ones, the researchers report in an upcoming issue of Cognition. Greene sees the study as evidence that cognition is an important part of moral decision-making.

Getting off track?

Some researchers see the trolley problems as too artificial. “We don’t have a lot of faith in using these esoteric examples,” says Jordan Grafman, a cognitive neuroscientist at the National Institute of Mental Health in Bethesda, Maryland. The situations are so far-fetched that Grafman and others question whether they really engage the neural mechanisms involved in everyday moral reasoning. Everyday moral reasoning is likely to involve a memory component that’s missing in Greene’s account, Grafman says. “More often than not, we take a situation we’ve experienced in the past and compare it to the new one,” he says.

Brain-imaging studies done with more realistic scenarios might catch some of the underlying neural mechanisms, says Grafman, who is gearing up to do such an experiment in collaboration with Ralph Adolphs and colleagues at the California Institute of Technology in Pasadena, who have been collecting hundreds of real-life moral dilemmas experienced by people of different ages, education levels, and socioeconomic backgrounds.
In a paper published online by Science this week (www.sciencemag.org/cgi/content/abstract/1153651), researchers led by Ming Hsu, now at the University of Illinois, Urbana-Champaign, and colleagues at Caltech report taking a different approach: scanning the brains of volunteers as they tried to decide the fairest way to distribute donations to a real-life Ugandan orphanage.

At the same time, some researchers argue that the emphasis on emotion and reason is too simplistic, akin to placing the ghost of Hume in one network of brain regions and the ghost of Kant in another. “It’s like they take 18th century categories and try to do 21st century science,” says John Mikhail, a legal scholar at Georgetown University in Washington, D.C.

Mikhail, Hauser, and others point out that before emotion and reason can evaluate a given situation, the brain has to first answer questions such as who did what to whom, whether someone got hurt, and whether the harm was intentional. For example, most people would condemn someone who tried to poison a friend’s coffee but accidentally stirred in sugar instead of poison. It’s the bad intention that matters, not the outcome.

To investigate how the brain makes such distinctions, Hauser and Harvard graduate students Liane Young and Fiery Cushman recently teamed up with Rebecca Saxe, a cognitive neuroscientist at the Massachusetts Institute of Technology (MIT) in Cambridge. When volunteers read vignettes about intentional and unintentional harms, activity increased in the right temporoparietal junction (RTPJ), a brain region involved in sussing out other people’s intentions. RTPJ activity was greatest for cases like the bungled poisoning in which someone tried but failed to inflict harm, the researchers reported last year in PNAS.
At last month’s meeting of the Cognitive Neuroscience Society, Saxe and Young reported that interfering with RTPJ activity using a noninvasive method called transcranial magnetic stimulation caused people to downplay intentions and, for example, judge the attempted poisoning less harshly because ultimately no harm was done. Such findings demonstrate that the cognitive contributions to moral judgments aren’t limited to the weighing of harms that’s emphasized by trolley problems, Saxe says. Understanding intentions is another crucial component, and the RTPJ findings begin to hint at the neural mechanisms involved, she says.

A moral grammar

Some morality researchers see parallels in the study of language, particularly the

Moral dilemma. Is it morally acceptable to redirect a runaway trolley car hurtling toward five workers onto a track with just one worker? How about pushing a man off a footbridge into the path of the trolley to stop it before it hits the hapless workers? Most people say they would sacrifice one life to save five in the first scenario but not the second. In this case, emotion may trump utilitarian logic.
CREDIT: PETER HOEY

736 9 MAY 2008 VOL 320 SCIENCE www.sciencemag.org
Published by AAAS