正在加载图片...

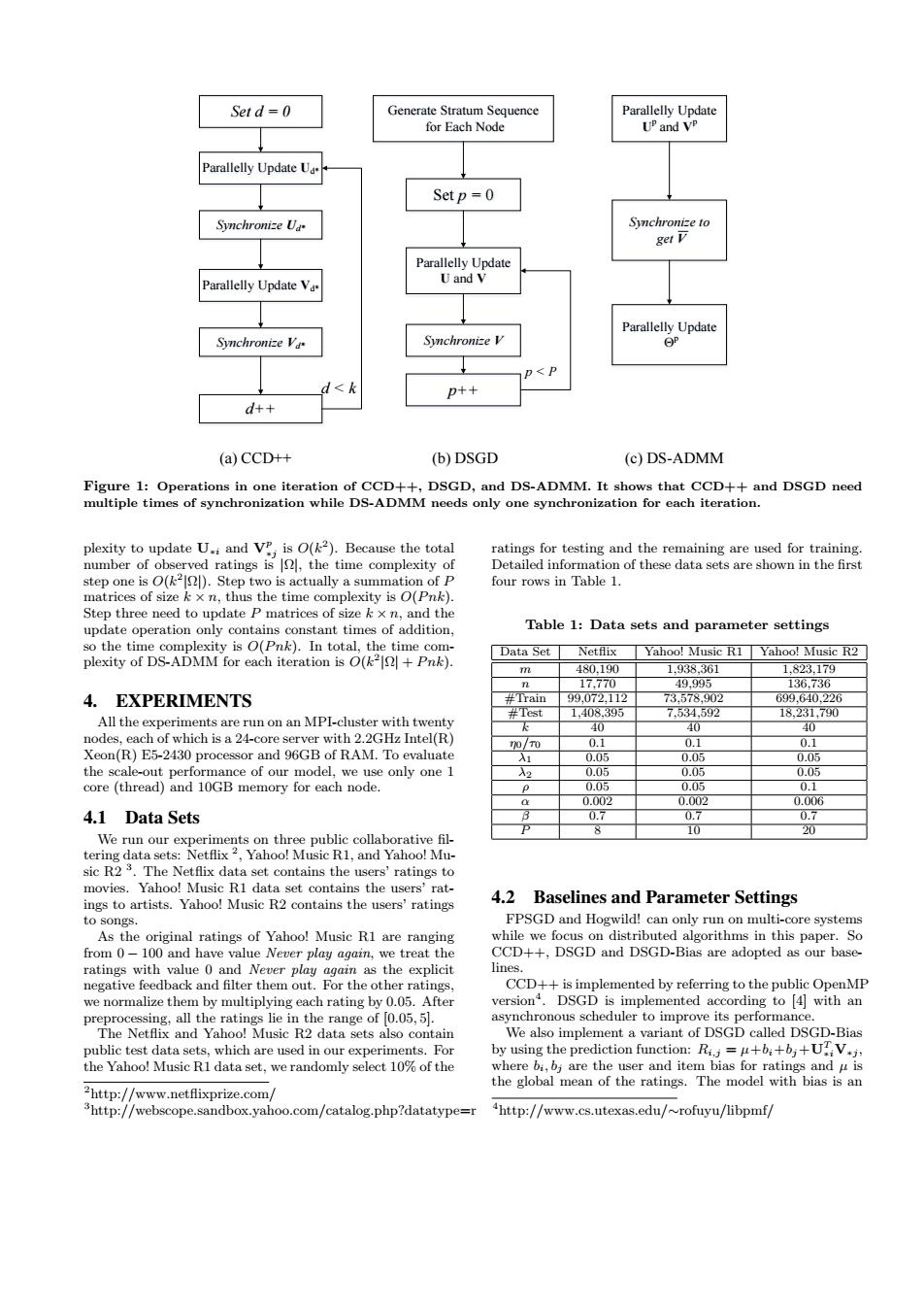

Setd=0 Generate Stratum Sequence Parallelly Update for Each Node UP and VP Parallelly Update U. Setp=0 Synchronize U Synchronize to get V Parallelly Update Parallelly Update Va U and V Parallelly Update Synchronize V Synchronize V ⊙A <P p++ d++ (a)CCD++ (b)DSGD (c)DS-ADMM Figure 1:Operations in one iteration of CCD++,DSGD,and DS-ADMM.It shows that CCD++and DSGD need multiple times of synchronization while DS-ADMM needs only one synchronization for each iteration. plexity to update U.and VP;is O(k2).Because the total ratings for testing and the remaining are used for training. number of observed ratings is the time complexity of Detailed information of these data sets are shown in the first step one is O().Step two is actually a summation of P four rows in Table 1. matrices of size k x n,thus the time complexity is O(Pnk). Step three need to update P matrices of size k x n,and the update operation only contains constant times of addition, Table 1:Data sets and parameter settings so the time complexity is O(Pnk).In total,the time com- Data Set Netflix Yahoo!Music R1 Yahoo!Music R2 plexity of DS-ADMM for each iteration is O(k2+Pnk). m 480.190 1.938,361 1,823.179 n 17.770 49.995 136.736 4.EXPERIMENTS #Train99,072,112 73.578,902 699.640.226 #Test 1.408,395 All the experiments are run on an MPI-cluster with twenty 7,534,592 18,231,790 40 40 40 nodes,each of which is a 24-core server with 2.2GHz Intel(R) 0/T0 0.1 0.1 0.1 Xeon(R)E5-2430 processor and 96GB of RAM.To evaluate 入1 0.05 0.05 0.05 the scale-out performance of our model,we use only one 1 A2 0.05 0.05 0.05 core (thread)and 10GB memory for each node. 005 0.05 0.1 0.002 0.002 0.006 4.1 Data Sets 0.7 0.7 0.7 8 10 20 We run our experiments on three public collaborative fil- tering data sets:Netflix2,Yahoo!Music R1,and Yahoo!Mu- sic R23.The Netflix data set contains the users'ratings to movies.Yahoo!Music RI data set contains the users'rat- ings to artists.Yahoo!Music R2 contains the users'ratings 4.2 Baselines and Parameter Settings to songs. FPSGD and Hogwild!can only run on multi-core systems As the original ratings of Yahoo!Music RI are ranging while we focus on distributed algorithms in this paper.So from 0-100 and have value Never play again,we treat the CCD++,DSGD and DSGD-Bias are adopted as our base- ratings with value 0 and Never play again as the explicit lines. negative feedback and filter them out.For the other ratings. CCD++is implemented by referring to the public OpenMP we normalize them by multiplying each rating by 0.05.After version.DSGD is implemented according to [4 with an preprocessing,all the ratings lie in the range of 0.05,5. asynchronous scheduler to improve its performance The Netflix and Yahoo!Music R2 data sets also contain We also implement a variant of DSGD called DSGD-Bias public test data sets,which are used in our experiments.For by using the prediction function:Ri.i=u+b:+6;+UV.i, the Yahoo!Music RI data set,we randomly select 10%of the where bi,bi are the user and item bias for ratings and u is the global mean of the ratings.The model with bias is an 2http://www.netflixprize.com/ 3http://webscope.sandbox.yahoo.com/catalog.php?datatype=r 4http://www.cs.utexas.edu/~rofuyu/libpmf/Set d = 0 Parallelly Update Ud* Synchronize Ud* d++ d < k Set p = 0 Parallelly Update U and V Synchronize V p++ p < P Generate Stratum Sequence for Each Node (a) CCD++ (b) DSGD (c) DS-ADMM Parallelly Update U p and V p Synchronize to get V Parallelly Update Θ p Parallelly Update Vd* Synchronize Vd* Figure 1: Operations in one iteration of CCD++, DSGD, and DS-ADMM. It shows that CCD++ and DSGD need multiple times of synchronization while DS-ADMM needs only one synchronization for each iteration. plexity to update U∗i and V p ∗j is O(k 2 ). Because the total number of observed ratings is |Ω|, the time complexity of step one is O(k 2 |Ω|). Step two is actually a summation of P matrices of size k × n, thus the time complexity is O(P nk). Step three need to update P matrices of size k × n, and the update operation only contains constant times of addition, so the time complexity is O(P nk). In total, the time complexity of DS-ADMM for each iteration is O(k 2 |Ω| + P nk). 4. EXPERIMENTS All the experiments are run on an MPI-cluster with twenty nodes, each of which is a 24-core server with 2.2GHz Intel(R) Xeon(R) E5-2430 processor and 96GB of RAM. To evaluate the scale-out performance of our model, we use only one 1 core (thread) and 10GB memory for each node. 4.1 Data Sets We run our experiments on three public collaborative filtering data sets: Netflix 2 , Yahoo! Music R1, and Yahoo! Music R2 3 . The Netflix data set contains the users’ ratings to movies. Yahoo! Music R1 data set contains the users’ ratings to artists. Yahoo! Music R2 contains the users’ ratings to songs. As the original ratings of Yahoo! Music R1 are ranging from 0 − 100 and have value Never play again, we treat the ratings with value 0 and Never play again as the explicit negative feedback and filter them out. For the other ratings, we normalize them by multiplying each rating by 0.05. After preprocessing, all the ratings lie in the range of [0.05, 5]. The Netflix and Yahoo! Music R2 data sets also contain public test data sets, which are used in our experiments. For the Yahoo! Music R1 data set, we randomly select 10% of the 2http://www.netflixprize.com/ 3http://webscope.sandbox.yahoo.com/catalog.php?datatype=r ratings for testing and the remaining are used for training. Detailed information of these data sets are shown in the first four rows in Table 1. Table 1: Data sets and parameter settings Data Set Netflix Yahoo! Music R1 Yahoo! Music R2 m 480,190 1,938,361 1,823,179 n 17,770 49,995 136,736 #Train 99,072,112 73,578,902 699,640,226 #Test 1,408,395 7,534,592 18,231,790 k 40 40 40 η0/τ0 0.1 0.1 0.1 λ1 0.05 0.05 0.05 λ2 0.05 0.05 0.05 ρ 0.05 0.05 0.1 α 0.002 0.002 0.006 β 0.7 0.7 0.7 P 8 10 20 4.2 Baselines and Parameter Settings FPSGD and Hogwild! can only run on multi-core systems while we focus on distributed algorithms in this paper. So CCD++, DSGD and DSGD-Bias are adopted as our baselines. CCD++ is implemented by referring to the public OpenMP version4 . DSGD is implemented according to [4] with an asynchronous scheduler to improve its performance. We also implement a variant of DSGD called DSGD-Bias by using the prediction function: Ri,j = µ+bi+bj+UT ∗iV∗j , where bi, bj are the user and item bias for ratings and µ is the global mean of the ratings. The model with bias is an 4http://www.cs.utexas.edu/∼rofuyu/libpmf/