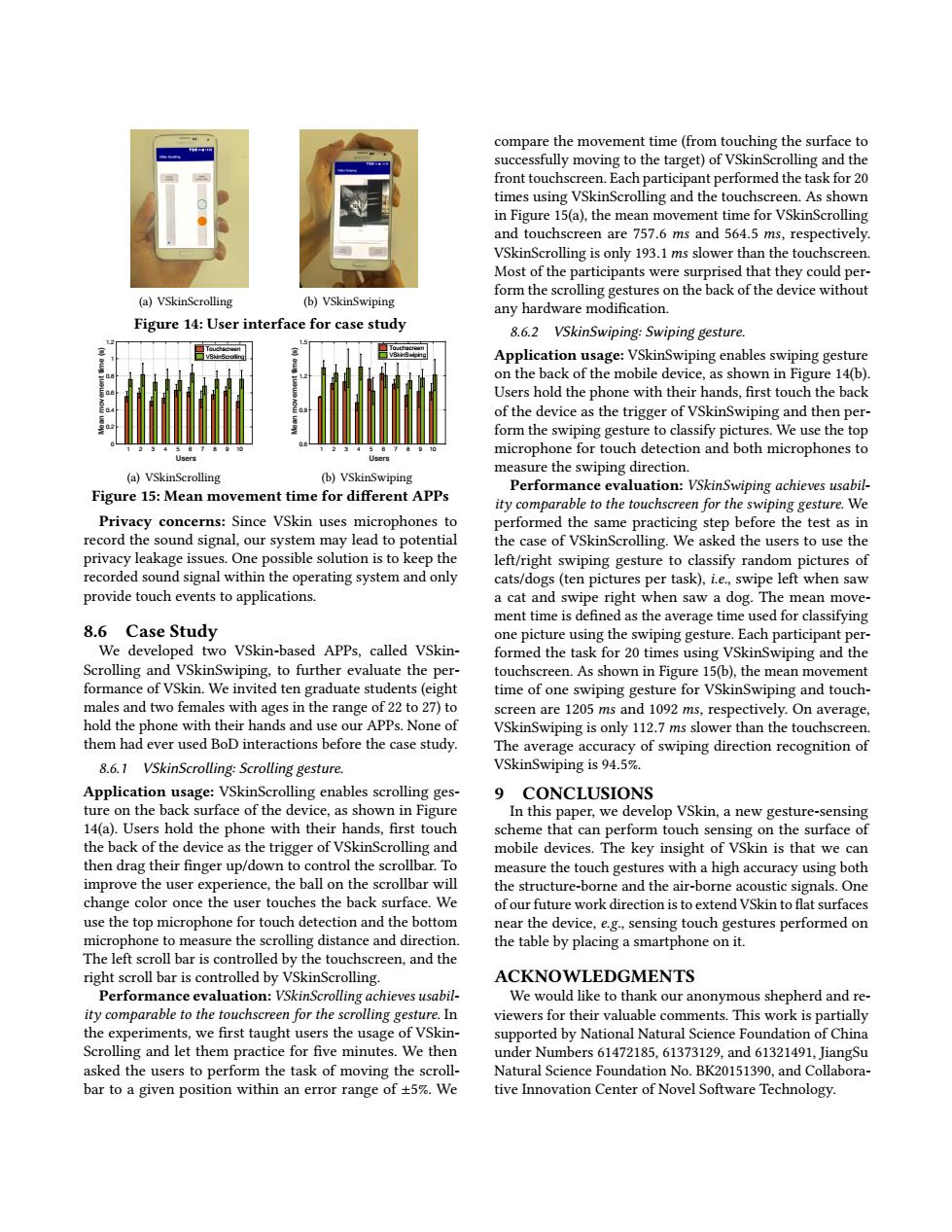

(a) VSkinScrolling (b) VSkinSwiping
Figure 14: User interface for case study

[Figure 15 plots: x-axis, users 1 to 10; y-axis, mean movement time (s); series, touchscreen versus the VSkin APP.]
(a) VSkinScrolling (b) VSkinSwiping
Figure 15: Mean movement time for different APPs

Privacy concerns: Since VSkin uses microphones to record the sound signal, our system may lead to privacy leakage. One possible solution is to keep the recorded sound signal within the operating system and only provide touch events to applications.

8.6 Case Study

We developed two VSkin-based APPs, called VSkinScrolling and VSkinSwiping, to further evaluate the performance of VSkin. We invited ten graduate students (eight males and two females, aged 22 to 27) to hold the phone with their hands and use our APPs. None of them had used BoD interactions before the case study.

8.6.1 VSkinScrolling: Scrolling gesture.

Application usage: VSkinScrolling enables scrolling gestures on the back surface of the device, as shown in Figure 14(a).
Users hold the phone with their hands, first touch the back of the device to trigger VSkinScrolling, and then drag their finger up or down to control the scrollbar. To improve the user experience, the ball on the scrollbar changes color once the user touches the back surface. We use the top microphone for touch detection and the bottom microphone to measure the scrolling distance and direction. The left scrollbar is controlled by the touchscreen, and the right scrollbar is controlled by VSkinScrolling.

Performance evaluation: VSkinScrolling achieves usability comparable to the touchscreen for the scrolling gesture. In the experiments, we first taught users how to use VSkinScrolling and let them practice for five minutes. We then asked the users to move the scrollbar to a given position within an error range of ±5%. We compared the movement time (from touching the surface to successfully reaching the target) of VSkinScrolling and the front touchscreen. Each participant performed the task 20 times using VSkinScrolling and the touchscreen. As shown in Figure 15(a), the mean movement times for VSkinScrolling and the touchscreen are 757.6 ms and 564.5 ms, respectively, so VSkinScrolling is only 193.1 ms slower than the touchscreen. Most of the participants were surprised that they could perform scrolling gestures on the back of the device without any hardware modification.

8.6.2 VSkinSwiping: Swiping gesture.

Application usage: VSkinSwiping enables swiping gestures on the back of the mobile device, as shown in Figure 14(b). Users hold the phone with their hands, first touch the back of the device to trigger VSkinSwiping, and then perform the swiping gesture to classify pictures. We use the top microphone for touch detection and both microphones to measure the swiping direction.

Performance evaluation: VSkinSwiping achieves usability comparable to the touchscreen for the swiping gesture.
We performed the same practice step before the test as for VSkinScrolling. We asked the users to use left/right swiping gestures to classify random pictures of cats and dogs (ten pictures per task), i.e., swipe left on seeing a cat and swipe right on seeing a dog. The mean movement time is defined as the average time used to classify one picture with the swiping gesture. Each participant performed the task 20 times using VSkinSwiping and the touchscreen. As shown in Figure 15(b), the mean movement times of one swiping gesture for VSkinSwiping and the touchscreen are 1205 ms and 1092 ms, respectively. On average, VSkinSwiping is only 112.7 ms slower than the touchscreen. The average accuracy of swiping direction recognition for VSkinSwiping is 94.5%.

9 CONCLUSIONS

In this paper, we develop VSkin, a new gesture-sensing scheme that can perform touch sensing on the surface of mobile devices. The key insight of VSkin is that we can measure touch gestures with high accuracy using both the structure-borne and the air-borne acoustic signals. One direction for our future work is to extend VSkin to flat surfaces near the device, e.g., sensing touch gestures performed on a table on which the smartphone is placed.

ACKNOWLEDGMENTS

We would like to thank our anonymous shepherd and reviewers for their valuable comments. This work is partially supported by the National Natural Science Foundation of China under Numbers 61472185, 61373129, and 61321491, JiangSu Natural Science Foundation No. BK20151390, and the Collaborative Innovation Center of Novel Software Technology.