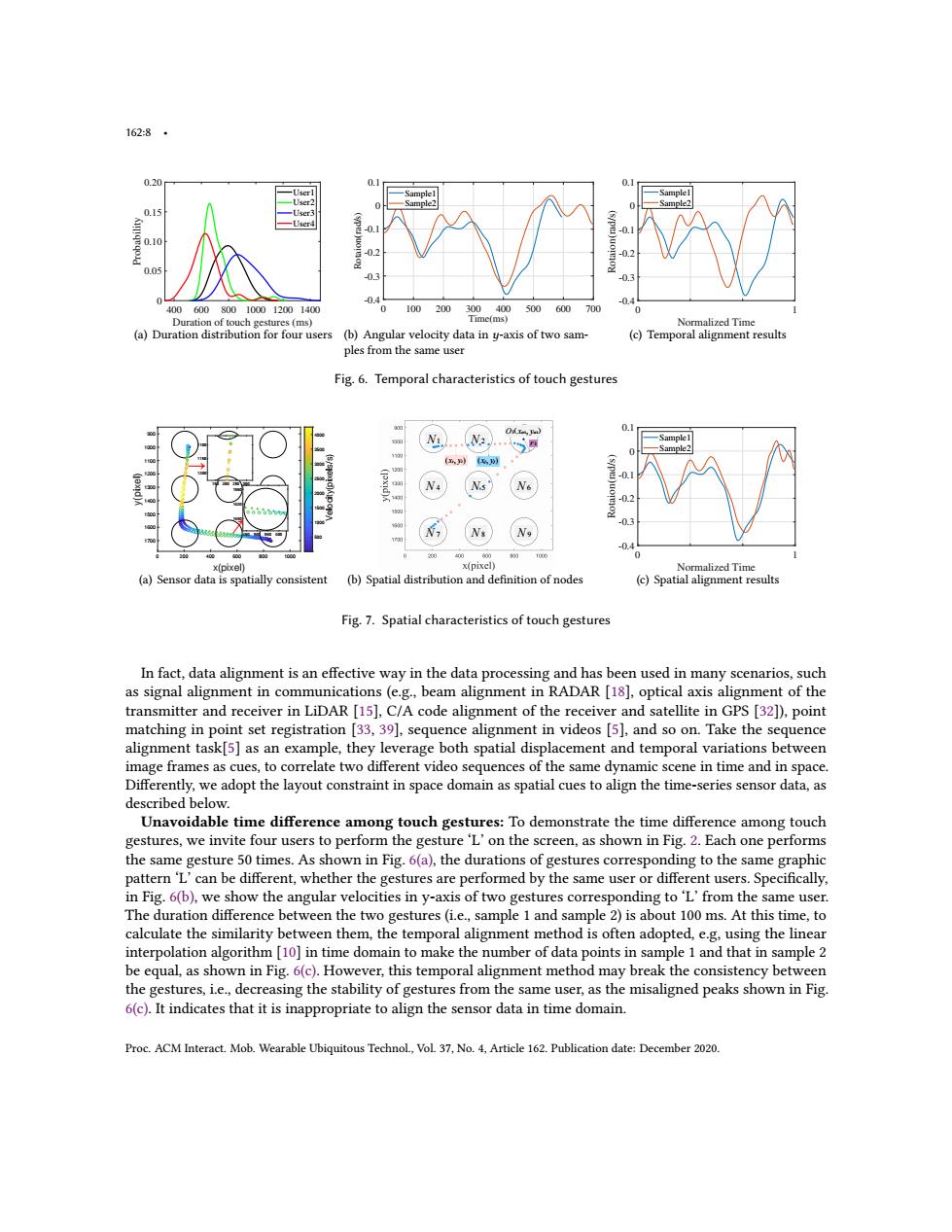

Fig. 6. Temporal characteristics of touch gestures. (a) Duration distribution for four users (x-axis: duration of touch gestures in ms; y-axis: probability; User1 to User4). (b) Angular velocity data in the y-axis of two samples from the same user (x-axis: time in ms; y-axis: rotation in rad/s; Sample1, Sample2). (c) Temporal alignment results (x-axis: normalized time; y-axis: rotation in rad/s; Sample1, Sample2).

Fig. 7. Spatial characteristics of touch gestures. (a) Sensor data is spatially consistent (x-axis: x in pixels; y-axis: y in pixels; color scale: velocity in pixels/s). (b) Spatial distribution and definition of nodes (nodes N1 to N9). (c) Spatial alignment results (x-axis: normalized time; y-axis: rotation in rad/s; Sample1, Sample2).

In fact, data alignment is an effective data processing technique and has been used in many scenarios, such as signal alignment in communications (e.g., beam alignment in RADAR [18], optical axis alignment of the transmitter and receiver in LiDAR [15], and C/A code alignment of the receiver and satellite in GPS [32]), point matching in point set registration [33, 39], and sequence alignment in videos [5]. Taking the sequence alignment task [5] as an example, the authors leverage both the spatial displacement and the temporal variations between image frames as cues to correlate two different video sequences of the same dynamic scene in time and in space. In contrast, we adopt the layout constraint in the space domain as a spatial cue to align the time-series sensor data, as described below.

Unavoidable time difference among touch gestures: To demonstrate the time difference among touch gestures, we invite four users to perform the gesture ‘L’ on the screen, as shown in Fig. 2. Each user performs the same gesture 50 times. As shown in Fig. 6(a), the durations of gestures corresponding to the same graphic pattern ‘L’ can differ, whether the gestures are performed by the same user or by different users. Specifically, Fig. 6(b) shows the angular velocities in the y-axis of two gestures corresponding to ‘L’ from the same user. The duration difference between the two gestures (i.e., sample 1 and sample 2) is about 100 ms. To calculate the similarity between them, a temporal alignment method is often adopted, e.g., using the linear interpolation algorithm [10] in the time domain to equalize the number of data points in sample 1 and sample 2, as shown in Fig. 6(c). However, this temporal alignment method may break the consistency between the gestures, i.e., decrease the stability of gestures from the same user, as the misaligned peaks in Fig. 6(c) show.
This indicates that it is inappropriate to align the sensor data in the time domain.

Proc. ACM Interact. Mob. Wearable Ubiquitous Technol., Vol. 37, No. 4, Article 162. Publication date: December 2020.
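
The temporal alignment baseline discussed above (linear interpolation onto a common number of data points) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes uniformly sampled readings, and the function names `resample_linear` and `temporally_align`, the target length of 50 points, and the sample values are all hypothetical.

```python
from typing import List, Sequence, Tuple


def resample_linear(samples: Sequence[float], num_points: int) -> List[float]:
    """Resample a uniformly sampled sequence to num_points values by
    linear interpolation over normalized time [0, 1]."""
    if len(samples) < 2 or num_points < 2:
        raise ValueError("need at least two input samples and two output points")
    last = len(samples) - 1
    out = []
    for k in range(num_points):
        # Position of the k-th output point, expressed in input-index units.
        pos = k * last / (num_points - 1)
        i = min(int(pos), last - 1)  # index of the left neighbour
        frac = pos - i               # fractional distance towards the right neighbour
        out.append(samples[i] * (1.0 - frac) + samples[i + 1] * frac)
    return out


def temporally_align(
    a: Sequence[float], b: Sequence[float], num_points: int = 50
) -> Tuple[List[float], List[float]]:
    """Bring two gestures of different durations onto a common length."""
    return resample_linear(a, num_points), resample_linear(b, num_points)


def argmin(values: Sequence[float]) -> int:
    """Index of the smallest value (the negative peak in this example)."""
    return min(range(len(values)), key=lambda i: values[i])


# Hypothetical y-axis angular velocities (rad/s) of two gestures from one user.
# The recordings differ in length, and the peak occurs at different relative times.
sample1 = [0.0, -0.1, -0.3, -0.1, 0.0, 0.05, 0.0]
sample2 = [0.0, -0.02, -0.05, -0.1, -0.2, -0.3, -0.1, 0.0, 0.05, 0.0]

aligned1, aligned2 = temporally_align(sample1, sample2, num_points=50)
print(len(aligned1), len(aligned2))
print(argmin(aligned1), argmin(aligned2))
```

Both outputs have the same number of points, but because each gesture is stretched uniformly over its whole duration, a peak that occurs at a different relative time in each recording stays at a different index after resampling. This is one plausible reading of the misaligned peaks reported for Fig. 6(c).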