正在加载图片...

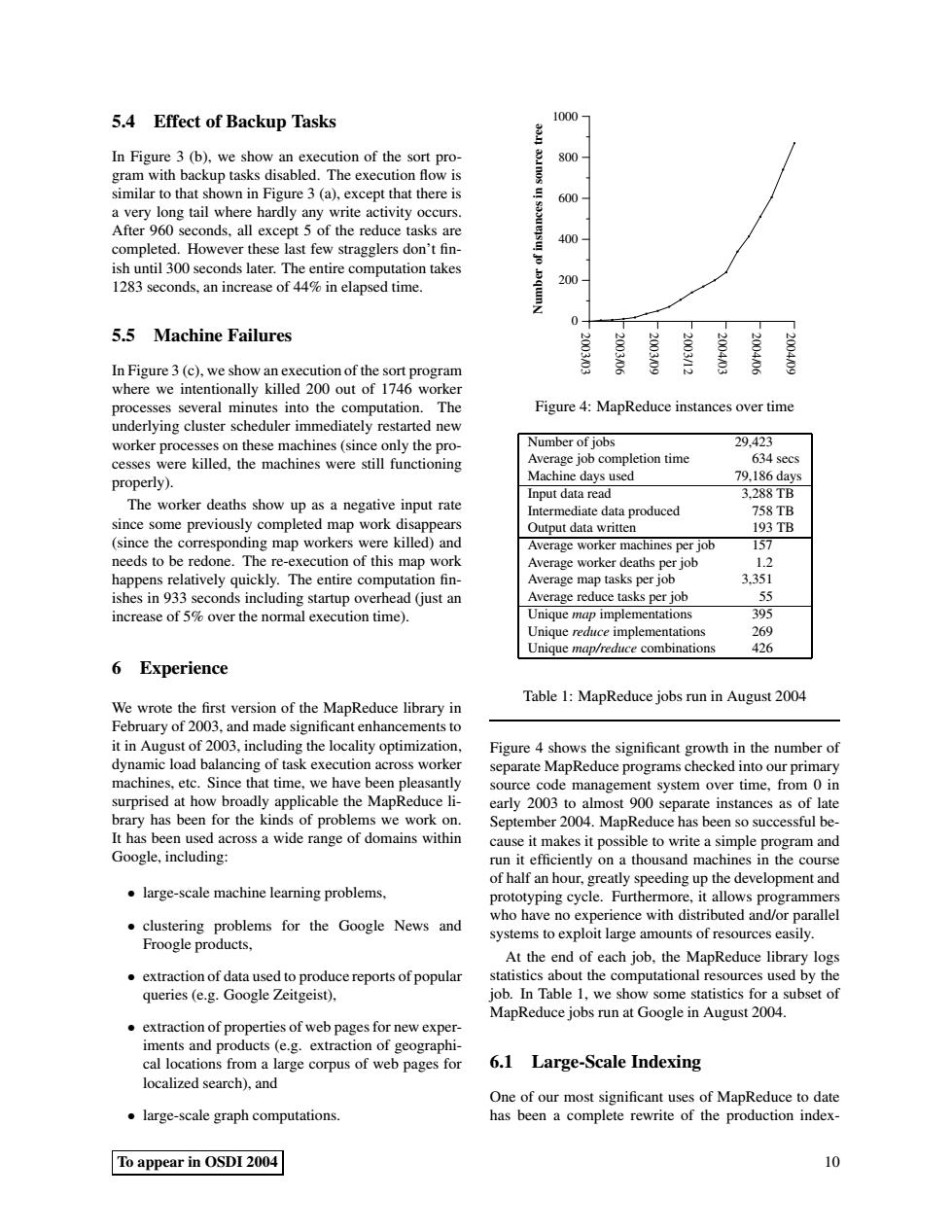

5.4 Effect of Backup Tasks 1000 In Figure 3(b),we show an execution of the sort pro- 800 gram with backup tasks disabled.The execution flow is similar to that shown in Figure 3 (a),except that there is 600 a very long tail where hardly any write activity occurs. After 960 seconds,all except 5 of the reduce tasks are 400 completed.However these last few stragglers don't fin- ish until 300 seconds later.The entire computation takes 1283 seconds,an increase of 44%in elapsed time. qu 200 5.5 Machine Failures 2003/09 200403 2004006 2004009 In Figure 3(c),we show an execution of the sort program 3/06 200312 where we intentionally killed 200 out of 1746 worker processes several minutes into the computation.The Figure 4:MapReduce instances over time underlying cluster scheduler immediately restarted new worker processes on these machines (since only the pro- Number of jobs 29.423 cesses were killed,the machines were still functioning Average job completion time 634 secs properly). Machine days used 79,186days Input data read 3.288TB The worker deaths show up as a negative input rate Intermediate data produced 758TB since some previously completed map work disappears Output data written 193TB (since the corresponding map workers were killed)and Average worker machines per job 157 needs to be redone.The re-execution of this map work Average worker deaths per job 1.2 happens relatively quickly.The entire computation fin- Average map tasks per job 3.351 ishes in 933 seconds including startup overhead (just an Average reduce tasks per job 55 increase of 5%over the normal execution time). Unique map implementations 395 Unique reduce implementations 269 Unique map/reduce combinations 426 Experience Table 1:MapReduce jobs run in August 2004 We wrote the first version of the MapReduce library in February of 2003,and made significant enhancements to it in August of 2003,including the locality optimization, Figure 4 shows the significant growth in the number of dynamic load balancing of task execution across worker separate MapReduce programs checked into our primary machines,etc.Since that time,we have been pleasantly source code management system over time,from 0 in surprised at how broadly applicable the MapReduce li- early 2003 to almost 900 separate instances as of late brary has been for the kinds of problems we work on. September 2004.MapReduce has been so successful be- It has been used across a wide range of domains within cause it makes it possible to write a simple program and Google,including: run it efficiently on a thousand machines in the course of half an hour,greatly speeding up the development and large-scale machine learning problems, prototyping cycle.Furthermore,it allows programmers who have no experience with distributed and/or parallel .clustering problems for the Google News and systems to exploit large amounts of resources easily. Froogle products, At the end of each job.the MapReduce library logs .extraction of data used to produce reports of popular statistics about the computational resources used by the queries (e.g.Google Zeitgeist), job.In Table 1,we show some statistics for a subset of MapReduce jobs run at Google in August 2004. extraction of properties of web pages for new exper- iments and products (e.g.extraction of geographi- cal locations from a large corpus of web pages for 6.1 Large-Scale Indexing localized search),and One of our most significant uses of MapReduce to date large-scale graph computations has been a complete rewrite of the production index- To appear in OSDI 2004 105.4 Effect of Backup Tasks In Figure 3 (b), we show an execution of the sort program with backup tasks disabled. The execution flow is similar to that shown in Figure 3 (a), except that there is a very long tail where hardly any write activity occurs. After 960 seconds, all except 5 of the reduce tasks are completed. However these last few stragglers don’t finish until 300 seconds later. The entire computation takes 1283 seconds, an increase of 44% in elapsed time. 5.5 Machine Failures In Figure 3 (c), we show an execution of the sort program where we intentionally killed 200 out of 1746 worker processes several minutes into the computation. The underlying cluster scheduler immediately restarted new worker processes on these machines (since only the processes were killed, the machines were still functioning properly). The worker deaths show up as a negative input rate since some previously completed map work disappears (since the corresponding map workers were killed) and needs to be redone. The re-execution of this map work happens relatively quickly. The entire computation finishes in 933 seconds including startup overhead (just an increase of 5% over the normal execution time). 6 Experience We wrote the first version of the MapReduce library in February of 2003, and made significant enhancements to it in August of 2003, including the locality optimization, dynamic load balancing of task execution across worker machines, etc. Since that time, we have been pleasantly surprised at how broadly applicable the MapReduce library has been for the kinds of problems we work on. It has been used across a wide range of domains within Google, including: • large-scale machine learning problems, • clustering problems for the Google News and Froogle products, • extraction of data used to produce reports of popular queries (e.g. Google Zeitgeist), • extraction of properties of web pages for new experiments and products (e.g. extraction of geographical locations from a large corpus of web pages for localized search), and • large-scale graph computations. 2003/03 2003/06 2003/09 2003/12 2004/03 2004/06 2004/09 0 200 400 600 800 1000 Number of instances in source tree Figure 4: MapReduce instances over time Number of jobs 29,423 Average job completion time 634 secs Machine days used 79,186 days Input data read 3,288 TB Intermediate data produced 758 TB Output data written 193 TB Average worker machines per job 157 Average worker deaths per job 1.2 Average map tasks per job 3,351 Average reduce tasks per job 55 Unique map implementations 395 Unique reduce implementations 269 Unique map/reduce combinations 426 Table 1: MapReduce jobs run in August 2004 Figure 4 shows the significant growth in the number of separate MapReduce programs checked into our primary source code management system over time, from 0 in early 2003 to almost 900 separate instances as of late September 2004. MapReduce has been so successful because it makes it possible to write a simple program and run it efficiently on a thousand machines in the course of half an hour, greatly speeding up the development and prototyping cycle. Furthermore, it allows programmers who have no experience with distributed and/or parallel systems to exploit large amounts of resources easily. At the end of each job, the MapReduce library logs statistics about the computational resources used by the job. In Table 1, we show some statistics for a subset of MapReduce jobs run at Google in August 2004. 6.1 Large-Scale Indexing One of our most significant uses of MapReduce to date has been a complete rewrite of the production indexTo appear in OSDI 2004 10