
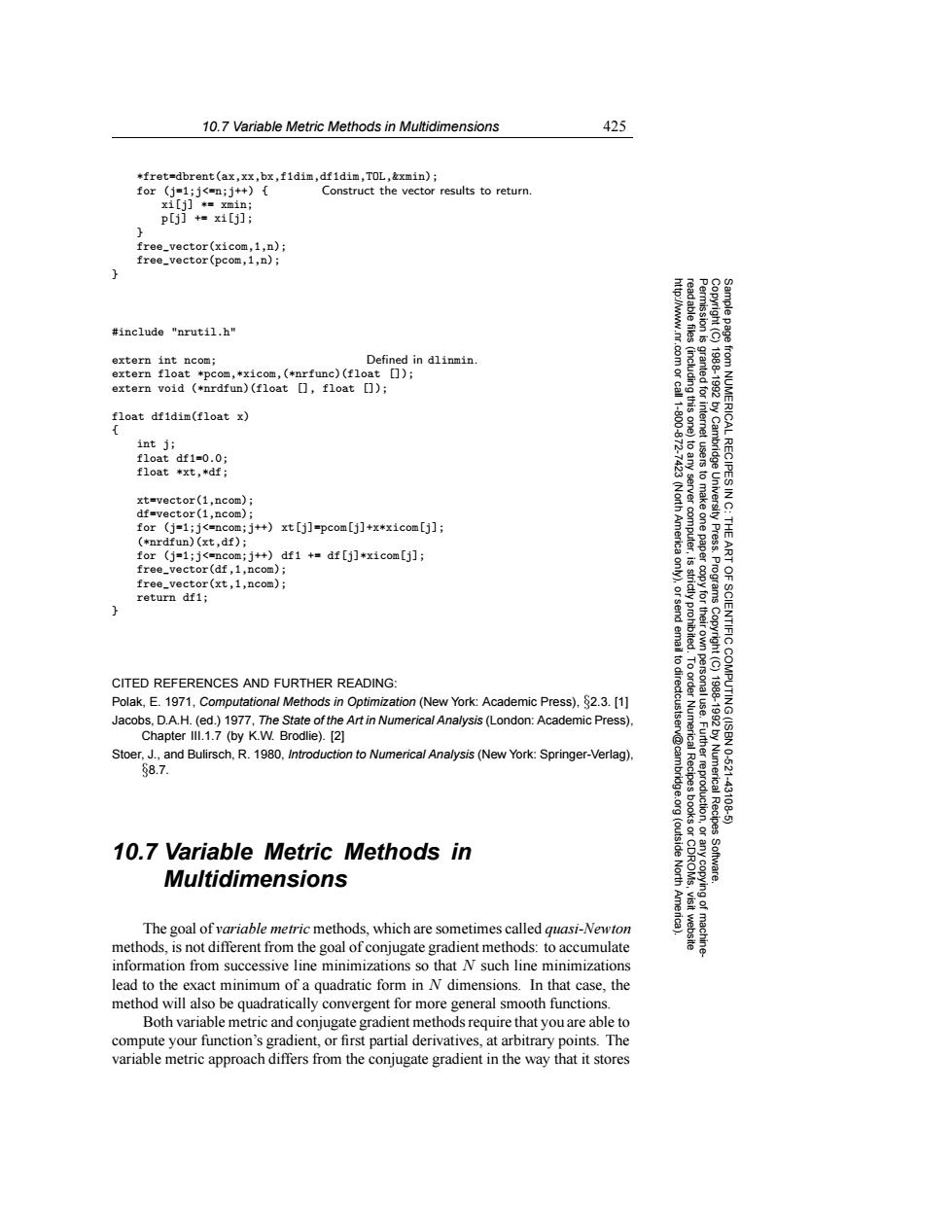

10.7 Variable Metric Methods in Multidimensions 425

Sample page from NUMERICAL RECIPES IN C: THE ART OF SCIENTIFIC COMPUTING (ISBN 0-521-43108-5). Copyright (C) 1988-1992 by Cambridge University Press. Programs Copyright (C) 1988-1992 by Numerical Recipes Software. Permission is granted for internet users to make one paper copy for their own personal use. Further reproduction, or any copying of machine-readable files (including this one) to any server computer, is strictly prohibited. To order Numerical Recipes books or CDROMs, visit website http://www.nr.com or call 1-800-872-7423 (North America only), or send email to directcustserv@cambridge.org (outside North America).

```c
/* Tail of dlinmin, continued from the preceding page. */
    *fret=dbrent(ax,xx,bx,f1dim,df1dim,TOL,&xmin);
    for (j=1;j<=n;j++) {      /* Construct the vector results to return. */
        xi[j] *= xmin;        /* Scale the direction to the actual step taken. */
        p[j] += xi[j];        /* Move p to the minimum along the line. */
    }
    free_vector(xicom,1,n);
    free_vector(pcom,1,n);
}
```

```c
#include "nrutil.h"

extern int ncom;              /* Defined in dlinmin. */
extern float *pcom,*xicom,(*nrfunc)(float []);
extern void (*nrdfun)(float [], float []);

float df1dim(float x)
{
    int j;
    float df1=0.0;
    float *xt,*df;

    xt=vector(1,ncom);
    df=vector(1,ncom);
    for (j=1;j<=ncom;j++) xt[j]=pcom[j]+x*xicom[j];   /* Point on the line. */
    (*nrdfun)(xt,df);                                 /* Gradient at that point. */
    for (j=1;j<=ncom;j++) df1 += df[j]*xicom[j];      /* Derivative along xicom. */
    free_vector(df,1,ncom);
    free_vector(xt,1,ncom);
    return df1;
}
```
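The routine `df1dim` evaluates the derivative of the function along the line through `pcom` in direction `xicom`; by the chain rule this is the gradient at `pcom + x*xicom` dotted with `xicom`. What follows is a minimal standalone sketch of that same computation, assuming 0-based `double` arrays in place of the `nrutil.h` vectors. The test function `func`, its gradient `dfunc`, and the helpers `f_line` and `df_line` are hypothetical names chosen for illustration, not Numerical Recipes routines.

```c
/* Sketch of the quantity df1dim computes, using 0-based double arrays
   instead of nrutil.h vectors. func, dfunc, f_line and df_line are
   hypothetical names used only for this illustration. */

#define N 2

/* Hypothetical test function: f(x) = (x0 - 1)^2 + 10 (x1 + 2)^2. */
static double func(const double x[N])
{
    return (x[0] - 1.0) * (x[0] - 1.0) + 10.0 * (x[1] + 2.0) * (x[1] + 2.0);
}

/* Analytic gradient of func. */
static void dfunc(const double x[N], double g[N])
{
    g[0] = 2.0 * (x[0] - 1.0);
    g[1] = 20.0 * (x[1] + 2.0);
}

/* f restricted to the line p + t*xi (the role f1dim plays for dlinmin). */
static double f_line(const double p[N], const double xi[N], double t)
{
    double xt[N];
    for (int j = 0; j < N; j++) xt[j] = p[j] + t * xi[j];
    return func(xt);
}

/* d/dt of f_line: by the chain rule, grad f(p + t*xi) . xi
   (the role df1dim plays for dlinmin). */
static double df_line(const double p[N], const double xi[N], double t)
{
    double xt[N], g[N], d = 0.0;
    for (int j = 0; j < N; j++) xt[j] = p[j] + t * xi[j];
    dfunc(xt, g);
    for (int j = 0; j < N; j++) d += g[j] * xi[j];
    return d;
}
```

For example, with `p = {0, 0}`, `xi = {1, -0.5}` and `t = 0.3`, `df_line` returns -19.9 up to rounding, and the central difference `(f_line(p, xi, t + h) - f_line(p, xi, t - h)) / (2h)` with `h = 1e-5` agrees closely. Such a comparison is a cheap way to check a hand-coded gradient before handing it to a derivative-based line minimizer.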
10.7 Variable Metric Methods in Multidimensions

The goal of variable metric methods, which are sometimes called quasi-Newton methods, is the same as the goal of conjugate gradient methods: to accumulate information from successive line minimizations so that N such line minimizations lead to the exact minimum of a quadratic form in N dimensions. In that case, the method will also be quadratically convergent for more general smooth functions.

Both variable metric and conjugate gradient methods require that you be able to compute your function's gradient, or first partial derivatives, at arbitrary points. The variable metric approach differs from the conjugate gradient approach in the way that it stores