正在加载图片...

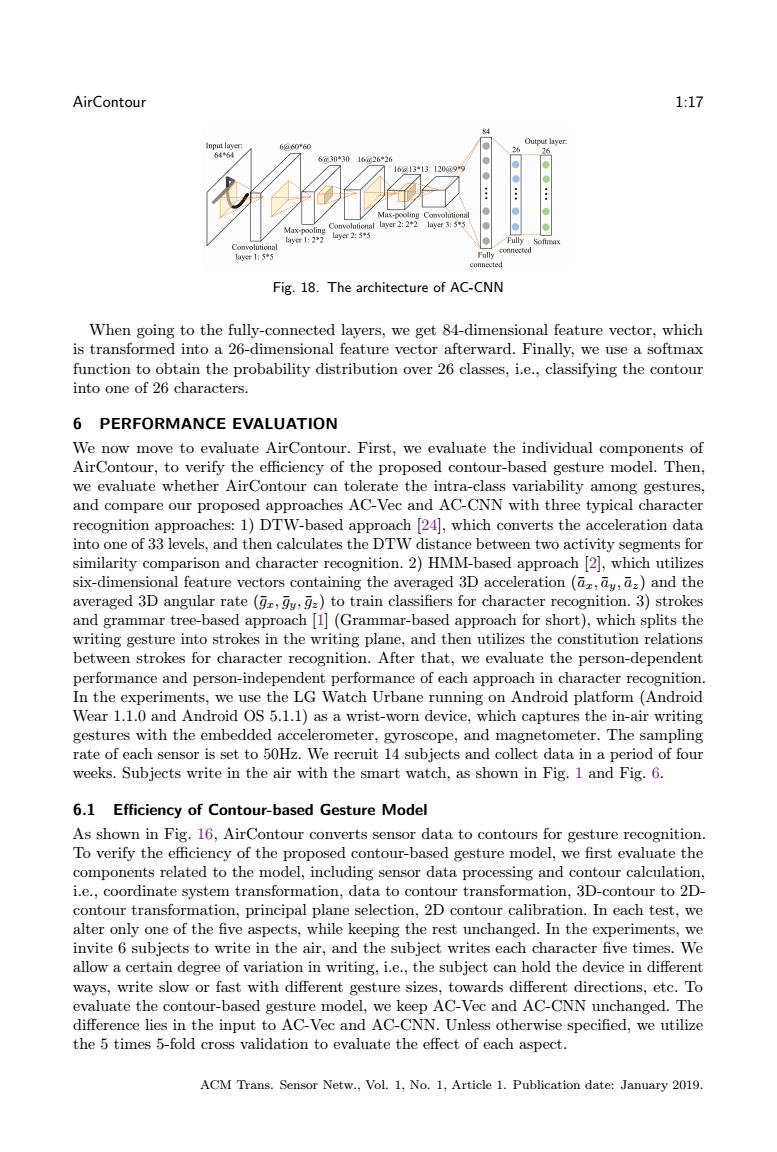

AirContour 1:17 Input layer 6@60*6d 646 126中26 Max-pooling Convolutional layer 2:2 6yt1:22 layer 2:5*5 Fully Fully connected connected Fig.18.The architecture of AC-CNN When going to the fully-connected layers,we get 84-dimensional feature vector,which is transformed into a 26-dimensional feature vector afterward.Finally,we use a softmax function to obtain the probability distribution over 26 classes,i.e.,classifying the contour into one of 26 characters. 6 PERFORMANCE EVALUATION We now move to evaluate AirContour.First,we evaluate the individual components of AirContour,to verify the efficiency of the proposed contour-based gesture model.Then, we evaluate whether AirContour can tolerate the intra-class variability among gestures and compare our proposed approaches AC-Vec and AC-CNN with three typical character recognition approaches:1)DTW-based approach [24],which converts the acceleration data into one of 33 levels,and then calculates the DTW distance between two activity segments for similarity comparison and character recognition.2)HMM-based approach [2],which utilizes six-dimensional feature vectors containing the averaged 3D acceleration(az,ay,a)and the averaged 3D angular rate (g,y,g)to train classifiers for character recognition.3)strokes and grammar tree-based approach [1](Grammar-based approach for short),which splits the writing gesture into strokes in the writing plane,and then utilizes the constitution relations between strokes for character recognition.After that,we evaluate the person-dependent performance and person-independent performance of each approach in character recognition In the experiments,we use the LG Watch Urbane running on Android platform (Android Wear 1.1.0 and Android OS 5.1.1)as a wrist-worn device,which captures the in-air writing gestures with the embedded accelerometer,gyroscope,and magnetometer.The sampling rate of each sensor is set to 50Hz.We recruit 14 subjects and collect data in a period of four weeks.Subjects write in the air with the smart watch,as shown in Fig.1 and Fig.6. 6.1 Efficiency of Contour-based Gesture Model As shown in Fig.16,AirContour converts sensor data to contours for gesture recognition. To verify the efficiency of the proposed contour-based gesture model,we first evaluate the components related to the model,including sensor data processing and contour calculation, i.e.,coordinate system transformation,data to contour transformation,3D-contour to 2D- contour transformation,principal plane selection,2D contour calibration.In each test,we alter only one of the five aspects,while keeping the rest unchanged.In the experiments,we invite 6 subjects to write in the air,and the subject writes each character five times.We allow a certain degree of variation in writing,i.e.,the subject can hold the device in different ways,write slow or fast with different gesture sizes,towards different directions,etc.To evaluate the contour-based gesture model,we keep AC-Vec and AC-CNN unchanged.The difference lies in the input to AC-Vec and AC-CNN.Unless otherwise specified,we utilize the 5 times 5-fold cross validation to evaluate the effect of each aspect. ACM Trans.Sensor Netw.,Vol.1,No.1,Article 1.Publication date:January 2019.AirContour 1:17 Fig. 18. The architecture of AC-CNN When going to the fully-connected layers, we get 84-dimensional feature vector, which is transformed into a 26-dimensional feature vector afterward. Finally, we use a softmax function to obtain the probability distribution over 26 classes, i.e., classifying the contour into one of 26 characters. 6 PERFORMANCE EVALUATION We now move to evaluate AirContour. First, we evaluate the individual components of AirContour, to verify the efficiency of the proposed contour-based gesture model. Then, we evaluate whether AirContour can tolerate the intra-class variability among gestures, and compare our proposed approaches AC-Vec and AC-CNN with three typical character recognition approaches: 1) DTW-based approach [24], which converts the acceleration data into one of 33 levels, and then calculates the DTW distance between two activity segments for similarity comparison and character recognition. 2) HMM-based approach [2], which utilizes six-dimensional feature vectors containing the averaged 3D acceleration (𝑎¯𝑥, 𝑎¯𝑦, 𝑎¯𝑧) and the averaged 3D angular rate (𝑔¯𝑥, 𝑔¯𝑦, 𝑔¯𝑧) to train classifiers for character recognition. 3) strokes and grammar tree-based approach [1] (Grammar-based approach for short), which splits the writing gesture into strokes in the writing plane, and then utilizes the constitution relations between strokes for character recognition. After that, we evaluate the person-dependent performance and person-independent performance of each approach in character recognition. In the experiments, we use the LG Watch Urbane running on Android platform (Android Wear 1.1.0 and Android OS 5.1.1) as a wrist-worn device, which captures the in-air writing gestures with the embedded accelerometer, gyroscope, and magnetometer. The sampling rate of each sensor is set to 50Hz. We recruit 14 subjects and collect data in a period of four weeks. Subjects write in the air with the smart watch, as shown in Fig. 1 and Fig. 6. 6.1 Efficiency of Contour-based Gesture Model As shown in Fig. 16, AirContour converts sensor data to contours for gesture recognition. To verify the efficiency of the proposed contour-based gesture model, we first evaluate the components related to the model, including sensor data processing and contour calculation, i.e., coordinate system transformation, data to contour transformation, 3D-contour to 2Dcontour transformation, principal plane selection, 2D contour calibration. In each test, we alter only one of the five aspects, while keeping the rest unchanged. In the experiments, we invite 6 subjects to write in the air, and the subject writes each character five times. We allow a certain degree of variation in writing, i.e., the subject can hold the device in different ways, write slow or fast with different gesture sizes, towards different directions, etc. To evaluate the contour-based gesture model, we keep AC-Vec and AC-CNN unchanged. The difference lies in the input to AC-Vec and AC-CNN. Unless otherwise specified, we utilize the 5 times 5-fold cross validation to evaluate the effect of each aspect. ACM Trans. Sensor Netw., Vol. 1, No. 1, Article 1. Publication date: January 2019