正在加载图片...

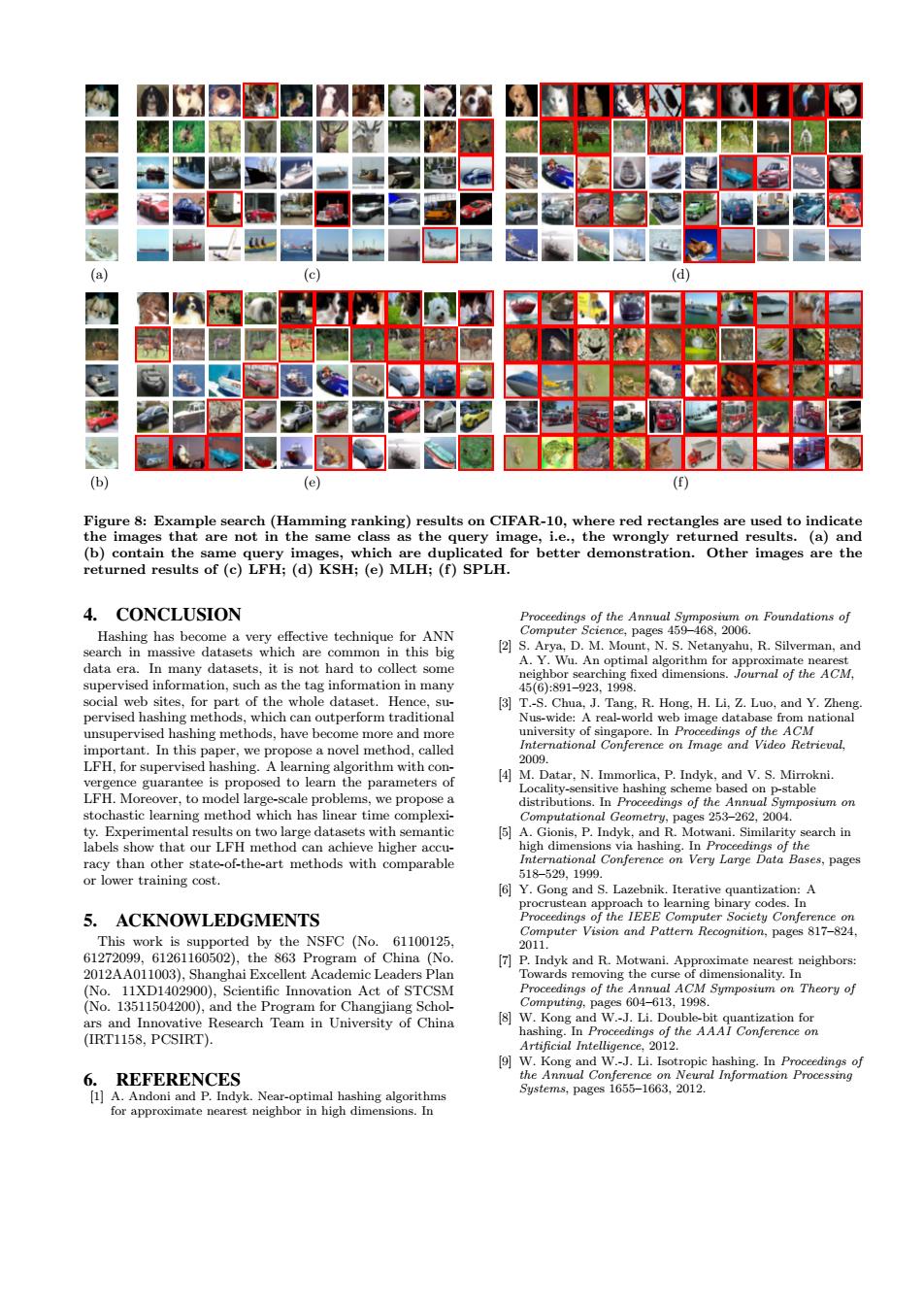

(b) (e) (f) Figure 8:Example search(Hamming ranking)results on CIFAR-10,where red rectangles are used to indicate the images that are not in the same class as the query image,i.e.,the wrongly returned results.(a)and (b)contain the same query images,which are duplicated for better demonstration.Other images are the returned results of(c)LFH;(d)KSH;(e)MLH;(f)SPLH. 4.CONCLUSION Proceedings of the Annual Sumposium on Foundations of Hashing has become a very effective technique for ANN Computer Science,pages 459-468,2006. search in massive datasets which are common in this big [2]S.Arya,D.M.Mount,N.S.Netanyahu,R.Silverman,and A.Y.Wu.An optimal algorithm for approximate nearest data era.In many datasets,it is not hard to collect some neighbor searching fixed dimensions.Journal of the ACM. supervised information,such as the tag information in many 45(6):891-923.1998. social web sites,for part of the whole dataset.Hence,su- 3 T.-S.Chua,J.Tang,R.Hong,H.Li,Z.Luo,and Y.Zheng. pervised hashing methods,which can outperform traditional Nus-wide:A real-world web image database from national unsupervised hashing methods,have become more and more university of singapore.In Proceedings of the ACM important.In this paper,we propose a novel method,called International Conference on Image and Video Retrieval 2009 LFH,for supervised hashing.A learning algorithm with con- vergence guarantee is proposed to learn the parameters of [4 M.Datar,N.Immorlica,P.Indyk,and V.S.Mirrokni Locality-sensitive hashing scheme based on p-stable LFH.Moreover,to model large-scale problems,we propose a distributions.In Proceedings of the Annual Symposium on stochastic learning method which has linear time complexi- Computational Geometry,pages 253-262,2004. ty.Experimental results on two large datasets with semantic [5]A.Gionis,P.Indyk,and R.Motwani.Similarity search in labels show that our LFH method can achieve higher accu- high dimensions via hashing.In Proceedings of the racy than other state-of-the-art methods with comparable International Conference on Very Large Data Bases,pages 518-529,1999. or lower training cost 6 Y.Gong and S.Lazebnik.Iterative quantization:A procrustean approach to learning binary codes.In 5.ACKNOWLEDGMENTS Proceedings of the IEEE Computer Society Conference on This work is supported by the NSFC (No.61100125 Computer Vision and Pattern Recognition,pages 817-824 2011 61272099,61261160502),the 863 Program of China (No. [7]P.Indyk and R.Motwani.Approximate nearest neighbors: 2012AA011003).Shanghai Excellent Academic Leaders Plan Towards removing the curse of dimensionality.In (No.11XD1402900),Scientific Innovation Act of STCSM Proceedings of the Annual ACM Symposium on Theory of (No.13511504200),and the Program for Changjiang Schol- Computing,pages 604-613,1998. ars and Innovative Research Team in University of China [8]W.Kong and W.-J.Li.Double-bit quantization for hashing.In Proceedings of the AAAI Conference on (IRT1158,PCSIRT). Artificial Intelligence,2012. 9]W.Kong and W.-J.Li.Isotropic hashing.In Proceedings of 6. REFERENCES the Annual Conference on Neural Information Processing [1]A.Andoni and P.Indyk.Near-optimal hashing algorithms Systems,pages 1655-1663,2012. for approximate nearest neighbor in high dimensions.In(a) (b) (c) (d) (e) (f) Figure 8: Example search (Hamming ranking) results on CIFAR-10, where red rectangles are used to indicate the images that are not in the same class as the query image, i.e., the wrongly returned results. (a) and (b) contain the same query images, which are duplicated for better demonstration. Other images are the returned results of (c) LFH; (d) KSH; (e) MLH; (f) SPLH. 4. CONCLUSION Hashing has become a very effective technique for ANN search in massive datasets which are common in this big data era. In many datasets, it is not hard to collect some supervised information, such as the tag information in many social web sites, for part of the whole dataset. Hence, supervised hashing methods, which can outperform traditional unsupervised hashing methods, have become more and more important. In this paper, we propose a novel method, called LFH, for supervised hashing. A learning algorithm with convergence guarantee is proposed to learn the parameters of LFH. Moreover, to model large-scale problems, we propose a stochastic learning method which has linear time complexity. Experimental results on two large datasets with semantic labels show that our LFH method can achieve higher accuracy than other state-of-the-art methods with comparable or lower training cost. 5. ACKNOWLEDGMENTS This work is supported by the NSFC (No. 61100125, 61272099, 61261160502), the 863 Program of China (No. 2012AA011003), Shanghai Excellent Academic Leaders Plan (No. 11XD1402900), Scientific Innovation Act of STCSM (No. 13511504200), and the Program for Changjiang Scholars and Innovative Research Team in University of China (IRT1158, PCSIRT). 6. REFERENCES [1] A. Andoni and P. Indyk. Near-optimal hashing algorithms for approximate nearest neighbor in high dimensions. In Proceedings of the Annual Symposium on Foundations of Computer Science, pages 459–468, 2006. [2] S. Arya, D. M. Mount, N. S. Netanyahu, R. Silverman, and A. Y. Wu. An optimal algorithm for approximate nearest neighbor searching fixed dimensions. Journal of the ACM, 45(6):891–923, 1998. [3] T.-S. Chua, J. Tang, R. Hong, H. Li, Z. Luo, and Y. Zheng. Nus-wide: A real-world web image database from national university of singapore. In Proceedings of the ACM International Conference on Image and Video Retrieval, 2009. [4] M. Datar, N. Immorlica, P. Indyk, and V. S. Mirrokni. Locality-sensitive hashing scheme based on p-stable distributions. In Proceedings of the Annual Symposium on Computational Geometry, pages 253–262, 2004. [5] A. Gionis, P. Indyk, and R. Motwani. Similarity search in high dimensions via hashing. In Proceedings of the International Conference on Very Large Data Bases, pages 518–529, 1999. [6] Y. Gong and S. Lazebnik. Iterative quantization: A procrustean approach to learning binary codes. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pages 817–824, 2011. [7] P. Indyk and R. Motwani. Approximate nearest neighbors: Towards removing the curse of dimensionality. In Proceedings of the Annual ACM Symposium on Theory of Computing, pages 604–613, 1998. [8] W. Kong and W.-J. Li. Double-bit quantization for hashing. In Proceedings of the AAAI Conference on Artificial Intelligence, 2012. [9] W. Kong and W.-J. Li. Isotropic hashing. In Proceedings of the Annual Conference on Neural Information Processing Systems, pages 1655–1663, 2012