正在加载图片...

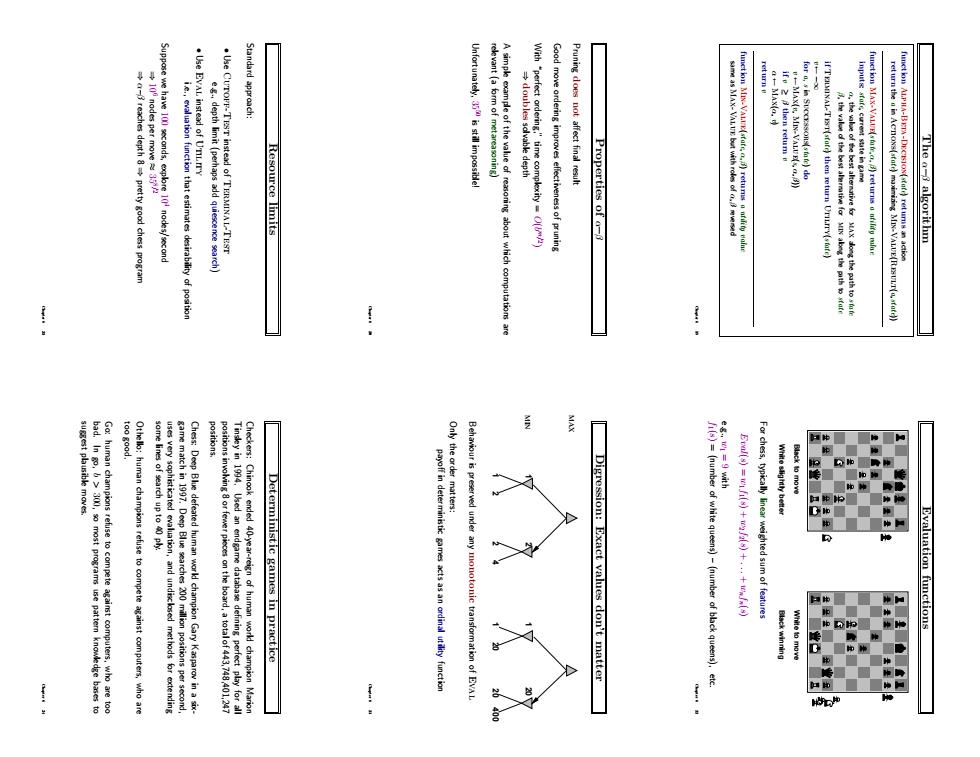

reaches depth 8 pretty good chess program 4欧 Suppose we have 10 seconds,explre 1 nodes/second Standard approach: ie.evaluation functin that estimates desirability of position .Use EVAL instead of UTLIrY e.g..depth limit (perhaps add quiescence search) Use CUTOFF-TEST instead of TERMINAL-TEST Unfortunately,is still impossiblel relevant (a form of metareasoning) Resource limits A simple example of the value of reasoning about which computations are With "perfect ordering."time complexity() Good move ordering improves effectiveness of pruning Pruning does not affect final result ife 2 then retum e -MAX(B.MIN-VALUEs.0.B)) for a,s in te)do Properties of o- if TEsnXAL-TET(sfatd then return UriLrry(state) inpiits afur6 curet stite in gamd funct ion MAX-VALUEsfufr,o.B)returns a nfifify mfue return the a in AcnONs(sfute)maximinng MIX-VALUE(RESULT a,sfate)) The a-algorithm MAX suggest plausible moves. 1008994. positions. bad.Ing.>300.so most programs use pattern knowledge bases to Go:human champions refuse to compete against computers.who are too Othello:human champions refuse to compete against computers,who are some lines of search up to 40 ply. uses very sophisticated evaluation,and undisclsed methods for extending game match in 1997.Deep Blue searches 200 million positions per second Chess:Deep Blue defeated human workd champion Gary Kasparov in a six- positions involingorfewer piooes on the board,a totalof 443,74801,247 Tinsley in 1994.Used an endgame database defining perfect play for all Checkers:Chinook ended 40-year-reign of human world champion Marion Deterministic games in practice payoff in deter ministicgamesctsasan ordinal utlity function Behaviour is preserved under any monotonic transformation of EVAL Digression:Exact values don't matter (s)=(number of white queens)-(number of black queens),etc. 初是E总十月息十:十惠 For chess,typically linear weighted sum of features Black to move Evaluation fuctions Black winning White to move 市市 #睡的The α–β algorithm function Alpha-Beta-Decision(state) returns an action return the a in Actions(state) maximizing Min-Value(Result(a,state)) function Max-Value(state,α, β) returns a utility value inputs: state, current state in game α, the value of the best alternative for max along the path to state β, the value of the best alternative for min along the path to state if Terminal-Test(state) then return Utility(state) v ← −∞ for a, s in Successors(state) do v ← Max(v, Min-Value(s,α, β)) if v ≥ β then return v α ← Max(α, v) return v function Min-Value(state,α, β) returns a utility value same as Max-Value but with roles of α, β reversed Chapter 6 19 Properties of α–β Pruning does not affect final result Good move ordering improves effectiveness of pruning With “perfect ordering,” time complexity = O(b m/2 ) ⇒ doubles solvable depth A simple example of the value of reasoning about which computations are relevant (a form of metareasoning) Unfortunately, 35 50 is still impossible! Chapter 6 20 Resource limits Standard approach: • Use Cutoff-Test instead of Terminal-Test e.g., depth limit (perhaps add quiescence search) • Use Eval instead of Utility i.e., evaluation function that estimates desirability of position Suppose we have 100 seconds, explore 10 4 nodes/second ⇒ 10 6 nodes per move ≈ 35 8/2 ⇒ α–β reaches depth 8 ⇒ pretty good chess program Chapter 6 21 Evaluation functions White slightly better Black to move Black winning White to move For chess, typically linear weighted sum of features Eval(s) = w1f1(s) + w2f2(s) + . . . + wnfn(s) e.g., w1 = 9 with f1(s) = (number of white queens) – (number of black queens), etc. Chapter 6 22 Digression: Exact values don’t matter MIN MAX 2 1 1 4 2 2 20 1 1 400 20 20 Behaviour is preserved under any monotonic transformation of Eval Only the order matters: payoff in deterministic games acts as an ordinal utility function Chapter 6 23 Deterministic games in practice Checkers: Chinook ended 40-year-reign of human world champion Marion Tinsley in 1994. Used an endgame database defining perfect play for all positions involving 8 or fewer pieces on the board, a total of 443,748,401,247 positions. Chess: Deep Blue defeated human world champion Gary Kasparov in a sixgame match in 1997. Deep Blue searches 200 million positions per second, uses very sophisticated evaluation, and undisclosed methods for extending some lines of search up to 40 ply. Othello: human champions refuse to compete against computers, who are too good. Go: human champions refuse to compete against computers, who are too bad. In go, b > 300, so most programs use pattern knowledge bases to suggest plausible moves. Chapter 6 24