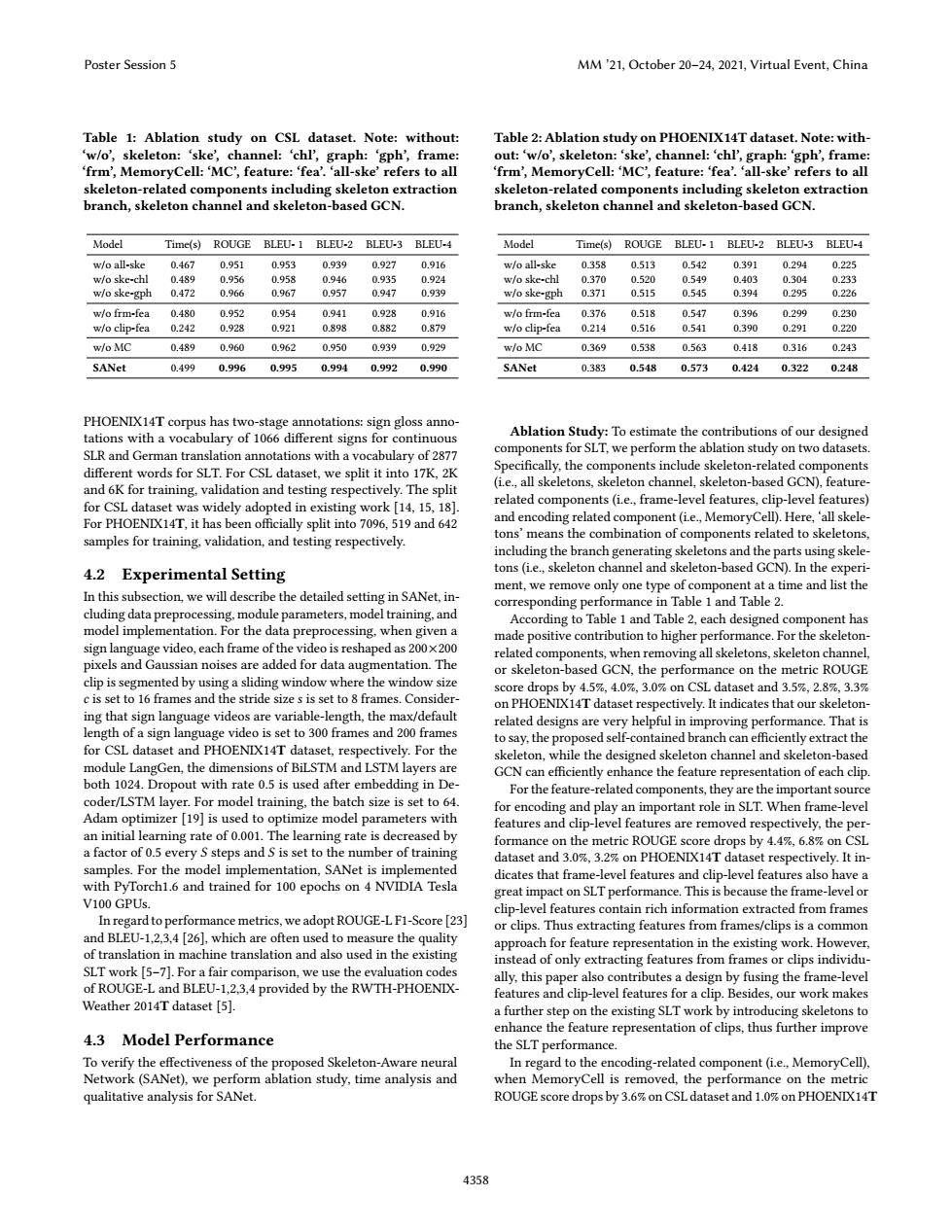

Poster Session 5 MM ’21, October 20–24, 2021, Virtual Event, China
Table 1: Ablation study on CSL dataset. Note: without: ‘w/o’, skeleton: ‘ske’, channel: ‘chl’, graph: ‘gph’, frame: ‘frm’, MemoryCell: ‘MC’, feature: ‘fea’. ‘all-ske’ refers to all skeleton-related components including skeleton extraction branch, skeleton channel and skeleton-based GCN.

| Model | Time (s) | ROUGE | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 |
|---|---|---|---|---|---|---|
| w/o all-ske | 0.467 | 0.951 | 0.953 | 0.939 | 0.927 | 0.916 |
| w/o ske-chl | 0.489 | 0.956 | 0.958 | 0.946 | 0.935 | 0.924 |
| w/o ske-gph | 0.472 | 0.966 | 0.967 | 0.957 | 0.947 | 0.939 |
| w/o frm-fea | 0.480 | 0.952 | 0.954 | 0.941 | 0.928 | 0.916 |
| w/o clip-fea | 0.242 | 0.928 | 0.921 | 0.898 | 0.882 | 0.879 |
| w/o MC | 0.489 | 0.960 | 0.962 | 0.950 | 0.939 | 0.929 |
| SANet | 0.499 | 0.996 | 0.995 | 0.994 | 0.992 | 0.990 |

PHOENIX14T corpus has two-stage annotations: sign gloss annotations with a vocabulary of 1066 different signs for continuous SLR and German translation annotations with a vocabulary of 2877 different words for SLT. For CSL dataset, we split it into 17K, 2K and 6K for training, validation and testing respectively. The split for CSL dataset was widely adopted in existing work [14, 15, 18]. For PHOENIX14T, it has been officially split into 7096, 519 and 642 samples for training, validation, and testing respectively.

4.2 Experimental Setting

In this subsection, we will describe the detailed setting in SANet, including data preprocessing, module parameters, model training, and model implementation.
For data preprocessing, each frame of a given sign language video is resized to 200×200 pixels, and Gaussian noise is added for data augmentation. Clips are segmented with a sliding window, where the window size c is set to 16 frames and the stride s is set to 8 frames. Since sign language videos vary in length, the max/default length of a video is set to 300 frames for the CSL dataset and 200 frames for the PHOENIX14T dataset. For the module LangGen, the dimensions of the BiLSTM and LSTM layers are both 1024. Dropout with rate 0.5 is used after the embedding in the Decoder/LSTM layer. For model training, the batch size is set to 64. The Adam optimizer [19] is used to optimize model parameters with an initial learning rate of 0.001. The learning rate is decreased by a factor of 0.5 every S steps, where S is set to the number of training samples. For the model implementation, SANet is implemented with PyTorch 1.6 and trained for 100 epochs on 4 NVIDIA Tesla V100 GPUs.

For performance metrics, we adopt ROUGE-L F1-Score [23] and BLEU-1,2,3,4 [26], which are often used to measure translation quality in machine translation and are also used in existing SLT work [5–7]. For a fair comparison, we use the evaluation code for ROUGE-L and BLEU-1,2,3,4 provided by the RWTH-PHOENIX-Weather 2014T dataset [5].

4.3 Model Performance

To verify the effectiveness of the proposed Skeleton-Aware neural Network (SANet), we perform an ablation study, a time analysis and a qualitative analysis for SANet.

Table 2: Ablation study on PHOENIX14T dataset. Note: without: ‘w/o’, skeleton: ‘ske’, channel: ‘chl’, graph: ‘gph’, frame: ‘frm’, MemoryCell: ‘MC’, feature: ‘fea’. ‘all-ske’ refers to all skeleton-related components including skeleton extraction branch, skeleton channel and skeleton-based GCN.

| Model | Time (s) | ROUGE | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 |
|---|---|---|---|---|---|---|
| w/o all-ske | 0.358 | 0.513 | 0.542 | 0.391 | 0.294 | 0.225 |
| w/o ske-chl | 0.370 | 0.520 | 0.549 | 0.403 | 0.304 | 0.233 |
| w/o ske-gph | 0.371 | 0.515 | 0.545 | 0.394 | 0.295 | 0.226 |
| w/o frm-fea | 0.376 | 0.518 | 0.547 | 0.396 | 0.299 | 0.230 |
| w/o clip-fea | 0.214 | 0.516 | 0.541 | 0.390 | 0.291 | 0.220 |
| w/o MC | 0.369 | 0.538 | 0.563 | 0.418 | 0.316 | 0.243 |
| SANet | 0.383 | 0.548 | 0.573 | 0.424 | 0.322 | 0.248 |

Ablation Study: To estimate the contributions of our designed components for SLT, we perform the ablation study on two datasets. Specifically, the components include skeleton-related components (i.e., all skeletons, skeleton channel, skeleton-based GCN), feature-related components (i.e., frame-level features, clip-level features) and the encoding-related component (i.e., MemoryCell). Here, ‘all skeletons’ means the combination of components related to skeletons, including the branch generating skeletons and the parts using skeletons (i.e., skeleton channel and skeleton-based GCN). In the experiment, we remove only one type of component at a time and list the corresponding performance in Table 1 and Table 2.

According to Table 1 and Table 2, each designed component makes a positive contribution to performance. For the skeleton-related components, when removing all skeletons, the skeleton channel, or the skeleton-based GCN, the ROUGE score drops by 4.5%, 4.0% and 3.0% on the CSL dataset and by 3.5%, 2.8% and 3.3% on the PHOENIX14T dataset, respectively (absolute differences from the full SANet in Table 1 and Table 2). This indicates that our skeleton-related designs are very helpful in improving performance. That is to say, the proposed self-contained branch can efficiently extract the skeleton, while the designed skeleton channel and skeleton-based GCN can efficiently enhance the feature representation of each clip.

The feature-related components are an important source for encoding and play an important role in SLT.
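The ROUGE drops quoted in this ablation discussion can be recomputed from Table 1 and Table 2 as absolute differences from the full SANet, expressed in percentage points. The following is a minimal sketch: the ROUGE values are copied from the two tables, while the names `ROUGE` and `rouge_drops` are illustrative and do not come from the paper.

```python
# ROUGE scores copied from Table 1 (CSL) and Table 2 (PHOENIX14T).
ROUGE = {
    "CSL": {
        "w/o all-ske": 0.951,
        "w/o ske-chl": 0.956,
        "w/o ske-gph": 0.966,
        "w/o frm-fea": 0.952,
        "w/o clip-fea": 0.928,
        "w/o MC": 0.960,
        "SANet": 0.996,
    },
    "PHOENIX14T": {
        "w/o all-ske": 0.513,
        "w/o ske-chl": 0.520,
        "w/o ske-gph": 0.515,
        "w/o frm-fea": 0.518,
        "w/o clip-fea": 0.516,
        "w/o MC": 0.538,
        "SANet": 0.548,
    },
}


def rouge_drops(scores, full_model="SANet"):
    """Absolute ROUGE drop (in percentage points) of each ablated variant
    relative to the full model, rounded to one decimal place."""
    full = scores[full_model]
    return {
        name: round((full - value) * 100, 1)
        for name, value in scores.items()
        if name != full_model
    }


if __name__ == "__main__":
    for dataset, scores in ROUGE.items():
        print(dataset, rouge_drops(scores))
```

Computed this way, the drops match the percentages stated in the text, which suggests they are absolute score differences and not relative changes.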
When frame-level features and clip-level features are removed, the ROUGE score drops by 4.4% and 6.8% on the CSL dataset and by 3.0% and 3.2% on the PHOENIX14T dataset, respectively. This indicates that frame-level features and clip-level features also have a great impact on SLT performance, because they contain rich information extracted from frames or clips. Extracting features from frames or clips is therefore a common approach to feature representation in existing work. However, instead of only extracting features from frames or clips individually, this paper also contributes a design that fuses the frame-level features and clip-level features for a clip. Besides, our work goes a step beyond existing SLT work by introducing skeletons to enhance the feature representation of clips, thus further improving SLT performance.

In regard to the encoding-related component (i.e., MemoryCell), when MemoryCell is removed, the ROUGE score drops by 3.6% on the CSL dataset and 1.0% on PHOENIX14T