正在加载图片...

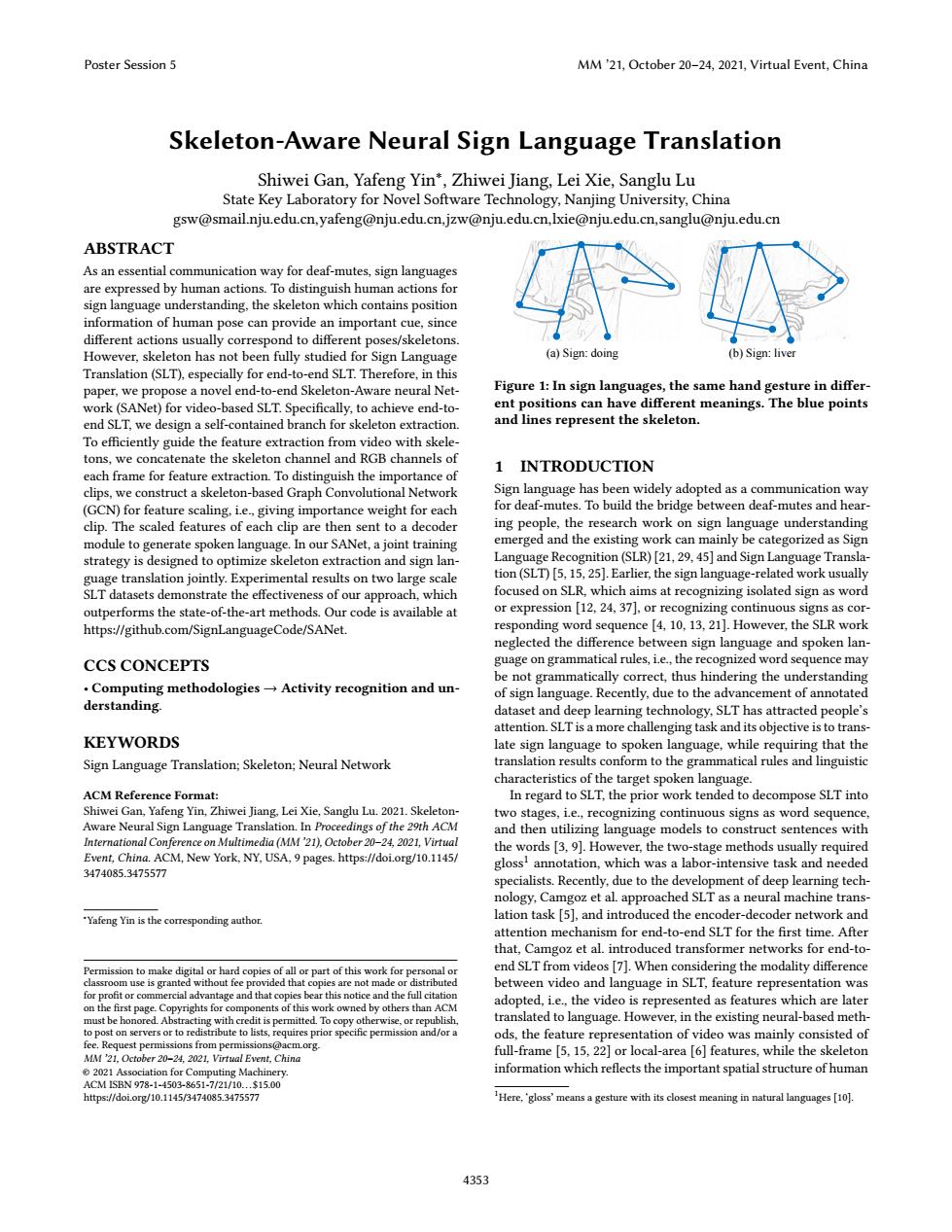

Poster Session 5 MM'21,October 20-24,2021,Virtual Event,China Skeleton-Aware Neural Sign Language Translation Shiwei Gan,Yafeng Yin*,Zhiwei Jiang,Lei Xie,Sanglu Lu State Key Laboratory for Novel Software Technology,Nanjing University,China gsw@smail.nju.edu.cn.yafeng@nju.edu.cn.jzw@nju.edu.cn,lxie@nju.edu.cn,sanglu@nju.edu.cn ABSTRACT As an essential communication way for deaf-mutes,sign languages are expressed by human actions.To distinguish human actions for sign language understanding.the skeleton which contains position information of human pose can provide an important cue,since different actions usually correspond to different poses/skeletons. However,skeleton has not been fully studied for Sign Language (a)Sign:doing (b)Sign:liver Translation (SLT),especially for end-to-end SLT.Therefore,in this paper,we propose a novel end-to-end Skeleton-Aware neural Net- Figure 1:In sign languages,the same hand gesture in differ- work(SANet)for video-based SLT.Specifically,to achieve end-to- ent positions can have different meanings.The blue points end SLT,we design a self-contained branch for skeleton extraction. and lines represent the skeleton. To efficiently guide the feature extraction from video with skele- tons,we concatenate the skeleton channel and RGB channels of 1 INTRODUCTION each frame for feature extraction.To distinguish the importance of clips,we construct a skeleton-based Graph Convolutional Network Sign language has been widely adopted as a communication way (GCN)for feature scaling.ie..giving importance weight for each for deaf-mutes.To build the bridge between deaf-mutes and hear- clip.The scaled features of each clip are then sent to a decoder ing people,the research work on sign language understanding module to generate spoken language.In our SANet,a joint training emerged and the existing work can mainly be categorized as Sign strategy is designed to optimize skeleton extraction and sign lan- Language Recognition(SLR)[21,29,45]and Sign Language Transla- guage translation jointly.Experimental results on two large scale tion(SLT)[5,15,25].Earlier,the sign language-related work usually SLT datasets demonstrate the effectiveness of our approach,which focused on SLR,which aims at recognizing isolated sign as word outperforms the state-of-the-art methods.Our code is available at or expression [12,24,37],or recognizing continuous signs as cor- https://github.com/SignLanguageCode/SANet. responding word sequence [4,10,13,21].However,the SLR work neglected the difference between sign language and spoken lan- CCS CONCEPTS guage on grammatical rules,i.e.,the recognized word sequence may be not grammatically correct,thus hindering the understanding Computing methodologies-Activity recognition and un- of sign language.Recently,due to the advancement of annotated derstanding. dataset and deep learning technology.SLT has attracted people's attention.SLT is a more challenging task and its objective is to trans- KEYWORDS late sign language to spoken language,while requiring that the Sign Language Translation;Skeleton;Neural Network translation results conform to the grammatical rules and linguistic characteristics of the target spoken language. ACM Reference Format: In regard to SLT,the prior work tended to decompose SLT into Shiwei Gan,Yafeng Yin,Zhiwei Jiang,Lei Xie,Sanglu Lu.2021.Skeleton- two stages,i.e.,recognizing continuous signs as word sequence Aware Neural Sign Language Translation.In Proceedings of the 29th ACM and then utilizing language models to construct sentences with International Conference on Multimedia(MM'21).October 20-24,2021.Virtual the words [3,9].However,the two-stage methods usually required Event,China.ACM,New York,NY,USA,9 pages.https://doi.org/10.1145/ gloss!annotation,which was a labor-intensive task and needed 3474085.3475577 specialists.Recently,due to the development of deep learning tech- nology,Camgoz et al.approached SLT as a neural machine trans- Yafeng Yin is the corresponding author. lation task [5],and introduced the encoder-decoder network and attention mechanism for end-to-end SLT for the first time.After that,Camgoz et al.introduced transformer networks for end-to- Permission to make digital or hard copies of all or part of this work for personal or end SLT from videos [7].When considering the modality difference classroom use is granted without fee p ovided that copies are not made or distributed between video and language in SLT,feature representation was for profit or commercial advantage and that copies bear this notice and the full citation on the first page.Copyrights for components of this work owned by others than ACM adopted,i.e.,the video is represented as features which are later must be honored.Abstracting with credit is permitted.To copy otherwise,or republish translated to language.However,in the existing neural-based meth- to post on servers or to redistribute to lists,requires prior specific permission and/or a ods,the feature representation of video was mainly consisted of fee.Request permissions from permissions@acm.org. MM'21,October 20-24,2021,Virtual Event,China full-frame [5,15,22]or local-area [6]features,while the skeleton 2021 Association for Computing Machinery. information which reflects the important spatial structure of human ACM1SBN978-1-4503-8651-7/21/10..$15.00 https:/doi.org/10.1145/3474085.3475577 Here,'gloss'means a gesture with its closest meaning in natural languages [101. 4353Skeleton-Aware Neural Sign Language Translation Shiwei Gan, Yafeng Yin∗ , Zhiwei Jiang, Lei Xie, Sanglu Lu State Key Laboratory for Novel Software Technology, Nanjing University, China gsw@smail.nju.edu.cn,yafeng@nju.edu.cn,jzw@nju.edu.cn,lxie@nju.edu.cn,sanglu@nju.edu.cn ABSTRACT As an essential communication way for deaf-mutes, sign languages are expressed by human actions. To distinguish human actions for sign language understanding, the skeleton which contains position information of human pose can provide an important cue, since different actions usually correspond to different poses/skeletons. However, skeleton has not been fully studied for Sign Language Translation (SLT), especially for end-to-end SLT. Therefore, in this paper, we propose a novel end-to-end Skeleton-Aware neural Network (SANet) for video-based SLT. Specifically, to achieve end-toend SLT, we design a self-contained branch for skeleton extraction. To efficiently guide the feature extraction from video with skeletons, we concatenate the skeleton channel and RGB channels of each frame for feature extraction. To distinguish the importance of clips, we construct a skeleton-based Graph Convolutional Network (GCN) for feature scaling, i.e., giving importance weight for each clip. The scaled features of each clip are then sent to a decoder module to generate spoken language. In our SANet, a joint training strategy is designed to optimize skeleton extraction and sign language translation jointly. Experimental results on two large scale SLT datasets demonstrate the effectiveness of our approach, which outperforms the state-of-the-art methods. Our code is available at https://github.com/SignLanguageCode/SANet. CCS CONCEPTS • Computing methodologies → Activity recognition and understanding. KEYWORDS Sign Language Translation; Skeleton; Neural Network ACM Reference Format: Shiwei Gan, Yafeng Yin, Zhiwei Jiang, Lei Xie, Sanglu Lu. 2021. SkeletonAware Neural Sign Language Translation. In Proceedings of the 29th ACM International Conference on Multimedia (MM ’21), October 20–24, 2021, Virtual Event, China. ACM, New York, NY, USA, 9 pages. https://doi.org/10.1145/ 3474085.3475577 ∗Yafeng Yin is the corresponding author. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from permissions@acm.org. MM ’21, October 20–24, 2021, Virtual Event, China © 2021 Association for Computing Machinery. ACM ISBN 978-1-4503-8651-7/21/10. . . $15.00 https://doi.org/10.1145/3474085.3475577 (a) Sign: doing (b) Sign: liver Figure 1: In sign languages, the same hand gesture in different positions can have different meanings. The blue points and lines represent the skeleton. 1 INTRODUCTION Sign language has been widely adopted as a communication way for deaf-mutes. To build the bridge between deaf-mutes and hearing people, the research work on sign language understanding emerged and the existing work can mainly be categorized as Sign Language Recognition (SLR) [21, 29, 45] and Sign Language Translation (SLT) [5, 15, 25]. Earlier, the sign language-related work usually focused on SLR, which aims at recognizing isolated sign as word or expression [12, 24, 37], or recognizing continuous signs as corresponding word sequence [4, 10, 13, 21]. However, the SLR work neglected the difference between sign language and spoken language on grammatical rules, i.e., the recognized word sequence may be not grammatically correct, thus hindering the understanding of sign language. Recently, due to the advancement of annotated dataset and deep learning technology, SLT has attracted people’s attention. SLT is a more challenging task and its objective is to translate sign language to spoken language, while requiring that the translation results conform to the grammatical rules and linguistic characteristics of the target spoken language. In regard to SLT, the prior work tended to decompose SLT into two stages, i.e., recognizing continuous signs as word sequence, and then utilizing language models to construct sentences with the words [3, 9]. However, the two-stage methods usually required gloss1 annotation, which was a labor-intensive task and needed specialists. Recently, due to the development of deep learning technology, Camgoz et al. approached SLT as a neural machine translation task [5], and introduced the encoder-decoder network and attention mechanism for end-to-end SLT for the first time. After that, Camgoz et al. introduced transformer networks for end-toend SLT from videos [7]. When considering the modality difference between video and language in SLT, feature representation was adopted, i.e., the video is represented as features which are later translated to language. However, in the existing neural-based methods, the feature representation of video was mainly consisted of full-frame [5, 15, 22] or local-area [6] features, while the skeleton information which reflects the important spatial structure of human 1Here, ‘gloss’ means a gesture with its closest meaning in natural languages [10]. Poster Session 5 MM ’21, October 20–24, 2021, Virtual Event, China 4353