正在加载图片...

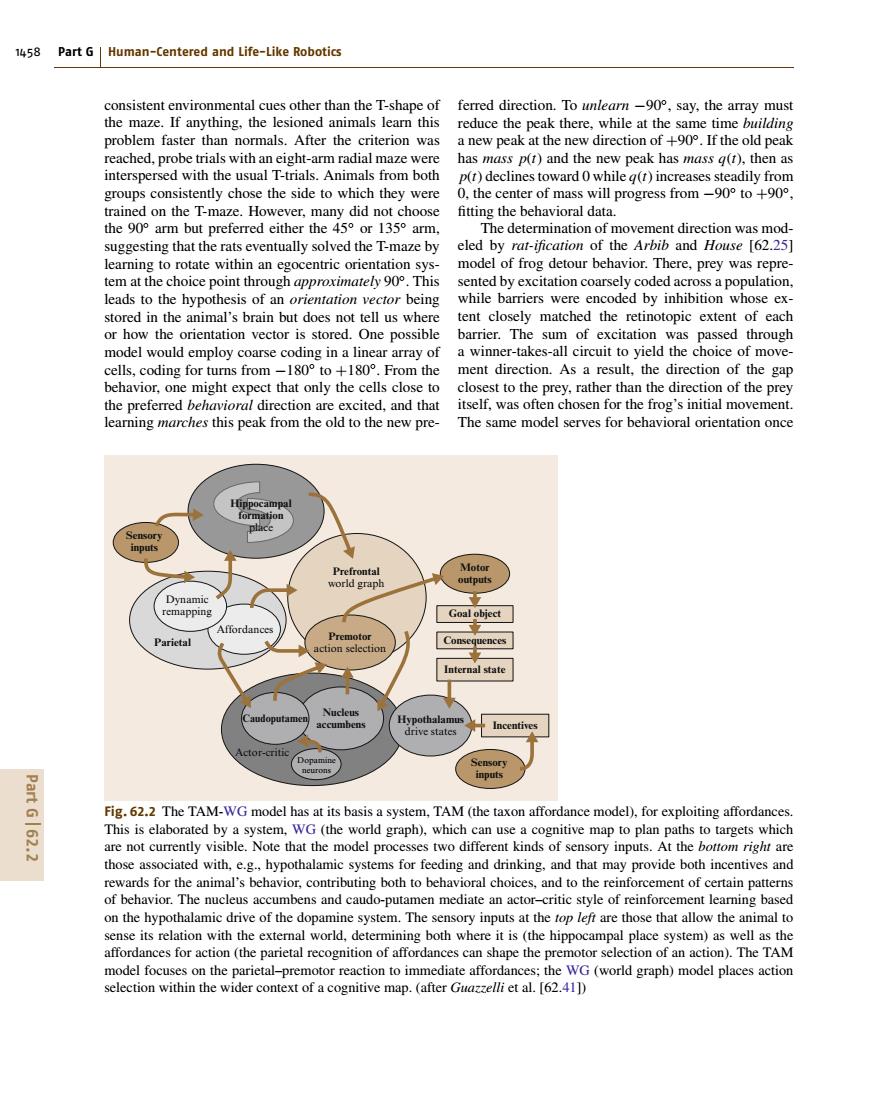

1458 Part G Human-Centered and Life-Like Robotics consistent environmental cues other than the T-shape of ferred direction.To unlearn-90,say,the array must the maze.If anything,the lesioned animals learn this reduce the peak there,while at the same time building problem faster than normals.After the criterion was a new peak at the new direction of+90.If the old peak reached,probe trials with an eight-arm radial maze were has mass p(t)and the new peak has mass g(t),then as interspersed with the usual T-trials.Animals from both p(t)declines toward 0 while g(t)increases steadily from groups consistently chose the side to which they were 0,the center of mass will progress from-90°to+90°, trained on the T-maze.However,many did not choose fitting the behavioral data. the 90 arm but preferred either the 45 or 135 arm, The determination of movement direction was mod- suggesting that the rats eventually solved the T-maze by eled by rat-ification of the Arbib and House [62.25] learning to rotate within an egocentric orientation sys-model of frog detour behavior.There,prey was repre- tem at the choice point through approximately 90.This sented by excitation coarsely coded across a population. leads to the hypothesis of an orientation vector being while barriers were encoded by inhibition whose ex- stored in the animal's brain but does not tell us where tent closely matched the retinotopic extent of each or how the orientation vector is stored.One possible barrier.The sum of excitation was passed through model would employ coarse coding in a linear array of a winner-takes-all circuit to yield the choice of move- cells,coding for turns from-180 to +180.From the ment direction.As a result,the direction of the gap behavior,one might expect that only the cells close to closest to the prey,rather than the direction of the prey the preferred behavioral direction are excited,and that itself,was often chosen for the frog's initial movement. learning marches this peak from the old to the new pre- The same model serves for behavioral orientation once Hippocampal formation place Sensory inputs Prefrontal Motor world graph outputs Dynamic remapping Goal object Affordances Parietal Premotor Consequences action selection Internal state Caudoputamen Nucleus accumbens Hypothalamu Incentives drive states Actor-critic Sensory inputs Part G162.2 Fig.62.2 The TAM-WG model has at its basis a system,TAM(the taxon affordance model),for exploiting affordances. This is elaborated by a system,WG(the world graph),which can use a cognitive map to plan paths to targets which are not currently visible.Note that the model processes two different kinds of sensory inputs.At the bottom right are those associated with,e.g.,hypothalamic systems for feeding and drinking,and that may provide both incentives and rewards for the animal's behavior,contributing both to behavioral choices.and to the reinforcement of certain patterns of behavior.The nucleus accumbens and caudo-putamen mediate an actor-critic style of reinforcement learning based on the hypothalamic drive of the dopamine system.The sensory inputs at the top left are those that allow the animal to sense its relation with the external world,determining both where it is(the hippocampal place system)as well as the affordances for action (the parietal recognition of affordances can shape the premotor selection of an action).The TAM model focuses on the parietal-premotor reaction to immediate affordances;the WG(world graph)model places action selection within the wider context of a cognitive map.(after Guazzelli et al.[62.41])1458 Part G Human-Centered and Life-Like Robotics consistent environmental cues other than the T-shape of the maze. If anything, the lesioned animals learn this problem faster than normals. After the criterion was reached, probe trials with an eight-arm radial maze were interspersed with the usual T-trials. Animals from both groups consistently chose the side to which they were trained on the T-maze. However, many did not choose the 90◦ arm but preferred either the 45◦ or 135◦ arm, suggesting that the rats eventually solved the T-maze by learning to rotate within an egocentric orientation system at the choice point through approximately 90◦. This leads to the hypothesis of an orientation vector being stored in the animal’s brain but does not tell us where or how the orientation vector is stored. One possible model would employ coarse coding in a linear array of cells, coding for turns from −180◦ to +180◦. From the behavior, one might expect that only the cells close to the preferred behavioral direction are excited, and that learning marches this peak from the old to the new preHippocampal formation place Hypothalamus drive states Prefrontal world graph Premotor action selection Affordances Actor-critic Dopamine neurons Parietal Caudoputamen Nucleus accumbens Sensory inputs Sensory inputs Motor outputs Goal object Consequences Internal state Incentives Dynamic remapping Fig. 62.2 The TAM-WG model has at its basis a system, TAM (the taxon affordance model), for exploiting affordances. This is elaborated by a system, WG (the world graph), which can use a cognitive map to plan paths to targets which are not currently visible. Note that the model processes two different kinds of sensory inputs. At the bottom right are those associated with, e.g., hypothalamic systems for feeding and drinking, and that may provide both incentives and rewards for the animal’s behavior, contributing both to behavioral choices, and to the reinforcement of certain patterns of behavior. The nucleus accumbens and caudo-putamen mediate an actor–critic style of reinforcement learning based on the hypothalamic drive of the dopamine system. The sensory inputs at the top left are those that allow the animal to sense its relation with the external world, determining both where it is (the hippocampal place system) as well as the affordances for action (the parietal recognition of affordances can shape the premotor selection of an action). The TAM model focuses on the parietal–premotor reaction to immediate affordances; the WG (world graph) model places action selection within the wider context of a cognitive map. (after Guazzelli et al. [62.41]) ferred direction. To unlearn −90◦, say, the array must reduce the peak there, while at the same time building a new peak at the new direction of +90◦. If the old peak has mass p(t) and the new peak has mass q(t), then as p(t) declines toward 0 while q(t) increases steadily from 0, the center of mass will progress from −90◦ to +90◦, fitting the behavioral data. The determination of movement direction was modeled by rat-ification of the Arbib and House [62.25] model of frog detour behavior. There, prey was represented by excitation coarsely coded across a population, while barriers were encoded by inhibition whose extent closely matched the retinotopic extent of each barrier. The sum of excitation was passed through a winner-takes-all circuit to yield the choice of movement direction. As a result, the direction of the gap closest to the prey, rather than the direction of the prey itself, was often chosen for the frog’s initial movement. The same model serves for behavioral orientation once Part G 62.2