正在加载图片...

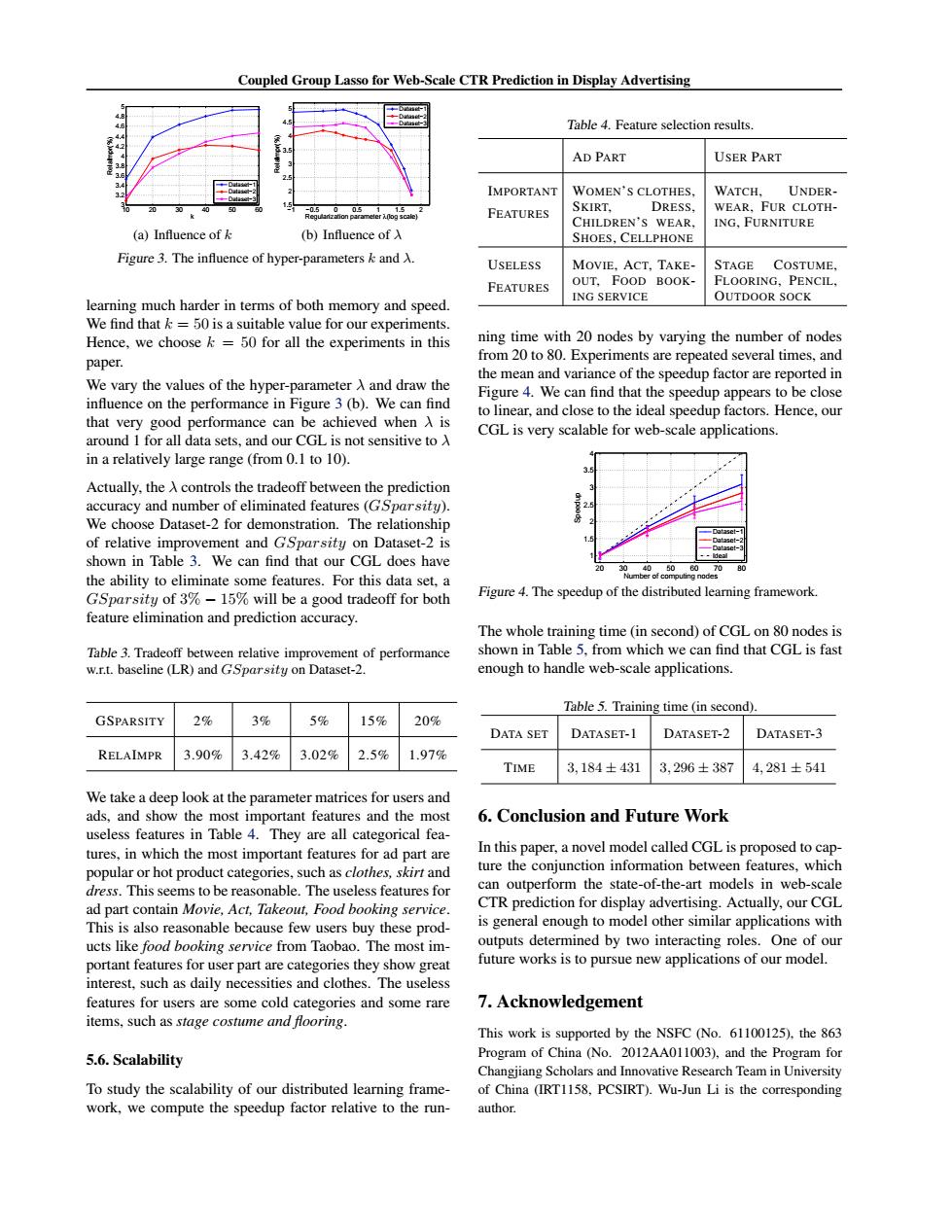

Coupled Group Lasso for Web-Scale CTR Prediction in Display Advertising Table 4.Feature selection results AD PART USER PART B.E IMPORTANT WOMEN'S CLOTHES WATCH UNDER- FEATURES SKIRT, DRESS WEAR,FUR CLOTH- CHILDREN'S WEAR ING,FURNITURE (a)Influence of k (b)Influence of入 SHOES.CELLPHONE Figure 3.The influence of hyper-parameters k and A. USELESS MOVIE,ACT,TAKE- STAGE COSTUME, FEATURES OUT.FOOD BOOK- FLOORING,PENCIL, ING SERVICE OUTDOOR SOCK learning much harder in terms of both memory and speed. We find that k =50 is a suitable value for our experiments Hence,we choose k =50 for all the experiments in this ning time with 20 nodes by varying the number of nodes paper. from 20 to 80.Experiments are repeated several times,and the mean and variance of the speedup factor are reported in We vary the values of the hyper-parameter A and draw the Figure 4.We can find that the speedup appears to be close influence on the performance in Figure 3(b).We can find to linear,and close to the ideal speedup factors.Hence,our that very good performance can be achieved when A is around 1 for all data sets,and our CGL is not sensitive to A CGL is very scalable for web-scale applications. in a relatively large range(from 0.1 to 10). Actually.the A controls the tradeoff between the prediction accuracy and number of eliminated features(GSparsity). We choose Dataset-2 for demonstration.The relationship of relative improvement and GSparsity on Dataset-2 is shown in Table 3.We can find that our CGL does have --deal the ability to eliminate some features.For this data set,a GSparsity of 3%-15%will be a good tradeoff for both Figure 4.The speedup of the distributed learning framework. feature elimination and prediction accuracy. The whole training time (in second)of CGL on 80 nodes is Table 3.Tradeoff between relative improvement of performance shown in Table 5,from which we can find that CGL is fast w.r.t.baseline (LR)and GSparsity on Dataset-2. enough to handle web-scale applications. Table 5.Training time (in second). GSPARSITY 2% 3% 5% 15% 20% DATA SET DATASET-1 DATASET-2 DATASET-3 RELAIMPR 3.90% 3.42% 3.02% 2.5% 1.97% TIME 3,184士431 3,296±387 4,281±541 We take a deep look at the parameter matrices for users and ads,and show the most important features and the most 6.Conclusion and Future Work useless features in Table 4.They are all categorical fea- tures,in which the most important features for ad part are In this paper,a novel model called CGL is proposed to cap- popular or hot product categories,such as clothes,skirt and ture the conjunction information between features,which dress.This seems to be reasonable.The useless features for can outperform the state-of-the-art models in web-scale ad part contain Movie,Act,Takeout,Food booking service. CTR prediction for display advertising.Actually,our CGL This is also reasonable because few users buy these prod- is general enough to model other similar applications with ucts like food booking service from Taobao.The most im- outputs determined by two interacting roles.One of our portant features for user part are categories they show great future works is to pursue new applications of our model. interest,such as daily necessities and clothes.The useless features for users are some cold categories and some rare 7.Acknowledgement items,such as stage costume and flooring. This work is supported by the NSFC (No.61100125),the 863 5.6.Scalability Program of China (No.2012AA011003),and the Program for Changjiang Scholars and Innovative Research Team in University To study the scalability of our distributed learning frame- of China (IRT1158,PCSIRT).Wu-Jun Li is the corresponding work,we compute the speedup factor relative to the run- author.Coupled Group Lasso for Web-Scale CTR Prediction in Display Advertising 10 20 30 40 50 60 3 3.2 3.4 3.6 3.8 4 4.2 4.4 4.6 4.8 5 k RelaImpr(%) Dataset−1 Dataset−2 Dataset−3 (a) Influence of k −1 −0.5 0 0.5 1 1.5 2 1.5 2 2.5 3 3.5 4 4.5 5 Regularization parameter λ(log scale) RelaImpr(%) Dataset−1 Dataset−2 Dataset−3 (b) Influence of λ Figure 3. The influence of hyper-parameters k and λ. learning much harder in terms of both memory and speed. We find that k = 50 is a suitable value for our experiments. Hence, we choose k = 50 for all the experiments in this paper. We vary the values of the hyper-parameter λ and draw the influence on the performance in Figure 3 (b). We can find that very good performance can be achieved when λ is around 1 for all data sets, and our CGL is not sensitive to λ in a relatively large range (from 0.1 to 10). Actually, the λ controls the tradeoff between the prediction accuracy and number of eliminated features (GSparsity). We choose Dataset-2 for demonstration. The relationship of relative improvement and GSparsity on Dataset-2 is shown in Table 3. We can find that our CGL does have the ability to eliminate some features. For this data set, a GSparsity of 3% − 15% will be a good tradeoff for both feature elimination and prediction accuracy. Table 3. Tradeoff between relative improvement of performance w.r.t. baseline (LR) and GSparsity on Dataset-2. GSPARSITY 2% 3% 5% 15% 20% RELAIMPR 3.90% 3.42% 3.02% 2.5% 1.97% We take a deep look at the parameter matrices for users and ads, and show the most important features and the most useless features in Table 4. They are all categorical features, in which the most important features for ad part are popular or hot product categories, such as clothes, skirt and dress. This seems to be reasonable. The useless features for ad part contain Movie, Act, Takeout, Food booking service. This is also reasonable because few users buy these products like food booking service from Taobao. The most important features for user part are categories they show great interest, such as daily necessities and clothes. The useless features for users are some cold categories and some rare items, such as stage costume and flooring. 5.6. Scalability To study the scalability of our distributed learning framework, we compute the speedup factor relative to the runTable 4. Feature selection results. AD PART USER PART IMPORTANT FEATURES WOMEN’S CLOTHES, SKIRT, DRESS, CHILDREN’S WEAR, SHOES, CELLPHONE WATCH, UNDERWEAR, FUR CLOTHING, FURNITURE USELESS FEATURES MOVIE, ACT, TAKEOUT, FOOD BOOKING SERVICE STAGE COSTUME, FLOORING, PENCIL, OUTDOOR SOCK ning time with 20 nodes by varying the number of nodes from 20 to 80. Experiments are repeated several times, and the mean and variance of the speedup factor are reported in Figure 4. We can find that the speedup appears to be close to linear, and close to the ideal speedup factors. Hence, our CGL is very scalable for web-scale applications. 20 30 40 50 60 70 80 1 1.5 2 2.5 3 3.5 4 Number of computing nodes Speedup Dataset−1 Dataset−2 Dataset−3 Ideal Figure 4. The speedup of the distributed learning framework. The whole training time (in second) of CGL on 80 nodes is shown in Table 5, from which we can find that CGL is fast enough to handle web-scale applications. Table 5. Training time (in second). DATA SET DATASET-1 DATASET-2 DATASET-3 TIME 3, 184 ± 431 3, 296 ± 387 4, 281 ± 541 6. Conclusion and Future Work In this paper, a novel model called CGL is proposed to capture the conjunction information between features, which can outperform the state-of-the-art models in web-scale CTR prediction for display advertising. Actually, our CGL is general enough to model other similar applications with outputs determined by two interacting roles. One of our future works is to pursue new applications of our model. 7. Acknowledgement This work is supported by the NSFC (No. 61100125), the 863 Program of China (No. 2012AA011003), and the Program for Changjiang Scholars and Innovative Research Team in University of China (IRT1158, PCSIRT). Wu-Jun Li is the corresponding author