正在加载图片...

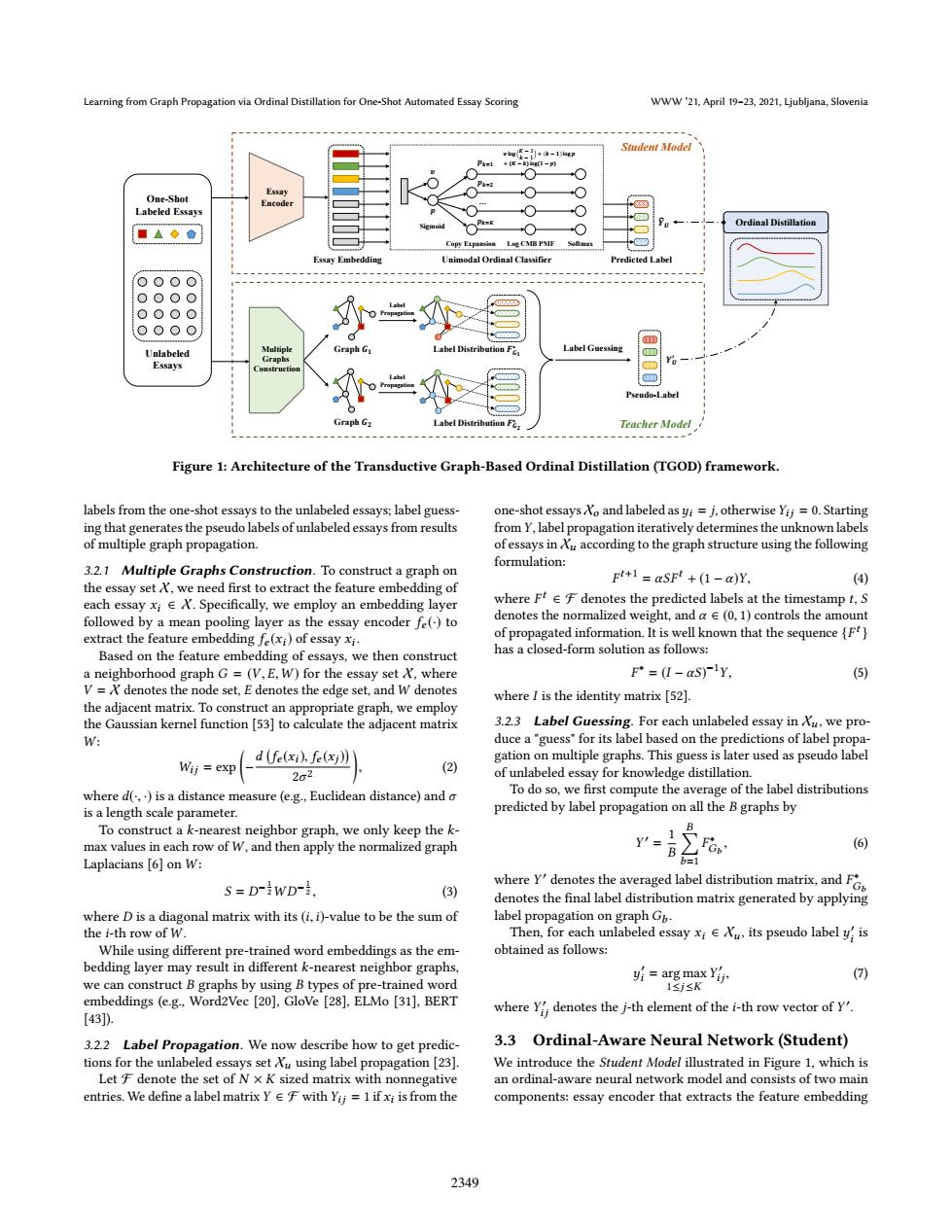

Learning from Graph Propagation via Ordinal Distillation for One-Shot Automated Essay Scoring WWW'21,April 19-23,2021,Ljubljana,Slovenia -+k-1h Student Model m-P Essay One-Shot Labeled Essays Ordinal Distillation ■△◆鱼 Leg CMB PMF Unimodal Ordinal Classifier edicted Label ooOO oOOO oOO 0000 D Unlabeled Multiple Graph Gi Label Guessing Graphs Essays Pseudo-Label Graph Gz Teacher Model Figure 1:Architecture of the Transductive Graph-Based Ordinal Distillation(TGOD)framework. labels from the one-shot essays to the unlabeled essays;label guess- one-shot essays No and labeled as yi j,otherwise Yii =0.Starting ing that generates the pseudo labels of unlabeled essays from results from Y,label propagation iteratively determines the unknown labels of multiple graph propagation. of essays in Au according to the graph structure using the following formulation: 3.2.1 Multiple Graphs Construction.To construct a graph on F+1=aSF+(1-a)Y, 4) the essay set A,we need first to extract the feature embedding of each essay xiE X.Specifically,we employ an embedding layer where Ft eFdenotes the predicted labels at the timestamp t,S followed by a mean pooling layer as the essay encoder fe()to denotes the normalized weight,and a e(0,1)controls the amount extract the feature embedding fe(xi)of essay xi. of propagated information.It is well known that the sequence(F) Based on the feature embedding of essays,we then construct has a closed-form solution as follows: a neighborhood graph G=(V,E,W)for the essay set X,where F=(I-aS)-1Y, (5) V=X denotes the node set,E denotes the edge set,and W denotes where I is the identity matrix [52] the adjacent matrix.To construct an appropriate graph,we employ the Gaussian kernel function [53]to calculate the adjacent matrix 3.23 Label Guessing.For each unlabeled essay in Xu,we pro- W: duce a"guess"for its label based on the predictions of label propa- gation on multiple graphs.This guess is later used as pseudo label Wij exp d (fe(xi).fe(xj)) 2o2 (2) of unlabeled essay for knowledge distillation where d(,)is a distance measure (e.g.,Euclidean distance)and o To do so,we first compute the average of the label distributions is a length scale parameter. predicted by label propagation on all the B graphs by To construct a k-nearest neighbor graph,we only keep the k- B Y'= 1 max values in each row of W,and then apply the normalized graph B」 (6) Laplacians [6]on W: S=D-WD-i. (3) where ydenotes the averaged label distribution matrixand denotes the final label distribution matrix generated by applying where D is a diagonal matrix with its (i,i)-value to be the sum of label propagation on graph G. the i-th row of W. Then,for each unlabeled essay xiXu,its pseudo labelyis While using different pre-trained word embeddings as the em- obtained as follows: bedding layer may result in different k-nearest neighbor graphs, () we can construct B graphs by using B types of pre-trained word yi=arg max Yij. 1≤j≤K embeddings (e.g,Word2Vec [20],GloVe [28],ELMo [31],BERT where Y denotes the j-th element of the i-th row vector of Y. [43]). 3.2.2 Label Propagation.We now describe how to get predic- 3.3 Ordinal-Aware Neural Network (Student) tions for the unlabeled essays set Xu using label propagation [23]. We introduce the Student Model illustrated in Figure 1,which is Let F denote the set of N x K sized matrix with nonnegative an ordinal-aware neural network model and consists of two main entries.We define a label matrix Ye F with Yij=1 if xi is from the components:essay encoder that extracts the feature embedding 2349Learning from Graph Propagation via Ordinal Distillation for One-Shot Automated Essay Scoring WWW ’21, April 19–23, 2021, Ljubljana, Slovenia Multiple Graphs Construction Essay Encoder Essay Embedding Predicted Label 𝒀𝑼 Ordinal Distillation Pseudo-Label Label Guessing 𝒀𝑼 ′ Unimodal Ordinal Classifier Graph 𝑮𝟏 Label Distribution 𝑭𝑮𝟏 ∗ Label Propagation Graph 𝑮𝟐 Label Distribution 𝑭𝑮𝟐 ∗ Copy Expansion Softmax Sigmoid 𝒑 𝒑𝒌=𝟏 𝒑𝒌=𝟐 𝒑𝒌=𝑲 𝝊 log 𝐾 − 1 𝑘 − 1 + 𝒌 − 𝟏 log 𝒑 + (𝑲 − 𝒌) log(𝟏 − 𝒑) 𝝊 Log CMB PMF … Label Propagation Teacher Model Student Model One-Shot Labeled Essays Unlabeled Essays Figure 1: Architecture of the Transductive Graph-Based Ordinal Distillation (TGOD) framework. labels from the one-shot essays to the unlabeled essays; label guessing that generates the pseudo labels of unlabeled essays from results of multiple graph propagation. 3.2.1 Multiple Graphs Construction. To construct a graph on the essay set X, we need first to extract the feature embedding of each essay xi ∈ X. Specifically, we employ an embedding layer followed by a mean pooling layer as the essay encoder fe (·) to extract the feature embedding fe (xi) of essay xi . Based on the feature embedding of essays, we then construct a neighborhood graph G = (V, E,W ) for the essay set X, where V = X denotes the node set, E denotes the edge set, and W denotes the adjacent matrix. To construct an appropriate graph, we employ the Gaussian kernel function [53] to calculate the adjacent matrix W : Wij = exp − d fe (xi), fe (xj) 2σ 2 , (2) where d(·, ·) is a distance measure (e.g., Euclidean distance) and σ is a length scale parameter. To construct a k-nearest neighbor graph, we only keep the kmax values in each row ofW , and then apply the normalized graph Laplacians [6] on W : S = D − 1 2W D− 1 2 , (3) where D is a diagonal matrix with its (i,i)-value to be the sum of the i-th row of W . While using different pre-trained word embeddings as the embedding layer may result in different k-nearest neighbor graphs, we can construct B graphs by using B types of pre-trained word embeddings (e.g., Word2Vec [20], GloVe [28], ELMo [31], BERT [43]). 3.2.2 Label Propagation. We now describe how to get predictions for the unlabeled essays set Xu using label propagation [23]. Let F denote the set of N × K sized matrix with nonnegative entries. We define a label matrix Y ∈ F with Yij = 1 if xi is from the one-shot essays Xo and labeled asyi = j, otherwise Yij = 0. Starting fromY, label propagation iteratively determines the unknown labels of essays in Xu according to the graph structure using the following formulation: F t+1 = αSFt + (1 − α)Y, (4) where F t ∈ F denotes the predicted labels at the timestamp t, S denotes the normalized weight, and α ∈ (0, 1) controls the amount of propagated information. It is well known that the sequence {F t } has a closed-form solution as follows: F ∗ = (I − αS) −1Y, (5) where I is the identity matrix [52]. 3.2.3 Label Guessing. For each unlabeled essay in Xu , we produce a "guess" for its label based on the predictions of label propagation on multiple graphs. This guess is later used as pseudo label of unlabeled essay for knowledge distillation. To do so, we first compute the average of the label distributions predicted by label propagation on all the B graphs by Y ′ = 1 B Õ B b=1 F ∗ Gb , (6) where Y ′ denotes the averaged label distribution matrix, and F ∗ Gb denotes the final label distribution matrix generated by applying label propagation on graph Gb . Then, for each unlabeled essay xi ∈ Xu , its pseudo label y ′ i is obtained as follows: y ′ i = arg max 1≤j ≤K Y ′ ij , (7) where Y ′ ij denotes the j-th element of the i-th row vector of Y ′ . 3.3 Ordinal-Aware Neural Network (Student) We introduce the Student Model illustrated in Figure 1, which is an ordinal-aware neural network model and consists of two main components: essay encoder that extracts the feature embedding 2349