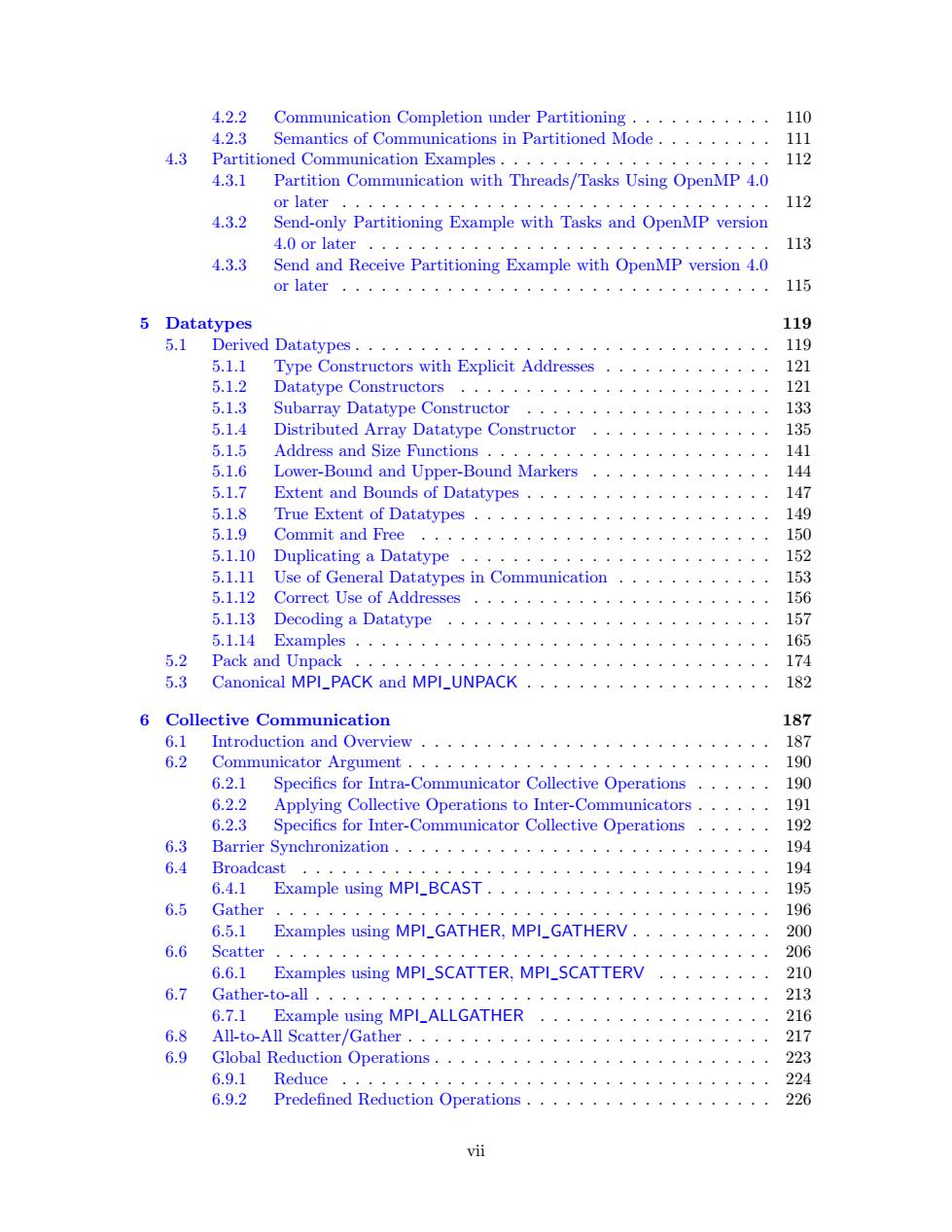

4.2.2 Communication Completion under Partitioning . . . 110
4.2.3 Semantics of Communications in Partitioned Mode . . . 111
4.3 Partitioned Communication Examples . . . 112
4.3.1 Partition Communication with Threads/Tasks Using OpenMP 4.0 or later . . . 112
4.3.2 Send-only Partitioning Example with Tasks and OpenMP version 4.0 or later . . . 113
4.3.3 Send and Receive Partitioning Example with OpenMP version 4.0 or later . . . 115
5 Datatypes . . . 119
5.1 Derived Datatypes . . . 119
5.1.1 Type Constructors with Explicit Addresses . . . 121
5.1.2 Datatype Constructors . . . 121
5.1.3 Subarray Datatype Constructor . . . 133
5.1.4 Distributed Array Datatype Constructor . . . 135
5.1.5 Address and Size Functions . . . 141
5.1.6 Lower-Bound and Upper-Bound Markers . . . 144
5.1.7 Extent and Bounds of Datatypes . . . 147
5.1.8 True Extent of Datatypes . . . 149
5.1.9 Commit and Free . . . 150
5.1.10 Duplicating a Datatype . . . 152
5.1.11 Use of General Datatypes in Communication . . . 153
5.1.12 Correct Use of Addresses . . . 156
5.1.13 Decoding a Datatype . . . 157
5.1.14 Examples . . . 165
5.2 Pack and Unpack . . . 174
5.3 Canonical MPI_PACK and MPI_UNPACK . . . 182
6 Collective Communication . . . 187
6.1 Introduction and Overview . . . 187
6.2 Communicator Argument . . . 190
6.2.1 Specifics for Intra-Communicator Collective Operations . . . 190
6.2.2 Applying Collective Operations to Inter-Communicators . . . 191
6.2.3 Specifics for Inter-Communicator Collective Operations . . . 192
6.3 Barrier Synchronization . . . 194
6.4 Broadcast . . . 194
6.4.1 Example using MPI_BCAST . . . 195
6.5 Gather . . . 196
6.5.1 Examples using MPI_GATHER, MPI_GATHERV . . . 200
6.6 Scatter . . . 206
6.6.1 Examples using MPI_SCATTER, MPI_SCATTERV . . . 210
6.7 Gather-to-all . . . 213
6.7.1 Example using MPI_ALLGATHER . . . 216
6.8 All-to-All Scatter/Gather . . . 217
6.9 Global Reduction Operations . . . 223
6.9.1 Reduce . . . 224
6.9.2 Predefined Reduction Operations . . . 226