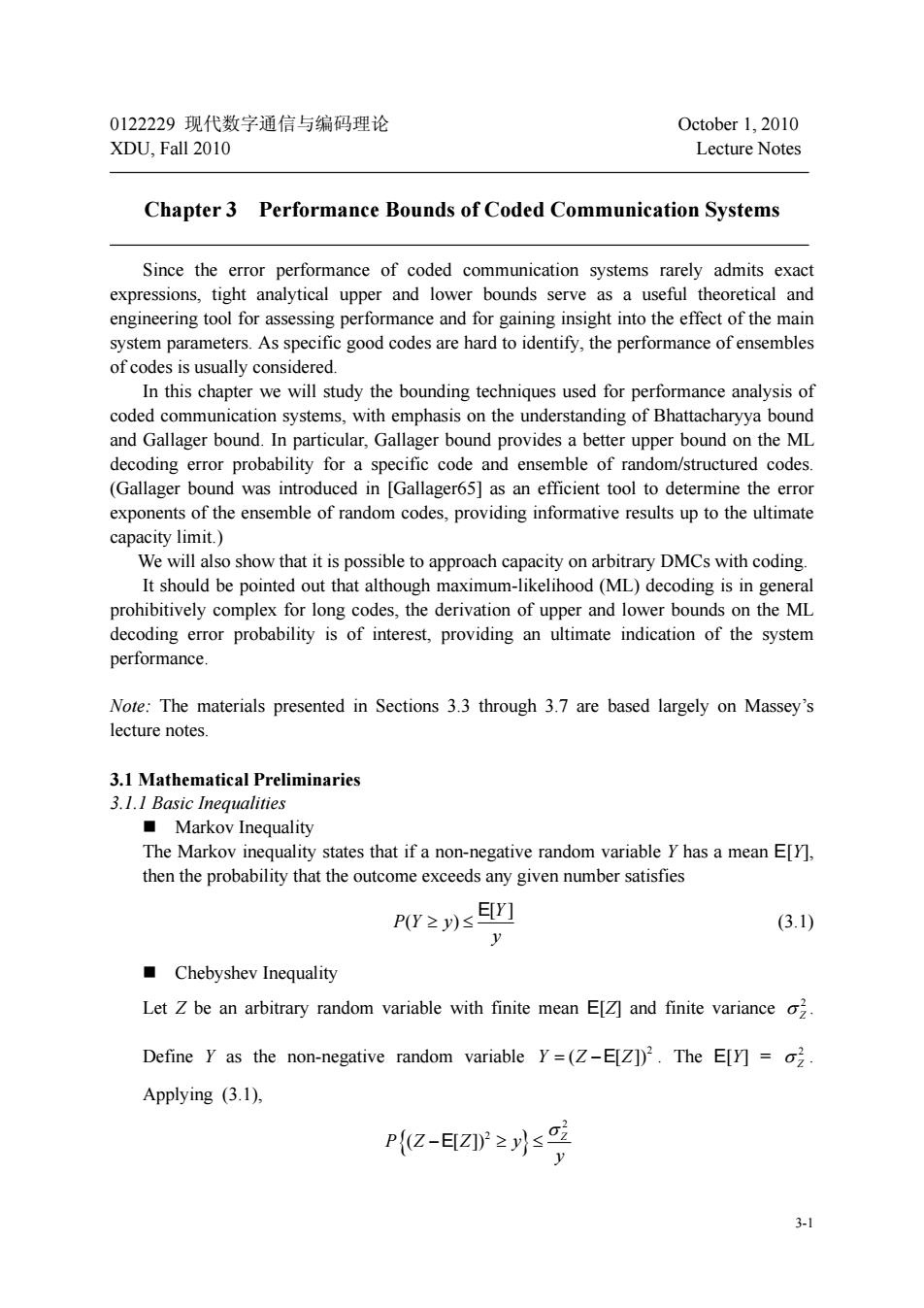

0122229 Modern Digital Communication and Coding Theory
XDU, Fall 2010, Lecture Notes, October 1, 2010

Chapter 3 Performance Bounds of Coded Communication Systems

Since the error performance of coded communication systems rarely admits exact expressions, tight analytical upper and lower bounds serve as a useful theoretical and engineering tool. They are used to assess performance and to gain insight into the effect of the main system parameters. Because specific good codes are hard to identify, the performance of ensembles of codes is usually considered. In this chapter we study the bounding techniques used for the performance analysis of coded communication systems, with emphasis on understanding the Bhattacharyya bound and the Gallager bound. In particular, the Gallager bound provides a better upper bound on the ML decoding error probability, both for a specific code and for ensembles of random or structured codes. (The Gallager bound was introduced in [Gallager65] as an efficient tool for determining the error exponents of the ensemble of random codes, and it gives informative results up to the ultimate capacity limit.) We will also show that it is possible to approach capacity on arbitrary DMCs with coding.

Maximum-likelihood (ML) decoding is in general prohibitively complex for long codes. Even so, upper and lower bounds on the ML decoding error probability are of interest, because they provide an ultimate indication of system performance.

Note: The material presented in Sections 3.3 through 3.7 is based largely on Massey's lecture notes.

3.1 Mathematical Preliminaries

3.1.1 Basic Inequalities

Markov Inequality

The Markov inequality concerns a non-negative random variable $Y$ with mean $E[Y]$. For any given number $y > 0$, the probability that the outcome is at least $y$ satisfies

$$P(Y \ge y) \le \frac{E[Y]}{y} \tag{3.1}$$

Chebyshev Inequality

Let $Z$ be an arbitrary random variable with finite mean $E[Z]$ and finite variance $\sigma_Z^2$. Define $Y$ as the non-negative random variable $Y = (Z - E[Z])^2$.
Then $E[Y] = \sigma_Z^2$. Applying (3.1), for any $y > 0$,

$$P\{(Z - E[Z])^2 \ge y\} \le \frac{\sigma_Z^2}{y}$$
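As a quick numerical sanity check of (3.1) and the Chebyshev bound, the following Python sketch samples from two distributions that are assumptions chosen for illustration and do not appear in the notes. It uses an exponential with rate 1 for $Y$ and a standard Gaussian for $Z$. The sketch compares empirical tail frequencies against the two bounds. Both inequalities hold for any distribution, including the empirical distribution of the samples. So when the sample mean and the 1/n sample variance are plugged in, the estimated tail can never exceed the bound. The helper names `empirical_tail`, `markov_bound` and `chebyshev_bound` are hypothetical.

```python
import math
import random


def empirical_tail(samples, threshold):
    """Fraction of samples >= threshold, i.e. an estimate of P(X >= threshold)."""
    return sum(1 for s in samples if s >= threshold) / len(samples)


def markov_bound(mean, y):
    """Right-hand side of (3.1): E[Y]/y, valid for non-negative Y and y > 0."""
    if y <= 0:
        raise ValueError("y must be positive")
    return mean / y


def chebyshev_bound(variance, y):
    """Bound on P{(Z - E[Z])^2 >= y}: sigma_Z^2 / y, valid for y > 0."""
    if y <= 0:
        raise ValueError("y must be positive")
    return variance / y


rng = random.Random(2010)  # fixed seed for reproducibility
n = 100_000

# Assumed example for Y: Exponential with rate 1 (non-negative, E[Y] = 1).
ys = [rng.expovariate(1.0) for _ in range(n)]
mean_y = sum(ys) / n

# Assumed example for Z: standard Gaussian; Chebyshev uses Y = (Z - E[Z])^2.
zs = [rng.gauss(0.0, 1.0) for _ in range(n)]
mean_z = sum(zs) / n
sq_dev = [(z - mean_z) ** 2 for z in zs]
var_z = sum(sq_dev) / n  # sample variance with 1/n normalization

for y in (1.0, 2.0, 4.0):
    print(f"y = {y}: P(Y >= y) ~ {empirical_tail(ys, y):.4f} "
          f"<= Markov {markov_bound(mean_y, y):.4f};  "
          f"P((Z-E[Z])^2 >= y) ~ {empirical_tail(sq_dev, y):.4f} "
          f"<= Chebyshev {chebyshev_bound(var_z, y):.4f}")

# Exact tail of the assumed exponential, for comparison with the Markov bound.
print(f"exact exponential tail at y = 4: {math.exp(-4):.4f}")
```

The printout shows how loose these bounds can be. For the exponential case at $y = 4$, the exact tail is $e^{-4} \approx 0.018$, while the Markov bound is roughly $0.25$.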