正在加载图片...

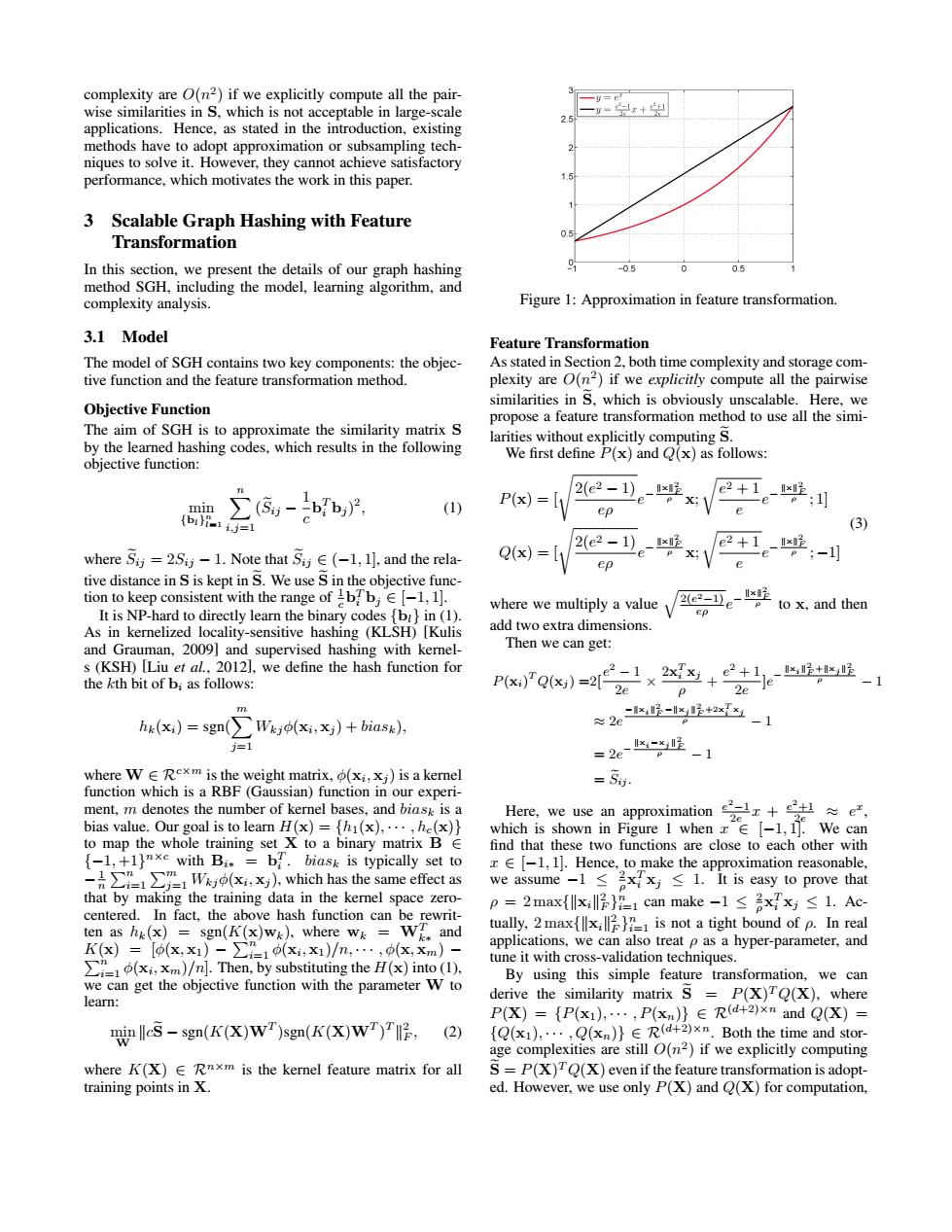

complexity are O(n2)if we explicitly compute all the pair- wise similarities in S,which is not acceptable in large-scale applications.Hence,as stated in the introduction,existing methods have to adopt approximation or subsampling tech- niques to solve it.However,they cannot achieve satisfactory performance,which motivates the work in this paper. 3 Scalable Graph Hashing with Feature Transformation In this section,we present the details of our graph hashing -05 0.5 method SGH,including the model,learning algorithm,and complexity analysis. Figure 1:Approximation in feature transformation 3.1 Model Feature Transformation The model of SGH contains two key components:the objec- As stated in Section 2,both time complexity and storage com- tive function and the feature transformation method. plexity are O(n2)if we explicitly compute all the pairwise similarities in S.which is obviously unscalable.Here,we Objective Function propose a feature transformation method to use all the simi- The aim of SGH is to approximate the similarity matrix S by the learned hashing codes,which results in the following larities without explicitly computing S. We first define P(x)and Q(x)as follows: objective function: min ∑-bb,, 2(e2-1e-x (1) P(x)= x;1 e2+1。-:川 {bt-1,j ep (3) 2(e2-1)。-x2 where Sij=2Si-l.Note that Sj∈(-1,l↓,and the rela-- Q(x)=[1 P X; 2+e-4;-1 tive distance in S is kept in S.We use S in the objective func- tion to keep consistent with the range of Ibb;E[-1,1]. to x,and then It is NP-hard to directly learn the binary codes {bi}in (1). where we multiply a value ep As in kernelized locality-sensitive hashing (KLSH)[Kulis add two extra dimensions. and Grauman,2009]and supervised hashing with kernel- Then we can get: s(KSH)[Liu et al.,2012],we define the hash function for the kth bit of bi as follows: Px)o)=22x+e正-1 hk(xi)=sgn(Wj(xixj)+biasx), ≈2e呢-3+色-1 =1 =2e--1 where W E Rexm is the weight matrix,(xi,xj)is a kernel =5 function which is a RBF(Gaussian)function in our experi- ment,m denotes the number of kernel bases,and biask is a Here,we use an approximatione, bias value.Our goal is to learn H(x)=th(x),...,he(x)} which is shown in Figure1 when x∈【-l,,We can to map the whole training set X to a binary matrix B E find that these two functions are close to each other with {-1,+1)nxe with Bi.=b.biask is typically set to z E[-1,1].Hence,to make the approximation reasonable, -∑gei∑=lWkp(x,)which has the same effect as we assume-l≤axx≤1.It is easy to prove that that by making the training data in the kernel space zero- p=2max{lx3}是1 can make-1≤axx≤1.Ac centered.In fact,the above hash function can be rewrit- ten as h(x)=sgn(K(x)w).where w=Wi.and tually,2 maxx is not a tight bound of p.In real K(x)=[(x,x)-∑1(x,x)/n,…,(x,xm)- applications,we can also treat p as a hyper-parameter,and )/n].Then,by substituting the H()into (1). tune it with cross-validation techniques. By using this simple feature transformation,we can we can get the objective function with the parameter W to learn: derive the similarity matrix S P(X)TQ(X),where P(X)={P(x),…,P(xn)}∈Rd+2)xn and Q(X)= nlleS -sgn(K(X)WT)sgn(K(x)WT)T (2) {Q(x1),...,Q(xn)}E R(d+2)xm.Both the time and stor- age complexities are still O(n2)if we explicitly computing where K(X)E Rnxm is the kernel feature matrix for all S=P(X)TQ(X)even if the feature transformation is adopt- training points in X. ed.However,we use only P(X)and O(X)for computation,complexity are O(n 2 ) if we explicitly compute all the pairwise similarities in S, which is not acceptable in large-scale applications. Hence, as stated in the introduction, existing methods have to adopt approximation or subsampling techniques to solve it. However, they cannot achieve satisfactory performance, which motivates the work in this paper. 3 Scalable Graph Hashing with Feature Transformation In this section, we present the details of our graph hashing method SGH, including the model, learning algorithm, and complexity analysis. 3.1 Model The model of SGH contains two key components: the objective function and the feature transformation method. Objective Function The aim of SGH is to approximate the similarity matrix S by the learned hashing codes, which results in the following objective function: min {bl} n l=1 Xn i,j=1 (Seij − 1 c b T i bj ) 2 , (1) where Seij = 2Sij − 1. Note that Seij ∈ (−1, 1], and the relative distance in S is kept in Se. We use Se in the objective function to keep consistent with the range of 1 c b T i bj ∈ [−1, 1]. It is NP-hard to directly learn the binary codes {bl} in (1). As in kernelized locality-sensitive hashing (KLSH) [Kulis and Grauman, 2009] and supervised hashing with kernels (KSH) [Liu et al., 2012], we define the hash function for the kth bit of bi as follows: hk(xi) = sgn( Xm j=1 Wkjφ(xi , xj ) + biask), where W ∈ Rc×m is the weight matrix, φ(xi , xj ) is a kernel function which is a RBF (Gaussian) function in our experiment, m denotes the number of kernel bases, and biask is a bias value. Our goal is to learn H(x) = {h1(x), · · · , hc(x)} to map the whole training set X to a binary matrix B ∈ {−1, +1} n×c with Bi∗ = b T i . biask is typically set to − 1 n Pn i=1 Pm j=1 Wkjφ(xi , xj ), which has the same effect as that by making the training data in the kernel space zerocentered. In fact, the above hash function can be rewritten as hk(x) = sgn(K(x)wk), where wk = WT k∗ and K(x) = [φ(x, x1) − Pn i=1 φ(xi P , x1)/n, · · · , φ(x, xm) − n i=1 φ(xi , xm)/n]. Then, by substituting the H(x) into (1), we can get the objective function with the parameter W to learn: min W kcSe − sgn(K(X)WT )sgn(K(X)WT ) T k 2 F , (2) where K(X) ∈ Rn×m is the kernel feature matrix for all training points in X. Figure 1: Approximation in feature transformation. Feature Transformation As stated in Section 2, both time complexity and storage complexity are O(n 2 ) if we explicitly compute all the pairwise similarities in Se, which is obviously unscalable. Here, we propose a feature transformation method to use all the similarities without explicitly computing Se. We first define P(x) and Q(x) as follows: P(x) = [s 2(e 2 − 1) eρ e − kxk 2 F ρ x; r e 2 + 1 e e − kxk 2 F ρ ; 1] Q(x) = [s 2(e 2 − 1) eρ e − kxk 2 F ρ x; r e 2 + 1 e e − kxk 2 F ρ ; −1] (3) where we multiply a value q2(e 2−1) eρ e − kxk 2 F ρ to x, and then add two extra dimensions. Then we can get: P(xi) T Q(xj ) =2[ e 2 − 1 2e × 2x T i xj ρ + e 2 + 1 2e ]e − kxik 2 F +kxj k 2 F ρ − 1 ≈ 2e −kxik 2 F −kxj k 2 F +2xT i xj ρ − 1 = 2e − kxi−xj k 2 F ρ − 1 = Seij . Here, we use an approximation e 2−1 2e x + e 2+1 2e ≈ e x , which is shown in Figure 1 when x ∈ [−1, 1]. We can find that these two functions are close to each other with x ∈ [−1, 1]. Hence, to make the approximation reasonable, we assume −1 ≤ 2 ρ x T i xj ≤ 1. It is easy to prove that ρ = 2 max{kxik 2 F } n i=1 can make −1 ≤ 2 ρ x T i xj ≤ 1. Actually, 2 max{kxik 2 F } n i=1 is not a tight bound of ρ. In real applications, we can also treat ρ as a hyper-parameter, and tune it with cross-validation techniques. By using this simple feature transformation, we can derive the similarity matrix Se = P(X) T Q(X), where P(X) = {P(x1), · · · , P(xn)} ∈ R(d+2)×n and Q(X) = {Q(x1), · · · , Q(xn)} ∈ R(d+2)×n. Both the time and storage complexities are still O(n 2 ) if we explicitly computing Se = P(X) T Q(X) even if the feature transformation is adopted. However, we use only P(X) and Q(X) for computation