
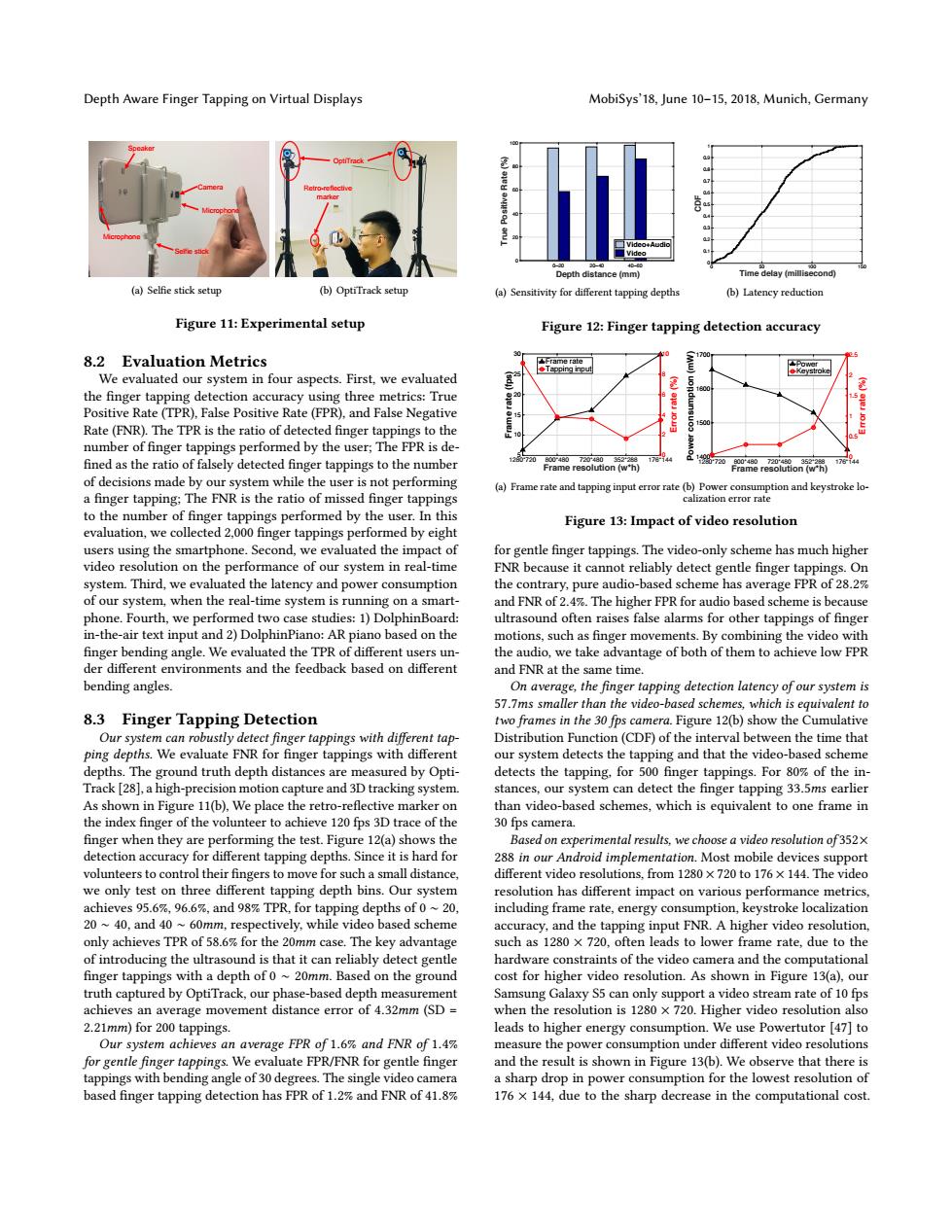

Depth Aware Finger Tapping on Virtual Displays MobiSys’18, June 10–15, 2018, Munich, Germany

Figure 11: Experimental setup. (a) Selfie stick setup, with camera, speaker, two microphones, and selfie stick labeled; (b) OptiTrack setup, with the retro-reflective marker labeled.

8.2 Evaluation Metrics

We evaluated our system in four aspects. First, we evaluated the finger tapping detection accuracy using three metrics: True Positive Rate (TPR), False Positive Rate (FPR), and False Negative Rate (FNR). The TPR is the ratio of detected finger tappings to the number of finger tappings performed by the user; the FPR is the ratio of falsely detected finger tappings to the number of decisions made by our system while the user is not performing a finger tapping; and the FNR is the ratio of missed finger tappings to the number of finger tappings performed by the user. In this evaluation, we collected 2,000 finger tappings performed by eight users using the smartphone. Second, we evaluated the impact of video resolution on the performance of our real-time system. Third, we evaluated the latency and power consumption of our system when the real-time system runs on a smartphone. Fourth, we performed two case studies: 1) DolphinBoard, in-the-air text input, and 2) DolphinPiano, an AR piano based on the finger bending angle.
We also evaluated the TPR of different users in different environments and the feedback based on different bending angles.

8.3 Finger Tapping Detection

Our system can robustly detect finger tappings of different tapping depths. We evaluate the FNR for finger tappings with different depths. The ground-truth depth distances are measured by OptiTrack [28], a high-precision motion capture and 3D tracking system. As shown in Figure 11(b), we place a retro-reflective marker on the volunteer's index finger to obtain a 120 fps 3D trace of the finger while the volunteer performs the test. Figure 12(a) shows the detection accuracy for different tapping depths. Since it is hard for volunteers to precisely control such small finger movements, we only test three tapping-depth bins. Our system achieves 95.6%, 96.6%, and 98% TPR for tapping depths of 0–20, 20–40, and 40–60 mm, respectively, while the video-based scheme only achieves a TPR of 58.6% for the 0–20 mm bin. The key advantage of introducing ultrasound is that it can reliably detect gentle finger tappings with a depth of 0–20 mm. Compared with the ground truth captured by OptiTrack, our phase-based depth measurement achieves an average movement distance error of 4.32 mm (SD = 2.21 mm) over 200 tappings.

Our system achieves an average FPR of 1.6% and FNR of 1.4% for gentle finger tappings, which we evaluate using finger tappings with a bending angle of 30 degrees.
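The three detection metrics defined in Section 8.2 are plain ratios of event counts, and the FNR is the complement of the TPR because every performed tapping is either detected or missed. The minimal Python sketch below assumes the evaluation log has already been reduced to four tallies. The `TapCounts` class, its field names, and the counts in the usage example are hypothetical; they are not the authors' code or data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TapCounts:
    """Hypothetical per-run tallies; field names are illustrative only."""
    performed_taps: int     # finger tappings actually performed by the user
    detected_taps: int      # performed tappings that the system detected
    non_tap_decisions: int  # decisions made while the user was NOT tapping
    false_detections: int   # of those decisions, how many reported a tapping

    def __post_init__(self) -> None:
        # Sanity checks so that every ratio below is well defined.
        if self.performed_taps <= 0 or self.non_tap_decisions <= 0:
            raise ValueError("denominators must be positive")
        if not 0 <= self.detected_taps <= self.performed_taps:
            raise ValueError("detected_taps must be in [0, performed_taps]")
        if not 0 <= self.false_detections <= self.non_tap_decisions:
            raise ValueError("false_detections must be in [0, non_tap_decisions]")


def tpr(c: TapCounts) -> float:
    """True Positive Rate: detected tappings / performed tappings."""
    return c.detected_taps / c.performed_taps


def fnr(c: TapCounts) -> float:
    """False Negative Rate: missed tappings / performed tappings (= 1 - TPR)."""
    missed = c.performed_taps - c.detected_taps
    return missed / c.performed_taps


def fpr(c: TapCounts) -> float:
    """False Positive Rate: false detections / decisions made while not tapping."""
    return c.false_detections / c.non_tap_decisions


if __name__ == "__main__":
    # Made-up counts, for illustration only.
    counts = TapCounts(performed_taps=200, detected_taps=190,
                       non_tap_decisions=400, false_detections=6)
    print(f"TPR={tpr(counts):.1%} FNR={fnr(counts):.1%} FPR={fpr(counts):.1%}")
    # prints: TPR=95.0% FNR=5.0% FPR=1.5%
```

Note that the FPR uses a different denominator (decisions made while the user is not tapping), so it cannot be derived from the TPR and has to be tallied separately.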
The single-camera, video-only finger tapping detection has an FPR of 1.2% and an FNR of 41.8% for gentle finger tappings. The video-only scheme has a much higher FNR because it cannot reliably detect gentle finger tappings. In contrast, the pure audio-based scheme has an average FPR of 28.2% and FNR of 2.4%. The audio-based scheme has a higher FPR because ultrasound often raises false alarms for finger motions other than tapping, such as ordinary finger movements. By combining video with audio, we take advantage of both to achieve a low FPR and a low FNR at the same time.

Figure 12: Finger tapping detection accuracy. (a) Sensitivity for different tapping depths: true positive rate (%) versus depth distance (0–20, 20–40, 40–60 mm) for Video+Audio and Video; (b) Latency reduction: CDF of the time delay (milliseconds).

Figure 13: Impact of video resolution. (a) Frame rate (fps) and tapping input error rate (%) versus frame resolution (w × h: 1280 × 720, 800 × 480, 720 × 480, 352 × 288, 176 × 144); (b) Power consumption (mW) and keystroke localization error rate (%) versus frame resolution.

On average, the finger tapping detection latency of our system is 57.7 ms lower than that of the video-based scheme, which is roughly two frames of a 30 fps camera. Figure 12(b) shows the cumulative distribution function (CDF) of the interval between the time our system detects a tapping and the time the video-based scheme detects it, for 500 finger tappings. For 80% of the instances, our system detects the finger tapping at least 33.5 ms earlier than the video-based scheme, which corresponds to about one frame of a 30 fps camera.

Based on these experimental results, we choose a video resolution of 352 × 288 in our Android implementation.
Most mobile devices support a range of video resolutions, from 1280 × 720 to 176 × 144. The video resolution affects several performance metrics, including frame rate, energy consumption, keystroke localization accuracy, and the tapping input FNR. A higher video resolution, such as 1280 × 720, often leads to a lower frame rate, due to the hardware constraints of the video camera and the higher computational cost of processing larger frames. As shown in Figure 13(a), our Samsung Galaxy S5 only supports a video frame rate of 10 fps at a resolution of 1280 × 720. A higher video resolution also leads to higher energy consumption. We use PowerTutor [47] to measure the power consumption under different video resolutions, and the result is shown in Figure 13(b). We observe a sharp drop in power consumption at the lowest resolution of 176 × 144, due to the sharp decrease in computational cost.
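Both the latency results of Section 8.3 and the frame-rate limits of high resolutions are easier to compare in units of frame periods. One frame lasts 1000/f ms at f fps, so 57.7 ms is about 1.7 frames at 30 fps and 33.5 ms is about one frame. The minimal Python sketch below performs this conversion. It also shows how a statement such as "80% of the instances" can be read from per-tapping delay samples, assuming the delays are measured as how much earlier the audio-assisted detection fires than the video-only one. The helper names and the sample delays are hypothetical; they are not the authors' code or measurements.

```python
from typing import Iterable


def frame_period_ms(fps: float) -> float:
    """Duration of one video frame in milliseconds at the given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def latency_in_frames(latency_ms: float, fps: float) -> float:
    """Express a latency (or latency saving) as a fractional number of frames."""
    return latency_ms / frame_period_ms(fps)


def fraction_at_least(delays_ms: Iterable[float], threshold_ms: float) -> float:
    """Fraction of samples whose delay is >= threshold_ms.

    This is the complement of the empirical CDF evaluated just below
    threshold_ms: a result of 0.8 means 80% of tappings were detected at
    least threshold_ms earlier.
    """
    samples = list(delays_ms)
    if not samples:
        raise ValueError("need at least one sample")
    return sum(1 for d in samples if d >= threshold_ms) / len(samples)


if __name__ == "__main__":
    print(f"{frame_period_ms(30):.1f} ms per frame at 30 fps")   # 33.3 ms
    print(f"{frame_period_ms(10):.1f} ms per frame at 10 fps")   # 100.0 ms
    print(f"{latency_in_frames(57.7, 30):.1f} frames saved")     # 1.7 frames
    # Hypothetical per-tapping delays (ms) by which audio beats video.
    demo = [12.0, 35.0, 40.0, 48.0, 66.0]
    print(fraction_at_least(demo, 33.5))                         # 0.8
```

At the 10 fps that the Galaxy S5 supports at 1280 × 720, a single frame already spans 100 ms, three times the frame period at 30 fps.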