正在加载图片...

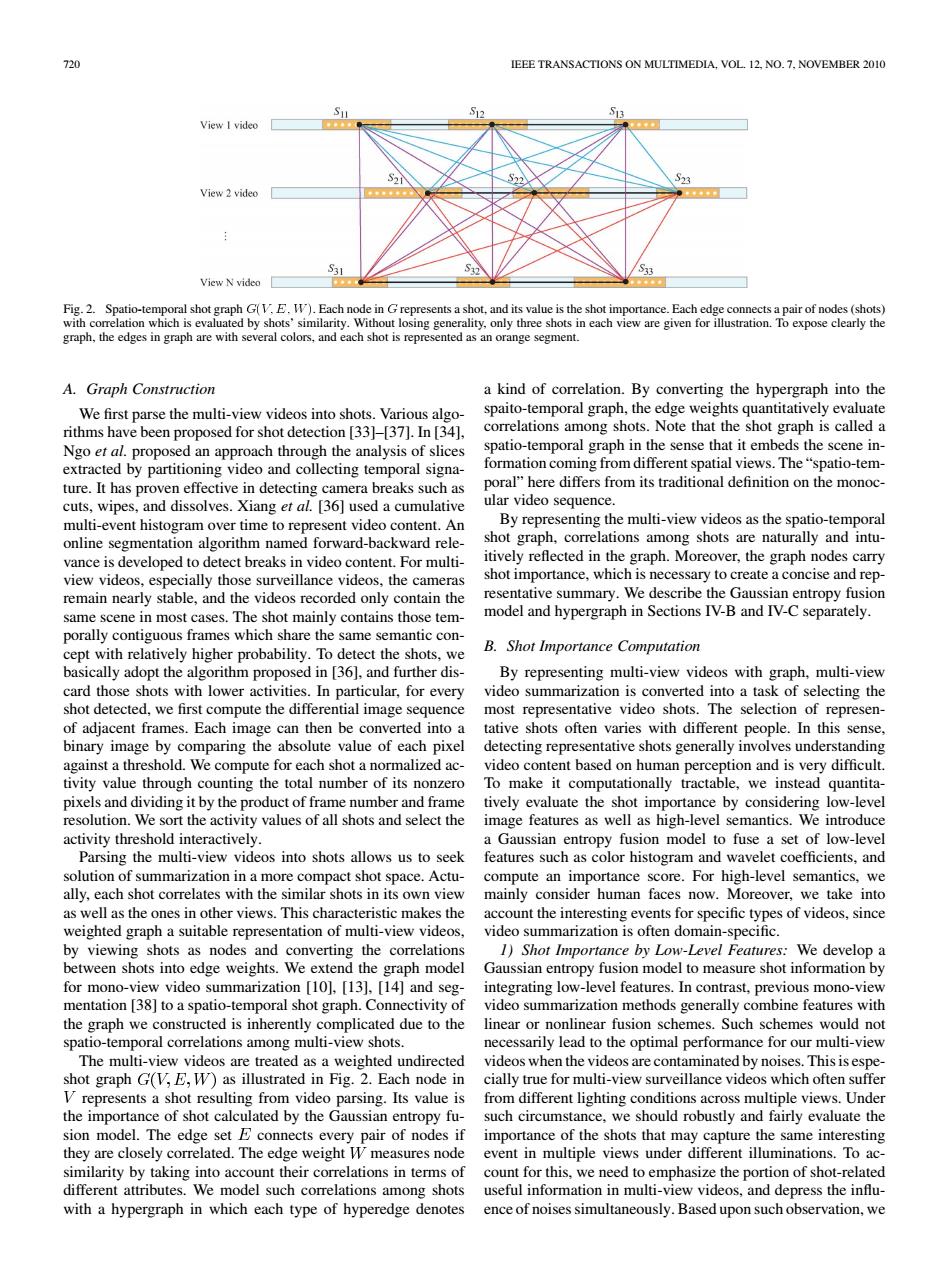

720 IEEE TRANSACTIONS ON MULTIMEDIA.VOL.12.NO.7.NOVEMBER 2010 1 View I video S22 S23 View 2 video S31 View N video■ Fig.2.Spatio-temporal shot graph G(V.E.W).Each node in G represents a shot,and its value is the shot importance.Each edge connects a pair of nodes (shots) with correlation which is evaluated by shots'similarity.Without losing generality,only three shots in each view are given for illustration.To expose clearly the graph,the edges in graph are with several colors,and each shot is represented as an orange segment. A.Graph Construction a kind of correlation.By converting the hypergraph into the We first parse the multi-view videos into shots.Various algo- spaito-temporal graph,the edge weights quantitatively evaluate rithms have been proposed for shot detection [33]-[37].In [34], correlations among shots.Note that the shot graph is called a Ngo et al.proposed an approach through the analysis of slices spatio-temporal graph in the sense that it embeds the scene in- extracted by partitioning video and collecting temporal signa- formation coming from different spatial views.The"spatio-tem- ture.It has proven effective in detecting camera breaks such as poral"here differs from its traditional definition on the monoc- cuts,wipes,and dissolves.Xiang et al.[36]used a cumulative ular video sequence. multi-event histogram over time to represent video content.An By representing the multi-view videos as the spatio-temporal online segmentation algorithm named forward-backward rele- shot graph,correlations among shots are naturally and intu- vance is developed to detect breaks in video content.For multi- itively reflected in the graph.Moreover,the graph nodes carry view videos,especially those surveillance videos,the cameras shot importance,which is necessary to create a concise and rep- remain nearly stable,and the videos recorded only contain the resentative summary.We describe the Gaussian entropy fusion same scene in most cases.The shot mainly contains those tem- model and hypergraph in Sections IV-B and IV-C separately. porally contiguous frames which share the same semantic con- cept with relatively higher probability.To detect the shots,we B.Shot Importance Computation basically adopt the algorithm proposed in [36],and further dis- By representing multi-view videos with graph,multi-view card those shots with lower activities.In particular,for every video summarization is converted into a task of selecting the shot detected,we first compute the differential image sequence most representative video shots.The selection of represen- of adjacent frames.Each image can then be converted into a tative shots often varies with different people.In this sense, binary image by comparing the absolute value of each pixel detecting representative shots generally involves understanding against a threshold.We compute for each shot a normalized ac- video content based on human perception and is very difficult. tivity value through counting the total number of its nonzero To make it computationally tractable,we instead quantita- pixels and dividing it by the product of frame number and frame tively evaluate the shot importance by considering low-level resolution.We sort the activity values of all shots and select the image features as well as high-level semantics.We introduce activity threshold interactively. a Gaussian entropy fusion model to fuse a set of low-level Parsing the multi-view videos into shots allows us to seek features such as color histogram and wavelet coefficients,and solution of summarization in a more compact shot space.Actu-compute an importance score.For high-level semantics,we ally,each shot correlates with the similar shots in its own view mainly consider human faces now.Moreover,we take into as well as the ones in other views.This characteristic makes the account the interesting events for specific types of videos,since weighted graph a suitable representation of multi-view videos, video summarization is often domain-specific. by viewing shots as nodes and converting the correlations 1)Shot Importance by Low-Level Features:We develop a between shots into edge weights.We extend the graph model Gaussian entropy fusion model to measure shot information by for mono-view video summarization [10,[13,[14]and seg- integrating low-level features.In contrast,previous mono-view mentation38]to a spatio-temporal shot graph.Connectivity of video summarization methods generally combine features with the graph we constructed is inherently complicated due to the linear or nonlinear fusion schemes.Such schemes would not spatio-temporal correlations among multi-view shots necessarily lead to the optimal performance for our multi-view The multi-view videos are treated as a weighted undirected videos when the videos are contaminated by noises.This is espe- shot graph G(V,E,W)as illustrated in Fig.2.Each node in cially true for multi-view surveillance videos which often suffer V represents a shot resulting from video parsing.Its value is from different lighting conditions across multiple views.Under the importance of shot calculated by the Gaussian entropy fu- such circumstance,we should robustly and fairly evaluate the sion model.The edge set E connects every pair of nodes if importance of the shots that may capture the same interesting they are closely correlated.The edge weight W measures node event in multiple views under different illuminations.To ac- similarity by taking into account their correlations in terms of count for this,we need to emphasize the portion of shot-related different attributes.We model such correlations among shots useful information in multi-view videos,and depress the influ- with a hypergraph in which each type of hyperedge denotes ence of noises simultaneously.Based upon such observation,we720 IEEE TRANSACTIONS ON MULTIMEDIA, VOL. 12, NO. 7, NOVEMBER 2010 Fig. 2. Spatio-temporal shot graph . Each node in represents a shot, and its value is the shot importance. Each edge connects a pair of nodes (shots) with correlation which is evaluated by shots’ similarity. Without losing generality, only three shots in each view are given for illustration. To expose clearly the graph, the edges in graph are with several colors, and each shot is represented as an orange segment. A. Graph Construction We first parse the multi-view videos into shots. Various algorithms have been proposed for shot detection [33]–[37]. In [34], Ngo et al. proposed an approach through the analysis of slices extracted by partitioning video and collecting temporal signature. It has proven effective in detecting camera breaks such as cuts, wipes, and dissolves. Xiang et al. [36] used a cumulative multi-event histogram over time to represent video content. An online segmentation algorithm named forward-backward relevance is developed to detect breaks in video content. For multiview videos, especially those surveillance videos, the cameras remain nearly stable, and the videos recorded only contain the same scene in most cases. The shot mainly contains those temporally contiguous frames which share the same semantic concept with relatively higher probability. To detect the shots, we basically adopt the algorithm proposed in [36], and further discard those shots with lower activities. In particular, for every shot detected, we first compute the differential image sequence of adjacent frames. Each image can then be converted into a binary image by comparing the absolute value of each pixel against a threshold. We compute for each shot a normalized activity value through counting the total number of its nonzero pixels and dividing it by the product of frame number and frame resolution. We sort the activity values of all shots and select the activity threshold interactively. Parsing the multi-view videos into shots allows us to seek solution of summarization in a more compact shot space. Actually, each shot correlates with the similar shots in its own view as well as the ones in other views. This characteristic makes the weighted graph a suitable representation of multi-view videos, by viewing shots as nodes and converting the correlations between shots into edge weights. We extend the graph model for mono-view video summarization [10], [13], [14] and segmentation [38] to a spatio-temporal shot graph. Connectivity of the graph we constructed is inherently complicated due to the spatio-temporal correlations among multi-view shots. The multi-view videos are treated as a weighted undirected shot graph as illustrated in Fig. 2. Each node in represents a shot resulting from video parsing. Its value is the importance of shot calculated by the Gaussian entropy fusion model. The edge set connects every pair of nodes if they are closely correlated. The edge weight measures node similarity by taking into account their correlations in terms of different attributes. We model such correlations among shots with a hypergraph in which each type of hyperedge denotes a kind of correlation. By converting the hypergraph into the spaito-temporal graph, the edge weights quantitatively evaluate correlations among shots. Note that the shot graph is called a spatio-temporal graph in the sense that it embeds the scene information coming from different spatial views. The “spatio-temporal” here differs from its traditional definition on the monocular video sequence. By representing the multi-view videos as the spatio-temporal shot graph, correlations among shots are naturally and intuitively reflected in the graph. Moreover, the graph nodes carry shot importance, which is necessary to create a concise and representative summary. We describe the Gaussian entropy fusion model and hypergraph in Sections IV-B and IV-C separately. B. Shot Importance Computation By representing multi-view videos with graph, multi-view video summarization is converted into a task of selecting the most representative video shots. The selection of representative shots often varies with different people. In this sense, detecting representative shots generally involves understanding video content based on human perception and is very difficult. To make it computationally tractable, we instead quantitatively evaluate the shot importance by considering low-level image features as well as high-level semantics. We introduce a Gaussian entropy fusion model to fuse a set of low-level features such as color histogram and wavelet coefficients, and compute an importance score. For high-level semantics, we mainly consider human faces now. Moreover, we take into account the interesting events for specific types of videos, since video summarization is often domain-specific. 1) Shot Importance by Low-Level Features: We develop a Gaussian entropy fusion model to measure shot information by integrating low-level features. In contrast, previous mono-view video summarization methods generally combine features with linear or nonlinear fusion schemes. Such schemes would not necessarily lead to the optimal performance for our multi-view videos when the videos are contaminated by noises. This is especially true for multi-view surveillance videos which often suffer from different lighting conditions across multiple views. Under such circumstance, we should robustly and fairly evaluate the importance of the shots that may capture the same interesting event in multiple views under different illuminations. To account for this, we need to emphasize the portion of shot-related useful information in multi-view videos, and depress the influence of noises simultaneously. Based upon such observation, we����