正在加载图片...

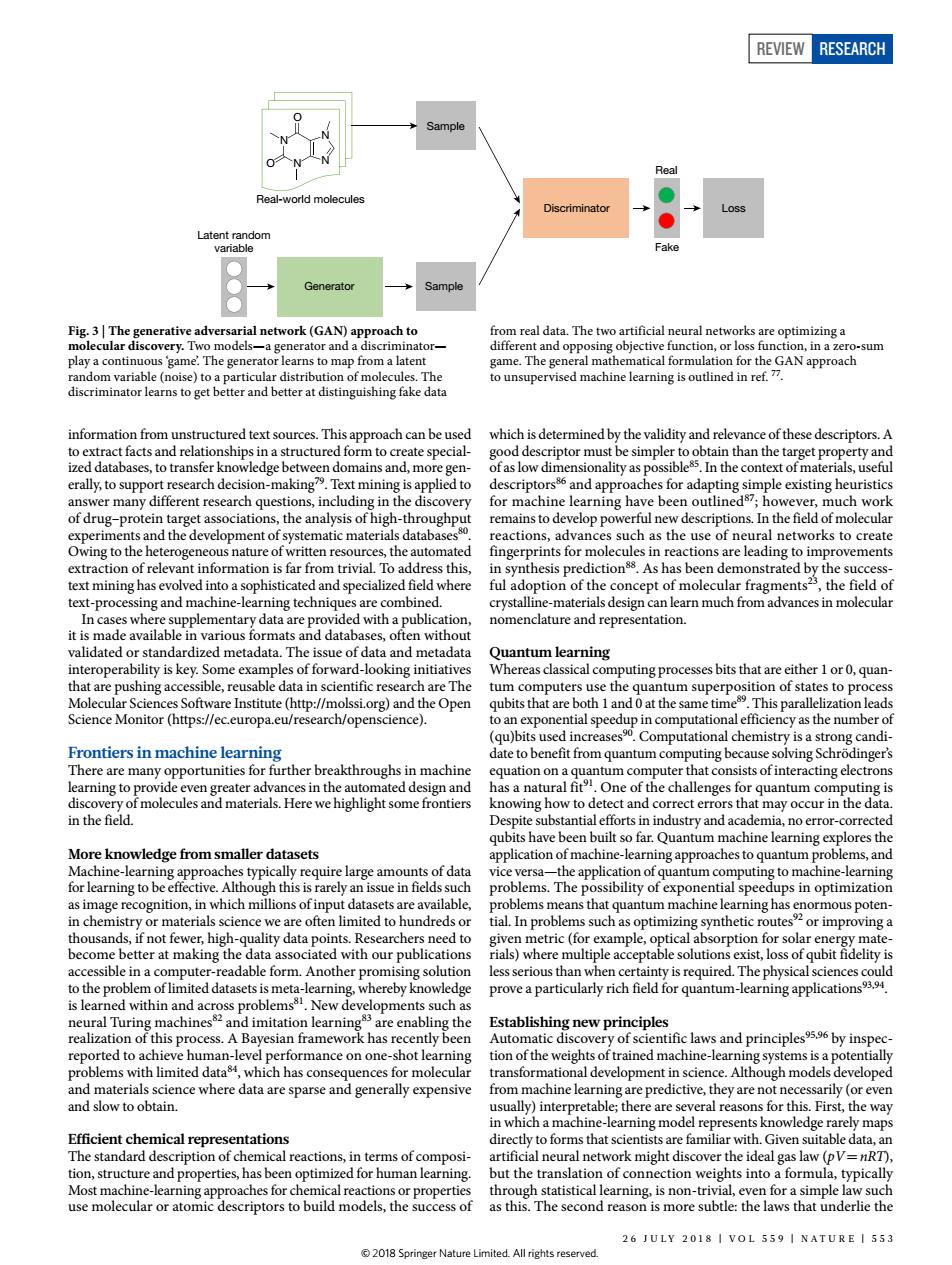

REVIEW RESEARCH Sampl generative(GAN)approach to ioedldaTetoatinctneralnetwotkeopmtng e the general mathe n for the GANap to unsuper ised machine learning is outlined in ref.7 hcanbe which is determined by the yalidity and rele ace of these deserinto tract fac erally,to supp arch decision-making ext mining is pplied to and appro mpleexisting heuristic of dru otein tithe of high-throug ins to dey wing to the hete s the tomated is far from trivia To a ction.As has been den ts?the field o ext-proces nate uting processes bits that are either l or o auan are pushing acc ble y as the number o Frontiers in machine learning date to be Gt from qu mputing becau ng S int ntum als.He ers o nowledge f in to he app of qu rr blems m ntum machine lea arning has orm ous poten a che eD r solar at mak ing th ed wil n our publi )where ible in ed.The Prove Particularly rich field for guantum-eanpication achinesand imitation learning are enabl the Establishing new principles 19506 orted to cheve human-eve ance on one-shot learn yial ems with limited da molec and slow to obtain. are cral reaso for this.First. the wa directly to forms that scie iver but the translat 26 JULY 2018 I VOL 559 I NATURE I 553 All righ Review RESEARCH information from unstructured text sources. This approach can be used to extract facts and relationships in a structured form to create specialized databases, to transfer knowledge between domains and, more generally, to support research decision-making79. Text mining is applied to answer many different research questions, including in the discovery of drug–protein target associations, the analysis of high-throughput experiments and the development of systematic materials databases80. Owing to the heterogeneous nature of written resources, the automated extraction of relevant information is far from trivial. To address this, text mining has evolved into a sophisticated and specialized field where text-processing and machine-learning techniques are combined. In cases where supplementary data are provided with a publication, it is made available in various formats and databases, often without validated or standardized metadata. The issue of data and metadata interoperability is key. Some examples of forward-looking initiatives that are pushing accessible, reusable data in scientific research are The Molecular Sciences Software Institute (http://molssi.org) and the Open Science Monitor (https://ec.europa.eu/research/openscience). Frontiers in machine learning There are many opportunities for further breakthroughs in machine learning to provide even greater advances in the automated design and discovery of molecules and materials. Here we highlight some frontiers in the field. More knowledge from smaller datasets Machine-learning approaches typically require large amounts of data for learning to be effective. Although this is rarely an issue in fields such as image recognition, in which millions of input datasets are available, in chemistry or materials science we are often limited to hundreds or thousands, if not fewer, high-quality data points. Researchers need to become better at making the data associated with our publications accessible in a computer-readable form. Another promising solution to the problem of limited datasets is meta-learning, whereby knowledge is learned within and across problems81. New developments such as neural Turing machines82 and imitation learning83 are enabling the realization of this process. A Bayesian framework has recently been reported to achieve human-level performance on one-shot learning problems with limited data84, which has consequences for molecular and materials science where data are sparse and generally expensive and slow to obtain. Efficient chemical representations The standard description of chemical reactions, in terms of composition, structure and properties, has been optimized for human learning. Most machine-learning approaches for chemical reactions or properties use molecular or atomic descriptors to build models, the success of which is determined by the validity and relevance of these descriptors. A good descriptor must be simpler to obtain than the target property and of as low dimensionality as possible85. In the context of materials, useful descriptors86 and approaches for adapting simple existing heuristics for machine learning have been outlined87; however, much work remains to develop powerful new descriptions. In the field of molecular reactions, advances such as the use of neural networks to create fingerprints for molecules in reactions are leading to improvements in synthesis prediction88. As has been demonstrated by the successful adoption of the concept of molecular fragments23, the field of crystalline-materials design can learn much from advances in molecular nomenclature and representation. Quantum learning Whereas classical computing processes bits that are either 1 or 0, quantum computers use the quantum superposition of states to process qubits that are both 1 and 0 at the same time89. This parallelization leads to an exponential speedup in computational efficiency as the number of (qu)bits used increases90. Computational chemistry is a strong candidate to benefit from quantum computing because solving Schrödinger’s equation on a quantum computer that consists of interacting electrons has a natural fit91. One of the challenges for quantum computing is knowing how to detect and correct errors that may occur in the data. Despite substantial efforts in industry and academia, no error-corrected qubits have been built so far. Quantum machine learning explores the application of machine-learning approaches to quantum problems, and vice versa—the application of quantum computing to machine-learning problems. The possibility of exponential speedups in optimization problems means that quantum machine learning has enormous potential. In problems such as optimizing synthetic routes92 or improving a given metric (for example, optical absorption for solar energy materials) where multiple acceptable solutions exist, loss of qubit fidelity is less serious than when certainty is required. The physical sciences could prove a particularly rich field for quantum-learning applications93,94. Establishing new principles Automatic discovery of scientific laws and principles95,96 by inspection of the weights of trained machine-learning systems is a potentially transformational development in science. Although models developed from machine learning are predictive, they are not necessarily (or even usually) interpretable; there are several reasons for this. First, the way in which a machine-learning model represents knowledge rarely maps directly to forms that scientists are familiar with. Given suitable data, an artificial neural network might discover the ideal gas law (pV=nRT), but the translation of connection weights into a formula, typically through statistical learning, is non-trivial, even for a simple law such as this. The second reason is more subtle: the laws that underlie the Real-world molecules Sample Generator Discriminator Loss Sample Latent random variable Real Fake N N N N O O Fig. 3 | The generative adversarial network (GAN) approach to molecular discovery. Two models—a generator and a discriminator— play a continuous ‘game’. The generator learns to map from a latent random variable (noise) to a particular distribution of molecules. The discriminator learns to get better and better at distinguishing fake data from real data. The two artificial neural networks are optimizing a different and opposing objective function, or loss function, in a zero-sum game. The general mathematical formulation for the GAN approach to unsupervised machine learning is outlined in ref. 77. 26 J U LY 2018 | V OL 559 | NATUR E | 553 © 2018 Springer Nature Limited. All rights reserved