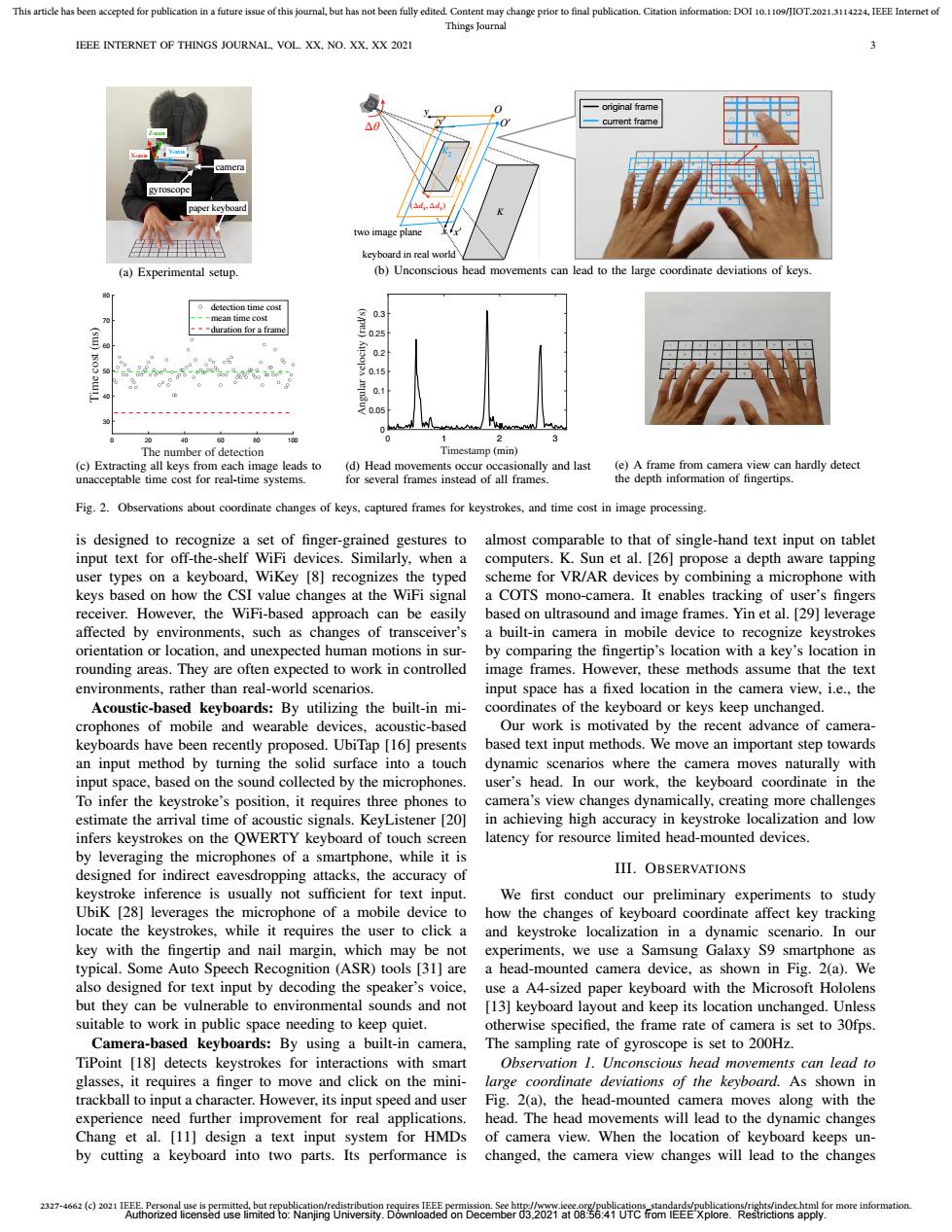

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/JIOT.2021.3114224, IEEE Internet of Things Journal.
IEEE INTERNET OF THINGS JOURNAL, VOL. XX, NO. XX, XX 2021
2327-4662 (c) 2021 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for more information.

Fig. 2. Observations about coordinate changes of keys, captured frames for keystrokes, and time cost in image processing. (a) Experimental setup: a head-mounted camera with a gyroscope (X/Y/Z axes) facing a paper keyboard. (b) Unconscious head movements can lead to large coordinate deviations of keys: between the original frame and the current frame, the key positions shift by (Δdx, Δdy) and rotate by Δθ. (c) Extracting all keys from each image leads to unacceptable time cost for real-time systems (time cost in ms vs. number of detections; detection time cost, mean time cost, and duration of a frame). (d) Head movements occur occasionally and last for several frames instead of all frames (angular velocity in rad/s vs. timestamp in ms). (e) A frame from the camera view can hardly capture the depth information of fingertips.

is designed to recognize a set of finger-grained gestures to input text for off-the-shelf WiFi devices. Similarly, when a user types on a keyboard, WiKey [8] recognizes the typed keys based on how the CSI values change at the WiFi signal receiver. However, WiFi-based approaches can be easily affected by the environment, for example by changes in the transceiver's orientation or location and by unexpected human motion in the surrounding area. They are therefore usually expected to work in controlled environments rather than in real-world scenarios.

Acoustic-based keyboards: Several recently proposed keyboards utilize the built-in microphones of mobile and wearable devices. UbiTap [16] turns a solid surface into a touch input space based on the sound collected by microphones; to infer a keystroke's position, it requires three phones to estimate the arrival times of the acoustic signals. KeyListener [20] infers keystrokes on the QWERTY keyboard of a touch screen by leveraging the microphones of a smartphone; however, since it is designed for indirect eavesdropping attacks, its keystroke inference accuracy is usually not sufficient for text input.
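The arrival-time principle described for UbiTap [16] can be illustrated with a generic time-difference-of-arrival (TDOA) sketch. This is not UbiTap's implementation: the phone coordinates, the propagation speed, the grid step, and the helper names `predicted_tdoa` and `locate_tap` are all hypothetical. Taking arrival-time differences relative to the first phone cancels the unknown instant of the tap, and a brute-force grid search then picks the surface point whose predicted differences best match the measured ones.

```python
import math
from itertools import product


def predicted_tdoa(point, receivers, speed):
    """Arrival-time differences (s) of a sound emitted at `point`, relative to receiver 0."""
    dists = [math.dist(point, r) for r in receivers]
    return [(d - dists[0]) / speed for d in dists[1:]]


def locate_tap(receivers, arrival_times, speed, width, height, step=0.005):
    """Grid-search the surface point whose predicted TDOAs best match the measured ones.

    Subtracting the first arrival time cancels the unknown moment of the tap,
    so only the relative timing between receivers is needed.
    """
    measured = [t - arrival_times[0] for t in arrival_times[1:]]
    nx = round(width / step) + 1
    ny = round(height / step) + 1
    best, best_err = None, float("inf")
    for i, j in product(range(nx), range(ny)):
        p = (i * step, j * step)
        pred = predicted_tdoa(p, receivers, speed)
        err = sum((a - b) ** 2 for a, b in zip(pred, measured))
        if err < best_err:
            best, best_err = p, err
    return best


# Usage with simulated data: three hypothetical phone positions (m) around a roughly A4-sized area.
receivers = [(0.0, 0.0), (0.3, 0.0), (0.0, 0.2)]
speed = 343.0          # assumed propagation speed (m/s): the speed of sound in air
true_tap = (0.12, 0.08)
t_tap = 0.5            # unknown to the solver; it cancels out in the differences
times = [t_tap + math.dist(true_tap, r) / speed for r in receivers]
print(locate_tap(receivers, times, speed, width=0.3, height=0.2))
```

The grid search is chosen only because it is short and dependency-free; a least-squares solver would be the usual choice when precision or speed matters. Sound also propagates through a solid surface at a different speed than through air, so the 343 m/s value is purely an assumption for the simulation.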
UbiK [28] leverages the microphone of a mobile device to locate keystrokes, but it requires the user to strike a key with both the fingertip and the nail margin, which may not be a typical way of typing. Some Automatic Speech Recognition (ASR) tools [31] also support text input by decoding the speaker's voice, but they are vulnerable to environmental noise and unsuitable for public spaces where users need to keep quiet.

Camera-based keyboards: TiPoint [18] uses a built-in camera to detect keystrokes for interacting with smart glasses; it requires the user to move a finger and click on a mini-trackball to input each character. However, its input speed and user experience need further improvement for real applications. Chang et al. [11] design a text input system for HMDs by splitting a keyboard into two parts; its performance is almost comparable to that of single-hand text input on tablet computers. K. Sun et al. [26] propose a depth-aware tapping scheme for VR/AR devices that combines a microphone with a COTS mono-camera, enabling the user's fingers to be tracked from both ultrasound and image frames. Yin et al. [29] leverage the built-in camera of a mobile device to recognize keystrokes by comparing the fingertip's location with each key's location in image frames. However, these methods assume that the text input space has a fixed location in the camera view, i.e., that the coordinates of the keyboard or keys remain unchanged.

Our work is motivated by recent advances in camera-based text input methods. We take an important step toward dynamic scenarios in which the camera moves naturally with the user's head. In our work, the keyboard coordinates in the camera's view change dynamically, which makes it more challenging to achieve both high accuracy in keystroke localization and low latency on resource-limited head-mounted devices.

III. OBSERVATIONS

We first conduct preliminary experiments to study how changes in keyboard coordinates affect key tracking and keystroke localization in a dynamic scenario.
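Why a fixed-coordinate assumption breaks when the camera moves, and the coordinate change depicted in Fig. 2(b), can be made concrete with a small sketch. It assumes a nearest-key-center rule for mapping a fingertip to a key (loosely in the spirit of comparing fingertip and key locations) and models the head-induced change as an in-plane rotation Δθ followed by a translation (Δdx, Δdy). The key coordinates, the rotation pivot, and the helper names `transform_layout` and `nearest_key` are hypothetical, and the model ignores perspective distortion; it is not the method of the authors or of Yin et al. [29].

```python
import math

# Hypothetical key centers (x, y) in pixels in the original frame. A real layout
# would come from detecting the keyboard in the image, which is not shown here.
KEYS = {"Q": (20.0, 20.0), "W": (60.0, 20.0), "E": (100.0, 20.0),
        "A": (30.0, 60.0), "S": (70.0, 60.0), "D": (110.0, 60.0)}


def transform_layout(keys, dtheta, ddx, ddy, pivot=(0.0, 0.0)):
    """Rotate every key center by dtheta (rad) about `pivot`, then translate by (ddx, ddy).

    Simplified rigid 2D model of the head-induced coordinate change (no perspective).
    """
    px, py = pivot
    c, s = math.cos(dtheta), math.sin(dtheta)
    moved = {}
    for name, (x, y) in keys.items():
        rx, ry = x - px, y - py
        moved[name] = (px + c * rx - s * ry + ddx, py + s * rx + c * ry + ddy)
    return moved


def nearest_key(fingertip, keys):
    """Map a fingertip position to the key whose center is closest to it."""
    return min(keys, key=lambda k: math.dist(fingertip, keys[k]))


# Usage: a small head movement rotates the view by 0.02 rad and shifts it by 40 px.
current = transform_layout(KEYS, dtheta=0.02, ddx=40.0, ddy=0.0)
tip = current["S"]  # fingertip pressing "S", as seen in the current frame
print(nearest_key(tip, KEYS), nearest_key(tip, current))  # prints: D S
```

With the stale layout the fingertip is attributed to the neighboring key; only after the layout is moved by the same (Δdx, Δdy, Δθ) is the intended key recovered, which is why keystroke localization must track keyboard coordinates when the camera moves with the head.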
In our experiments, we use a Samsung Galaxy S9 smartphone as a head-mounted camera device, as shown in Fig. 2(a). We also use an A4-sized paper keyboard with the Microsoft HoloLens [13] keyboard layout and keep its location unchanged. Unless otherwise specified, the camera frame rate is set to 30 fps and the gyroscope sampling rate is set to 200 Hz.

Observation 1. Unconscious head movements can lead to large coordinate deviations of the keyboard. As shown in Fig. 2(a), the head-mounted camera moves along with the head, so head movements dynamically change the camera view. Even when the keyboard's location remains unchanged, these camera view changes lead to the changes

Authorized licensed use limited to: Nanjing University. Downloaded on December 03, 2021 at 08:56:41 UTC from IEEE Xplore. Restrictions apply.