正在加载图片...

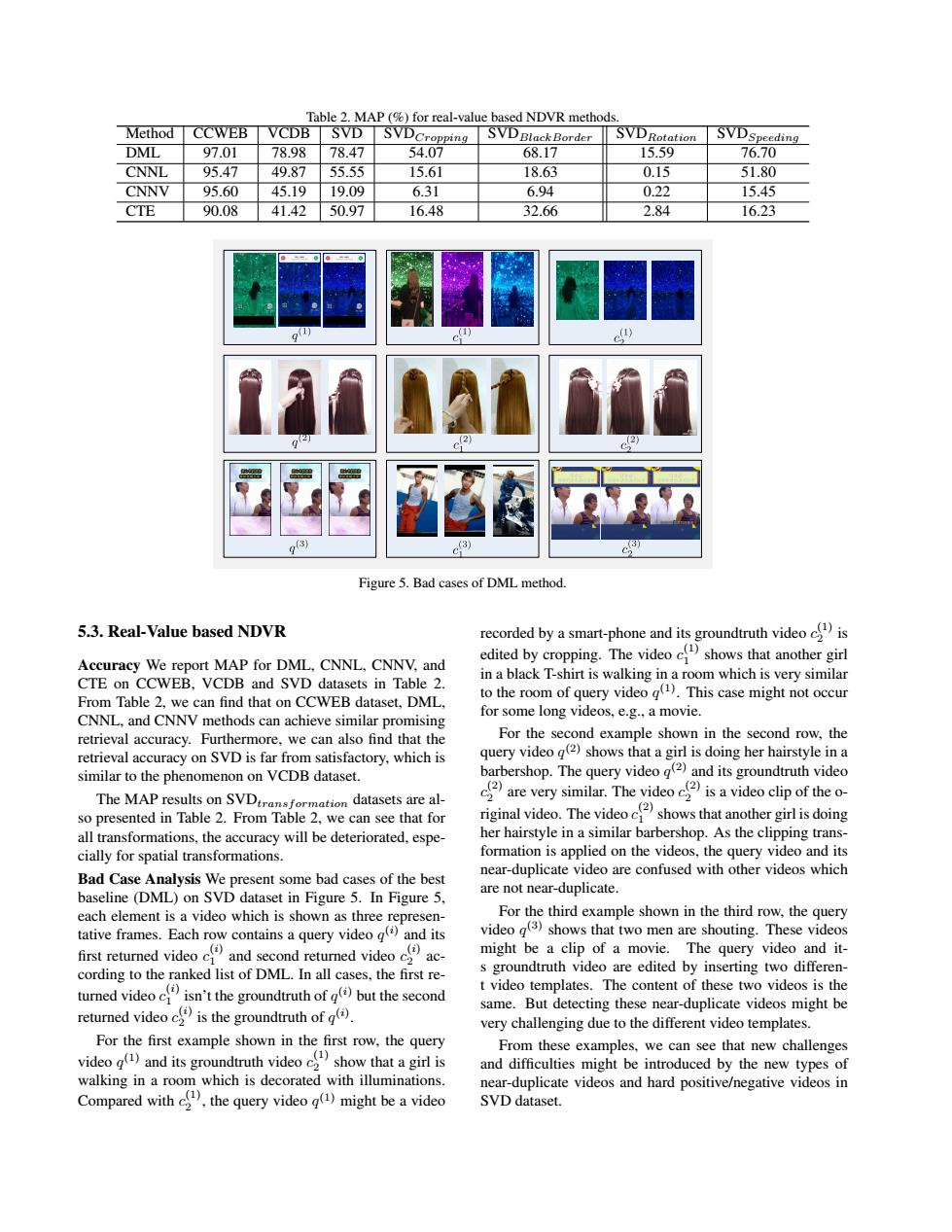

Table 2.MAP(%)for real-value based NDVR methods. Method CCWEB VCDB SVD SVDCropping SVDBlack Border SVDRotation SVDSpeeding DML 97.01 78.98 78.47 54.07 68.17 15.59 76.70 CNNL 95.47 49.87 55.55 15.61 18.63 0.15 51.80 CNNV 95.60 45.19 19.09 6.31 6.94 0.22 15.45 CTE 90.08 41.4250.97 16.48 32.66 2.84 16.23 C Figure 5.Bad cases of DML method. 5.3.Real-Value based NDVR recorded by a smart-phone and its groundtruth videois Accuracy We report MAP for DML,CNNL,CNNV,and edited by cropping.The video shows that another girl in a black T-shirt is walking in a room which is very similar CTE on CCWEB.VCDB and SVD datasets in Table 2. to the room of query video (1).This case might not occur From Table 2,we can find that on CCWEB dataset,DML, CNNL,and CNNV methods can achieve similar promising for some long videos,e.g.,a movie. retrieval accuracy.Furthermore,we can also find that the For the second example shown in the second row,the retrieval accuracy on SVD is far from satisfactory,which is query video g(2)shows that a girl is doing her hairstyle in a similar to the phenomenon on VCDB dataset. barbershop.The query video (2)and its groundtruth video The MAP results on SVDrmtio datasets are al- are very similar.The video isa video clip of theo- so presented in Table 2.From Table 2,we can see that for riginal video.The videoshows that another girlis doing all transformations,the accuracy will be deteriorated,espe- her hairstyle in a similar barbershop.As the clipping trans- cially for spatial transformations. formation is applied on the videos,the query video and its near-duplicate video are confused with other videos which Bad Case Analysis We present some bad cases of the best baseline (DML)on SVD dataset in Figure 5.In Figure 5, are not near-duplicate. each element is a video which is shown as three represen- For the third example shown in the third row,the query tative frames.Each row contains a query video g()and its video g(3)shows that two men are shouting.These videos first returned videoand second returned videoac- might be a clip of a movie.The query video and it- cording to the ranked list of DML.In all cases,the first re- s groundtruth video are edited by inserting two differen- tured videoisn't the groundtruth ofbut the second t video templates.The content of these two videos is the retumed video is the groundtruth of) same.But detecting these near-duplicate videos might be very challenging due to the different video templates. For the first example shown in the first row,the query From these examples,we can see that new challenges videoand its groundtruth videoshow that a girl is and difficulties might be introduced by the new types of walking in a room which is decorated with illuminations. near-duplicate videos and hard positive/negative videos in Compared withthe query video)might be a video SVD dataset.Table 2. MAP (%) for real-value based NDVR methods. Method CCWEB VCDB SVD SVDCropping SVDBlackBorder SVDRotation SVDSpeeding DML 97.01 78.98 78.47 54.07 68.17 15.59 76.70 CNNL 95.47 49.87 55.55 15.61 18.63 0.15 51.80 CNNV 95.60 45.19 19.09 6.31 6.94 0.22 15.45 CTE 90.08 41.42 50.97 16.48 32.66 2.84 16.23 Figure 5. Bad cases of DML method. 5.3. Real-Value based NDVR Accuracy We report MAP for DML, CNNL, CNNV, and CTE on CCWEB, VCDB and SVD datasets in Table 2. From Table 2, we can find that on CCWEB dataset, DML, CNNL, and CNNV methods can achieve similar promising retrieval accuracy. Furthermore, we can also find that the retrieval accuracy on SVD is far from satisfactory, which is similar to the phenomenon on VCDB dataset. The MAP results on SVDtransformation datasets are also presented in Table 2. From Table 2, we can see that for all transformations, the accuracy will be deteriorated, especially for spatial transformations. Bad Case Analysis We present some bad cases of the best baseline (DML) on SVD dataset in Figure 5. In Figure 5, each element is a video which is shown as three representative frames. Each row contains a query video q (i) and its first returned video c (i) 1 and second returned video c (i) 2 according to the ranked list of DML. In all cases, the first returned video c (i) 1 isn’t the groundtruth of q (i) but the second returned video c (i) 2 is the groundtruth of q (i) . For the first example shown in the first row, the query video q (1) and its groundtruth video c (1) 2 show that a girl is walking in a room which is decorated with illuminations. Compared with c (1) 2 , the query video q (1) might be a video recorded by a smart-phone and its groundtruth video c (1) 2 is edited by cropping. The video c (1) 1 shows that another girl in a black T-shirt is walking in a room which is very similar to the room of query video q (1). This case might not occur for some long videos, e.g., a movie. For the second example shown in the second row, the query video q (2) shows that a girl is doing her hairstyle in a barbershop. The query video q (2) and its groundtruth video c (2) 2 are very similar. The video c (2) 2 is a video clip of the original video. The video c (2) 1 shows that another girl is doing her hairstyle in a similar barbershop. As the clipping transformation is applied on the videos, the query video and its near-duplicate video are confused with other videos which are not near-duplicate. For the third example shown in the third row, the query video q (3) shows that two men are shouting. These videos might be a clip of a movie. The query video and its groundtruth video are edited by inserting two different video templates. The content of these two videos is the same. But detecting these near-duplicate videos might be very challenging due to the different video templates. From these examples, we can see that new challenges and difficulties might be introduced by the new types of near-duplicate videos and hard positive/negative videos in SVD dataset