
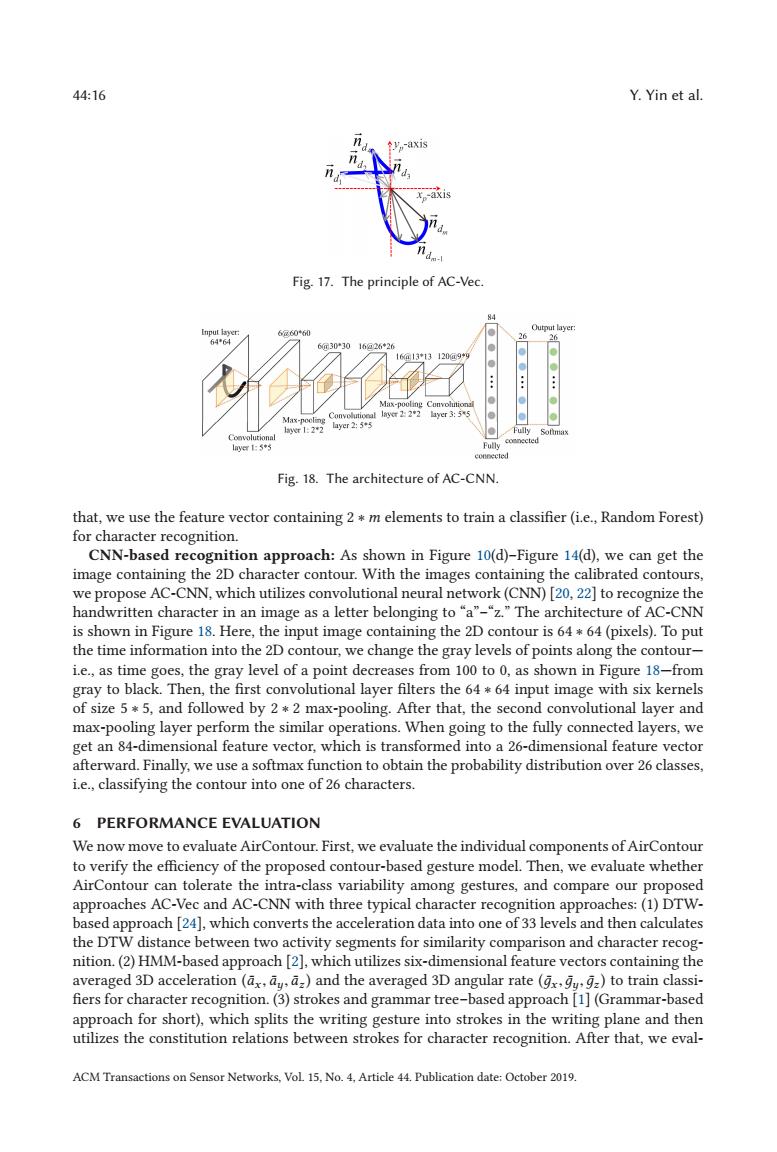

44:16 Y. Yin et al.

Fig. 17. The principle of AC-Vec.

Fig. 18. The architecture of AC-CNN. [Figure labels: input layer 64 × 64; convolutional layer 1 (5 × 5) → 6@60 × 60; max-pooling layer 1 (2 × 2) → 6@30 × 30; convolutional layer 2 (5 × 5) → 16@26 × 26; max-pooling layer 2 (2 × 2) → 16@13 × 13; convolutional layer 3 (5 × 5) → 120@9 × 9; fully connected layers → 84 → 26 (output layer).]

that, we use the feature vector containing 2m elements to train a classifier (i.e., a Random Forest) for character recognition.

CNN-based recognition approach: As shown in Figures 10(d) to 14(d), we obtain an image containing the 2D character contour. Using these images of calibrated contours, we propose AC-CNN, which uses a convolutional neural network (CNN) [20, 22] to recognize the handwritten character in an image as one of the letters "a" to "z". The architecture of AC-CNN is shown in Figure 18. The input image containing the 2D contour is 64 × 64 pixels.
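
As a minimal, non-authoritative sketch of the AC-CNN input and layer sizes, the Python below rasterizes a time-ordered contour into a 64 × 64 image whose gray level falls from 100 to 0 over time, and walks through the feature-map sizes labelled in Figure 18. It is not the authors' implementation. Assumptions: contour points are already normalized to [0, 1] × [0, 1]; the background is white (255); the gray level decreases linearly with the point index; only the sampled points are drawn, with no interpolation between them; convolutions are stride-1 "valid" 5 × 5 and pooling is non-overlapping 2 × 2. The names `render_contour`, `feature_map_sizes`, `flat_length`, and `softmax` are hypothetical helpers introduced for illustration.

```python
import math
from typing import List, Sequence, Tuple

BACKGROUND = 255  # assumption: white background; the paper does not state it


def render_contour(points: Sequence[Tuple[float, float]], size: int = 64) -> List[List[int]]:
    """Draw a time-ordered contour into a size x size grayscale image.

    Assumes points are normalized to [0, 1] x [0, 1]. The gray level of the
    i-th point falls linearly from 100 (first point) to 0 (last point), so
    darker pixels were written later in time. Only sample points are drawn.
    """
    img = [[BACKGROUND] * size for _ in range(size)]
    n = len(points)
    for i, (x, y) in enumerate(points):
        level = round(100 * (1 - i / (n - 1))) if n > 1 else 0
        # Map to pixel indices; image rows grow downward, so y is flipped.
        col = min(size - 1, max(0, int(x * (size - 1) + 0.5)))
        row = min(size - 1, max(0, int((1 - y) * (size - 1) + 0.5)))
        # If two samples hit the same pixel, keep the darker (later) one.
        img[row][col] = min(img[row][col], level)
    return img


def conv_out(side: int, kernel: int) -> int:
    """Output side length of a stride-1 'valid' convolution."""
    return side - kernel + 1


def pool_out(side: int, window: int) -> int:
    """Output side length of non-overlapping max-pooling."""
    return side // window


# (name, kind, output channels, kernel/window size), read off Figure 18.
LAYERS = [
    ("conv1", "conv", 6, 5),
    ("pool1", "pool", 6, 2),
    ("conv2", "conv", 16, 5),
    ("pool2", "pool", 16, 2),
    ("conv3", "conv", 120, 5),
]


def feature_map_sizes(side: int = 64) -> List[Tuple[str, int, int]]:
    """Return (layer name, channels, side length) after each layer."""
    sizes = []
    for name, kind, channels, k in LAYERS:
        side = conv_out(side, k) if kind == "conv" else pool_out(side, k)
        sizes.append((name, channels, side))
    return sizes


def flat_length(side: int = 64) -> int:
    """Number of values entering the first fully connected layer."""
    _, channels, last = feature_map_sizes(side)[-1]
    return channels * last * last


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over the class scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    for name, channels, side in feature_map_sizes(64):
        print(f"{name}: {channels}@{side}x{side}")
    print("flattened:", flat_length(64))
```

Under these assumptions the sizes come out as 6@60 × 60, 6@30 × 30, 16@26 × 26, 16@13 × 13, and 120@9 × 9, so 9,720 values enter the fully connected layers, which reduce them to 84 and then 26 outputs; `softmax` turns the 26 outputs into a probability distribution over "a" to "z".
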

To encode temporal information in the 2D contour, we vary the gray level of the points along the contour: as time progresses, the gray level of a point decreases from 100 to 0, i.e., from gray to black, as shown in Figure 18. The first convolutional layer then filters the 64 × 64 input image with six kernels of size 5 × 5, producing six 60 × 60 feature maps, which 2 × 2 max-pooling reduces to 30 × 30. The second convolutional layer and max-pooling layer then perform similar operations. The fully connected layers produce an 84-dimensional feature vector, which is then transformed into a 26-dimensional vector. Finally, a softmax function yields the probability distribution over the 26 classes, i.e., it classifies the contour as one of the 26 characters.

6 PERFORMANCE EVALUATION

We now evaluate AirContour. First, we evaluate the individual components of AirContour to verify the efficiency of the proposed contour-based gesture model. Then, we evaluate whether AirContour can tolerate intra-class variability among gestures, and compare our proposed approaches, AC-Vec and AC-CNN, with three typical character recognition approaches: (1) the DTW-based approach [24], which quantizes the acceleration data into 33 levels and then calculates the DTW distance between two activity segments to compare their similarity and recognize characters; (2) the HMM-based approach [2], which uses six-dimensional feature vectors containing the averaged 3D acceleration $(\bar{a}_x, \bar{a}_y, \bar{a}_z)$ and the averaged 3D angular rate $(\bar{g}_x, \bar{g}_y, \bar{g}_z)$ to train classifiers for character recognition; and (3) the stroke and grammar tree-based approach [1] (the Grammar-based approach for short), which splits the writing gesture into strokes in the writing plane and then uses the compositional relations between strokes for character recognition. After that, we eval-

ACM Transactions on Sensor Networks, Vol. 15, No. 4, Article 44. Publication date: October 2019.