正在加载图片...

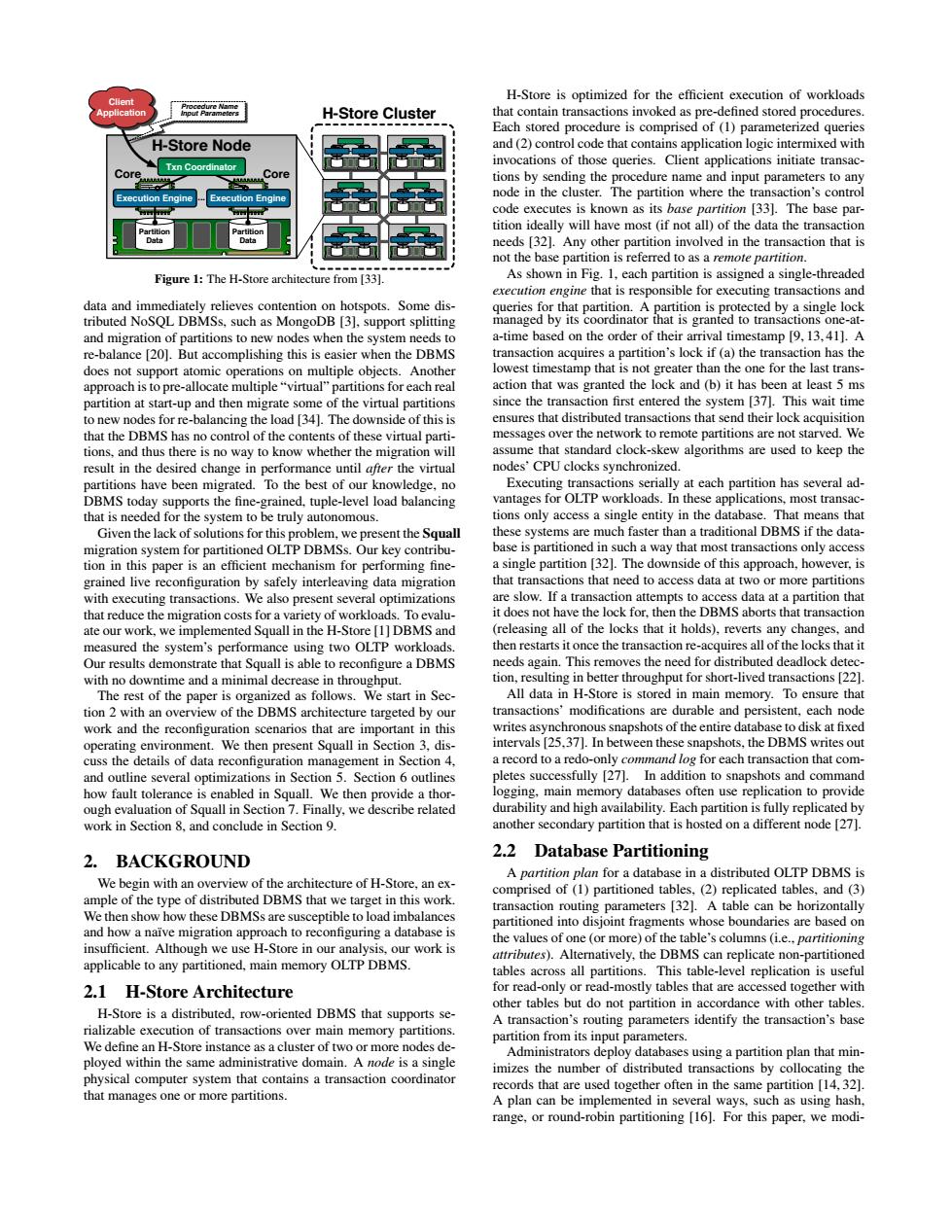

H-Store is optimized for the efficient execution of workload H-Store Cluster Eacontain -Store Nod ind (2)contr e the I input pa ecutes is s its bas 1331.The base o that is itecture from 33 le th Figure 1:The H-Sto tis responsible for executing tran quenes for a-time hased on the order of the ival ti nce 201.But accomplishing this ise when the DBMS action tha granted the lock and (b)it ha 3 t lea ures that distributed t sume tha ard clock-skew s CP ach tage nt the Squall ese sys al DBMS e da of th approach.h ntigurationbyafeyint slow.If a tr hat reduc DBMS ed th system's perfoma ance usin then res re a DB in thro tion t for short-lived u ns 12 hitecture ctions' and persistent. of data s.the DBMS fault tol e is en d in S nd hich ition is fulb work in Section 8.and conclude in Section 9 2.BACKGROUND 2.2 Database Partitioning et in thiswork ms (i.e replication is 2.1 H-Store Architecture othe H-Store is a distributed,row-oriented DBMS that supports se partition from its that manages one or more partitions range.or round-robin partitioning 6).For this paper.we modi-H-Store Node BadAss Chip 3000 Squirrels. Yes, Squirrels BadAss Chip 3000 Squirrels. Yes, Squirrels ... Partition Data Partition Data Execution Engine Execution Engine Txn Coordinator H-Store Cluster Client Application Core Core Procedure Name Input Parameters BadAss Chip 3000 Squirrels. Yes, Squirrels BadAss Chip 3000 Squirrels. Yes, Squirrels ... BadAss Chip 3000 Squirrels. Yes, Squirrels BadAss Chip 3000 Squirrels. Yes, Squirrels ... BadAss Chip 3000 Squirrels. Yes, Squirrels BadAss Chip 3000 Squirrels. Yes, Squirrels ... BadAss Chip 3000 Squirrels. Yes, Squirrels BadAss Chip 3000 Squirrels. Yes, Squirrels ... BadAss Chip 3000 Squirrels. Yes, Squirrels BadAss Chip 3000 Squirrels. Yes, Squirrels ... BadAss Chip 3000 Squirrels. Yes, Squirrels BadAss Chip 3000 Squirrels. Yes, Squirrels ... Figure 1: The H-Store architecture from [33]. data and immediately relieves contention on hotspots. Some distributed NoSQL DBMSs, such as MongoDB [3], support splitting and migration of partitions to new nodes when the system needs to re-balance [20]. But accomplishing this is easier when the DBMS does not support atomic operations on multiple objects. Another approach is to pre-allocate multiple “virtual” partitions for each real partition at start-up and then migrate some of the virtual partitions to new nodes for re-balancing the load [34]. The downside of this is that the DBMS has no control of the contents of these virtual partitions, and thus there is no way to know whether the migration will result in the desired change in performance until after the virtual partitions have been migrated. To the best of our knowledge, no DBMS today supports the fine-grained, tuple-level load balancing that is needed for the system to be truly autonomous. Given the lack of solutions for this problem, we present the Squall migration system for partitioned OLTP DBMSs. Our key contribution in this paper is an efficient mechanism for performing finegrained live reconfiguration by safely interleaving data migration with executing transactions. We also present several optimizations that reduce the migration costs for a variety of workloads. To evaluate our work, we implemented Squall in the H-Store [1] DBMS and measured the system’s performance using two OLTP workloads. Our results demonstrate that Squall is able to reconfigure a DBMS with no downtime and a minimal decrease in throughput. The rest of the paper is organized as follows. We start in Section 2 with an overview of the DBMS architecture targeted by our work and the reconfiguration scenarios that are important in this operating environment. We then present Squall in Section 3, discuss the details of data reconfiguration management in Section 4, and outline several optimizations in Section 5. Section 6 outlines how fault tolerance is enabled in Squall. We then provide a thorough evaluation of Squall in Section 7. Finally, we describe related work in Section 8, and conclude in Section 9. 2. BACKGROUND We begin with an overview of the architecture of H-Store, an example of the type of distributed DBMS that we target in this work. We then show how these DBMSs are susceptible to load imbalances and how a naïve migration approach to reconfiguring a database is insufficient. Although we use H-Store in our analysis, our work is applicable to any partitioned, main memory OLTP DBMS. 2.1 H-Store Architecture H-Store is a distributed, row-oriented DBMS that supports serializable execution of transactions over main memory partitions. We define an H-Store instance as a cluster of two or more nodes deployed within the same administrative domain. A node is a single physical computer system that contains a transaction coordinator that manages one or more partitions. H-Store is optimized for the efficient execution of workloads that contain transactions invoked as pre-defined stored procedures. Each stored procedure is comprised of (1) parameterized queries and (2) control code that contains application logic intermixed with invocations of those queries. Client applications initiate transactions by sending the procedure name and input parameters to any node in the cluster. The partition where the transaction’s control code executes is known as its base partition [33]. The base partition ideally will have most (if not all) of the data the transaction needs [32]. Any other partition involved in the transaction that is not the base partition is referred to as a remote partition. As shown in Fig. 1, each partition is assigned a single-threaded execution engine that is responsible for executing transactions and queries for that partition. A partition is protected by a single lock managed by its coordinator that is granted to transactions one-ata-time based on the order of their arrival timestamp [9, 13, 41]. A transaction acquires a partition’s lock if (a) the transaction has the lowest timestamp that is not greater than the one for the last transaction that was granted the lock and (b) it has been at least 5 ms since the transaction first entered the system [37]. This wait time ensures that distributed transactions that send their lock acquisition messages over the network to remote partitions are not starved. We assume that standard clock-skew algorithms are used to keep the nodes’ CPU clocks synchronized. Executing transactions serially at each partition has several advantages for OLTP workloads. In these applications, most transactions only access a single entity in the database. That means that these systems are much faster than a traditional DBMS if the database is partitioned in such a way that most transactions only access a single partition [32]. The downside of this approach, however, is that transactions that need to access data at two or more partitions are slow. If a transaction attempts to access data at a partition that it does not have the lock for, then the DBMS aborts that transaction (releasing all of the locks that it holds), reverts any changes, and then restarts it once the transaction re-acquires all of the locks that it needs again. This removes the need for distributed deadlock detection, resulting in better throughput for short-lived transactions [22]. All data in H-Store is stored in main memory. To ensure that transactions’ modifications are durable and persistent, each node writes asynchronous snapshots of the entire database to disk at fixed intervals [25,37]. In between these snapshots, the DBMS writes out a record to a redo-only command log for each transaction that completes successfully [27]. In addition to snapshots and command logging, main memory databases often use replication to provide durability and high availability. Each partition is fully replicated by another secondary partition that is hosted on a different node [27]. 2.2 Database Partitioning A partition plan for a database in a distributed OLTP DBMS is comprised of (1) partitioned tables, (2) replicated tables, and (3) transaction routing parameters [32]. A table can be horizontally partitioned into disjoint fragments whose boundaries are based on the values of one (or more) of the table’s columns (i.e., partitioning attributes). Alternatively, the DBMS can replicate non-partitioned tables across all partitions. This table-level replication is useful for read-only or read-mostly tables that are accessed together with other tables but do not partition in accordance with other tables. A transaction’s routing parameters identify the transaction’s base partition from its input parameters. Administrators deploy databases using a partition plan that minimizes the number of distributed transactions by collocating the records that are used together often in the same partition [14, 32]. A plan can be implemented in several ways, such as using hash, range, or round-robin partitioning [16]. For this paper, we modi-