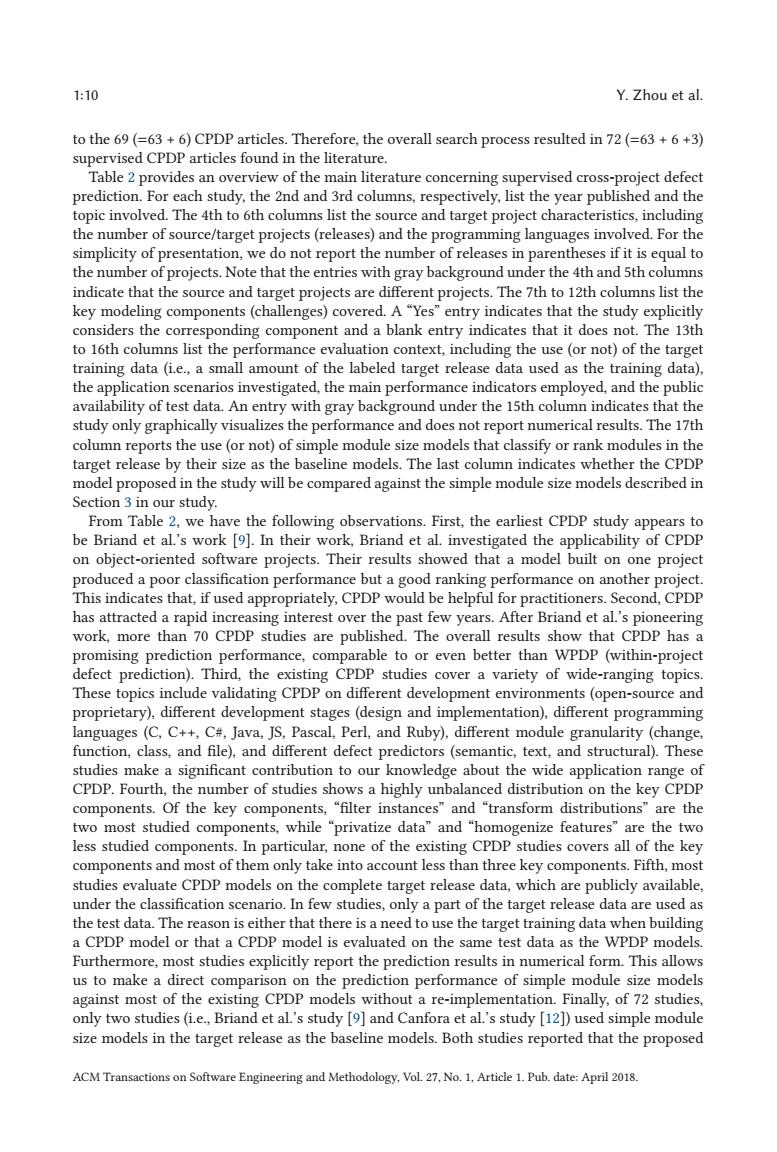

1:10 Y. Zhou et al.

to the 69 (= 63 + 6) CPDP articles. Therefore, the overall search process yielded 72 (= 63 + 6 + 3) supervised CPDP articles from the literature.

Table 2 provides an overview of the main literature on supervised cross-project defect prediction. For each study, the 2nd and 3rd columns list the year of publication and the topic addressed, respectively. The 4th to 6th columns list the characteristics of the source and target projects, including the number of source/target projects (releases) and the programming languages involved. For simplicity of presentation, we omit the number of releases in parentheses when it equals the number of projects. Note that entries with a gray background in the 4th and 5th columns indicate that the source and target projects are different projects. The 7th to 12th columns list the key modeling components (challenges) covered. A “Yes” entry indicates that the study explicitly considers the corresponding component, and a blank entry indicates that it does not.
The 13th to 16th columns list the performance evaluation context: whether target training data are used (i.e., a small amount of labeled target release data used as training data), the application scenarios investigated, the main performance indicators employed, and whether the test data are publicly available. An entry with a gray background in the 15th column indicates that the study only visualizes performance graphically and does not report numerical results. The 17th column indicates whether the study uses, as baseline models, simple module size models that classify or rank modules in the target release by their size. The last column indicates whether, in our study, the CPDP model proposed in that study is compared against the simple module size models described in Section 3.

From Table 2, we make the following observations. First, the earliest CPDP study appears to be Briand et al.’s work [9], which investigated the applicability of CPDP to object-oriented software projects. Their results showed that a model built on one project yielded poor classification performance but good ranking performance on another project. This indicates that, if used appropriately, CPDP could be helpful to practitioners. Second, CPDP has attracted rapidly increasing interest over the past few years. Since Briand et al.’s pioneering work, more than 70 CPDP studies have been published. Overall, their results suggest that CPDP achieves promising prediction performance, comparable to or even better than that of WPDP (within-project defect prediction). Third, the existing CPDP studies cover a wide range of topics.
These topics include validating CPDP across different development environments (open-source and proprietary), development stages (design and implementation), programming languages (C, C++, C#, Java, JS, Pascal, Perl, and Ruby), module granularities (change, function, class, and file), and defect predictors (semantic, text, and structural). These studies have substantially broadened our knowledge of the range of settings in which CPDP can be applied. Fourth, the studies are highly unevenly distributed across the key CPDP components. Of these components, “filter instances” and “transform distributions” are the two most studied, while “privatize data” and “homogenize features” are the two least studied. In particular, none of the existing CPDP studies covers all of the key components, and most of them consider fewer than three. Fifth, most studies evaluate CPDP models under the classification scenario on the complete target release data, which are publicly available. In a few studies, only part of the target release data is used as test data, either because target training data are needed to build the CPDP model or because the CPDP model is evaluated on the same test data as the WPDP models. Furthermore, most studies explicitly report their prediction results in numerical form. This allows us to directly compare the prediction performance of simple module size models against most of the existing CPDP models without re-implementing them. Finally, of the 72 studies, only two (i.e., Briand et al.’s study [9] and Canfora et al.’s study [12]) used simple module size models in the target release as baseline models. Both studies reported that the proposed

ACM Transactions on Software Engineering and Methodology, Vol. 27, No. 1, Article 1. Pub. date: April 2018