
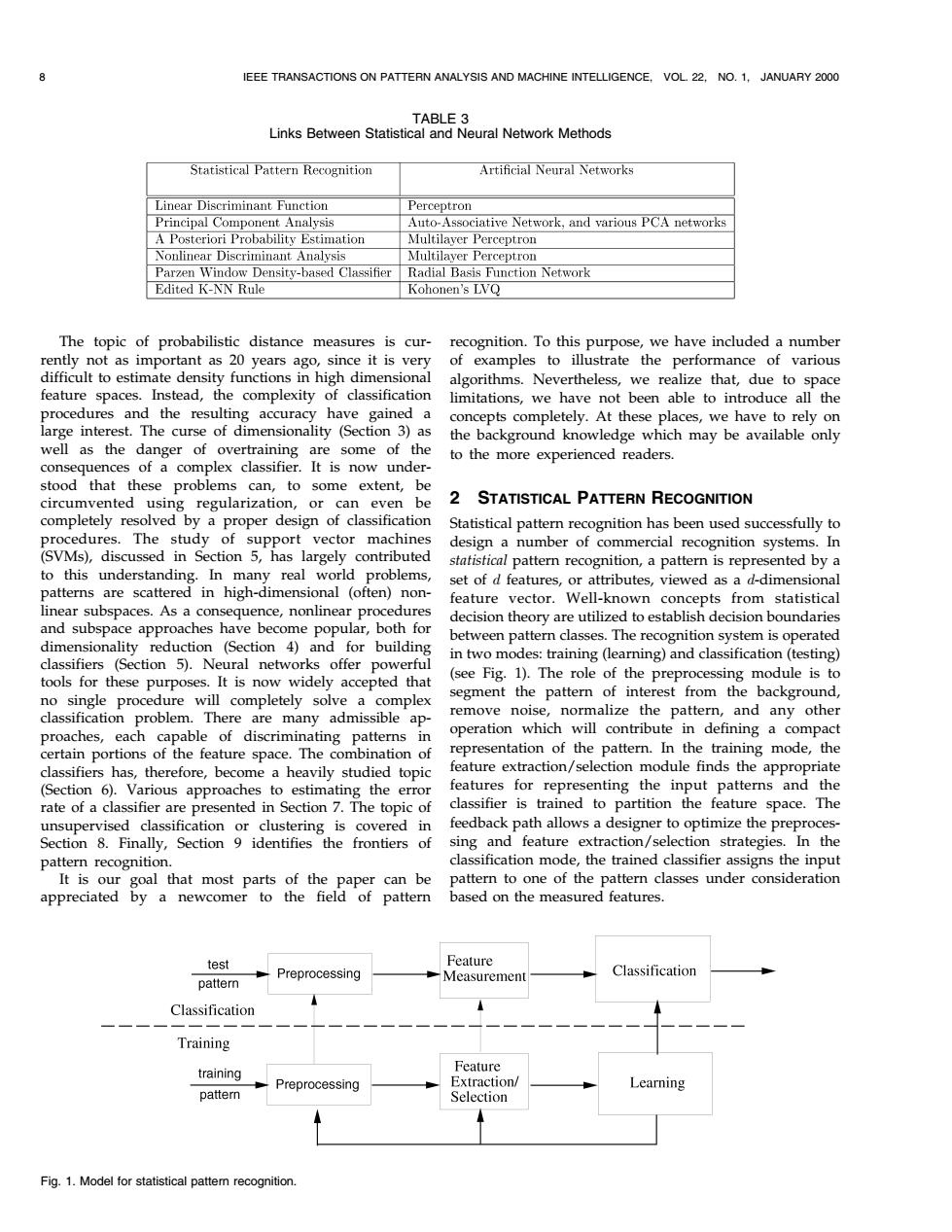

8 IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE, VOL. 22, NO. 1, JANUARY 2000

TABLE 3
Links Between Statistical and Neural Network Methods

| Statistical Pattern Recognition | Artificial Neural Networks |
|---|---|
| Linear Discriminant Function | Perceptron |
| Principal Component Analysis | Auto-Associative Network, and various PCA networks |
| A Posteriori Probability Estimation | Multilayer Perceptron |
| Nonlinear Discriminant Analysis | Multilayer Perceptron |
| Parzen Window Density-based Classifier | Radial Basis Function Network |
| Edited K-NN Rule | Kohonen's LVQ |

The topic of probabilistic distance measures is currently not as important as it was 20 years ago, since it is very difficult to estimate density functions in high-dimensional feature spaces. Instead, the complexity of classification procedures and the resulting accuracy have attracted considerable interest. The curse of dimensionality (Section 3) and the danger of overtraining are among the consequences of a complex classifier. It is now understood that these problems can, to some extent, be circumvented using regularization, or can even be completely resolved by a proper design of classification procedures. The study of support vector machines (SVMs), discussed in Section 5, has contributed greatly to this understanding. In many real-world problems, patterns are scattered in high-dimensional, often nonlinear, subspaces. As a consequence, nonlinear procedures and subspace approaches have become popular, both for dimensionality reduction (Section 4) and for building classifiers (Section 5). Neural networks offer powerful tools for these purposes. It is now widely accepted that no single procedure will completely solve a complex classification problem.
There are many admissible approaches, each capable of discriminating patterns in certain portions of the feature space. The combination of classifiers has, therefore, become a heavily studied topic (Section 6). Various approaches to estimating the error rate of a classifier are presented in Section 7. The topic of unsupervised classification, or clustering, is covered in Section 8. Finally, Section 9 identifies the frontiers of pattern recognition.

It is our goal that most parts of the paper can be appreciated by a newcomer to the field of pattern recognition. To this end, we have included a number of examples to illustrate the performance of various algorithms. Nevertheless, we realize that, due to space limitations, we have not been able to introduce all the concepts completely. In those places, we have to rely on background knowledge that may be available only to more experienced readers.

2 STATISTICAL PATTERN RECOGNITION

Statistical pattern recognition has been used successfully to design a number of commercial recognition systems. In statistical pattern recognition, a pattern is represented by a set of d features, or attributes, viewed as a d-dimensional feature vector. Well-known concepts from statistical decision theory are utilized to establish decision boundaries between pattern classes. The recognition system is operated in two modes: training (learning) and classification (testing) (see Fig. 1). The role of the preprocessing module is to segment the pattern of interest from the background, remove noise, normalize the pattern, and perform any other operation that contributes to defining a compact representation of the pattern. In the training mode, the feature extraction/selection module finds the appropriate features for representing the input patterns, and the classifier is trained to partition the feature space. The feedback path allows a designer to optimize the preprocessing and feature extraction/selection strategies.
In the classification mode, the trained classifier assigns the input pattern to one of the pattern classes under consideration based on the measured features.

Fig. 1. Model for statistical pattern recognition. [Figure: in the classification (testing) mode, a test pattern passes through Preprocessing, Feature Measurement, and Classification; in the training mode, a training pattern passes through Preprocessing, Feature Extraction/Selection, and Learning.]
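The two operating modes of Fig. 1 can be illustrated with a short Python sketch. It is a minimal example under assumptions that do not come from the paper: z-score normalization stands in for the preprocessing module, a Fisher-style ratio (variance of the class means over the average within-class variance) ranks features for selection, and a nearest-mean rule plays the role of the classifier. The function names (`fit_preprocessing`, `select_features`, `train`, `classify`) and the toy data are hypothetical, and segmentation, noise removal, and the designer's feedback path are not modeled.

```python
from statistics import mean, pstdev


def fit_preprocessing(patterns):
    """Training mode: estimate per-feature mean and spread for z-score normalization."""
    d = len(patterns[0])
    mus = [mean([p[j] for p in patterns]) for j in range(d)]
    # A constant feature gets spread 1.0 to avoid dividing by zero.
    sds = [pstdev([p[j] for p in patterns]) or 1.0 for j in range(d)]
    return mus, sds


def preprocess(pattern, params):
    """Normalize one pattern with statistics estimated during training."""
    mus, sds = params
    return [(x - m) / s for x, m, s in zip(pattern, mus, sds)]


def select_features(patterns, labels, k):
    """Keep the k features with the largest Fisher-style ratio
    (assumed criterion): variance of class means / mean within-class variance."""
    classes = sorted(set(labels))
    scores = []
    for j in range(len(patterns[0])):
        groups = [[p[j] for p, y in zip(patterns, labels) if y == c] for c in classes]
        between = pstdev([mean(g) for g in groups]) ** 2
        within = mean([pstdev(g) ** 2 for g in groups])
        scores.append(between / (within + 1e-12))
    ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    return sorted(ranked[:k])


def train(patterns, labels, k):
    """Training mode: preprocess, select features, learn one mean vector per class."""
    params = fit_preprocessing(patterns)
    z = [preprocess(p, params) for p in patterns]
    feats = select_features(z, labels, k)
    reduced = [[p[j] for j in feats] for p in z]
    means = {}
    for c in sorted(set(labels)):
        members = [r for r, y in zip(reduced, labels) if y == c]
        means[c] = [mean(col) for col in zip(*members)]
    return params, feats, means


def classify(pattern, model):
    """Classification mode: same preprocessing, measure only the selected
    features, assign the class whose mean is nearest (squared Euclidean)."""
    params, feats, means = model
    z = preprocess(pattern, params)
    x = [z[j] for j in feats]
    return min(means, key=lambda c: sum((a - b) ** 2 for a, b in zip(x, means[c])))


if __name__ == "__main__":
    # Hypothetical toy data: only feature 0 separates classes "a" and "b".
    train_x = [[1.0, 5.0, 0.1], [1.2, 4.0, 0.2], [0.9, 6.0, 0.1],
               [3.0, 5.5, 0.2], [3.2, 4.5, 0.1], [2.9, 5.0, 0.2]]
    train_y = ["a", "a", "a", "b", "b", "b"]
    model = train(train_x, train_y, k=1)
    print(model[1])                             # [0]
    print(classify([1.1, 5.0, 0.15], model))    # a
    print(classify([3.1, 5.0, 0.15], model))    # b
```

The design point the sketch is meant to show is that `classify` reuses the normalization statistics, selected feature indices, and class means estimated in training mode, so that a test pattern is mapped into the same feature space the classifier was trained to partition.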