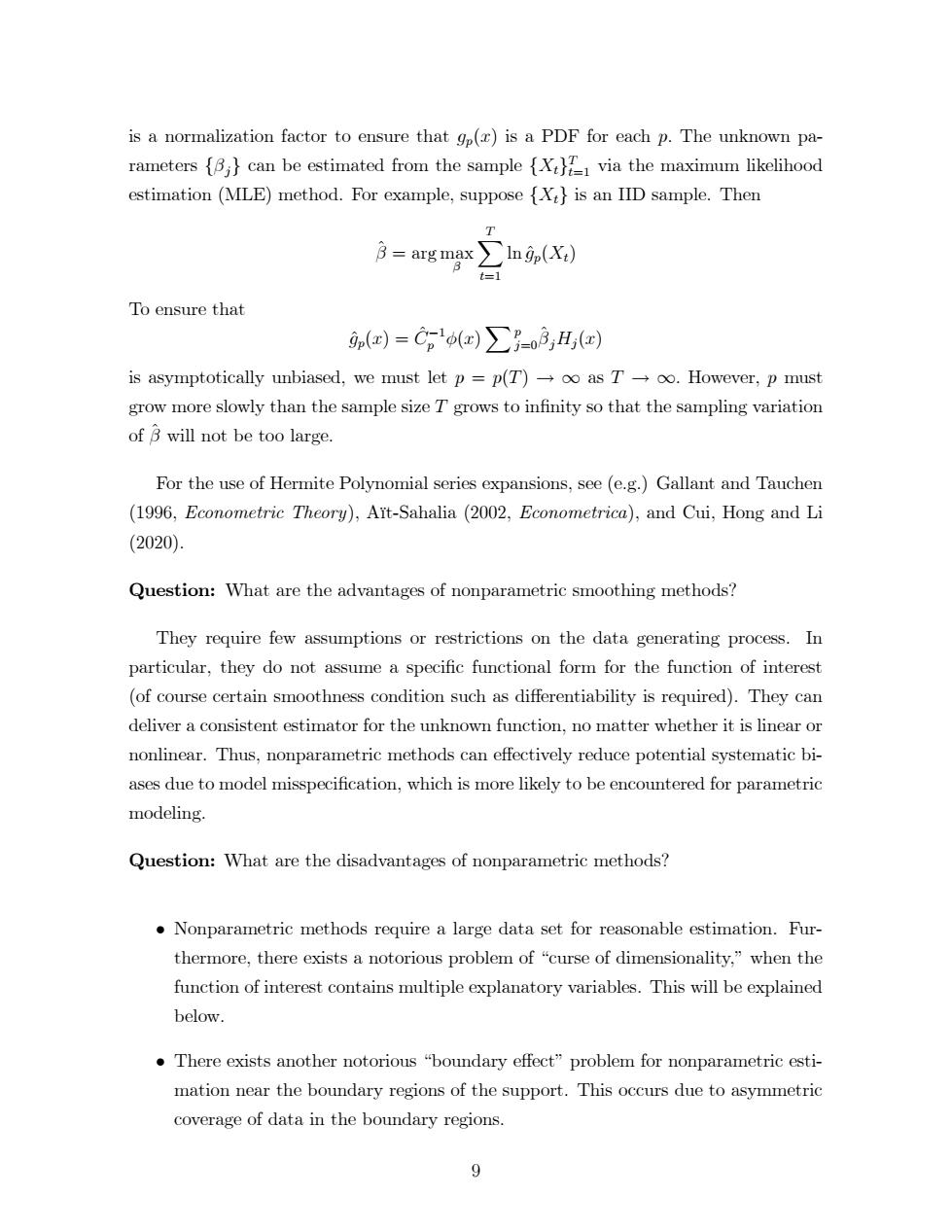

is a normalization factor to ensure that $g_p(x)$ is a PDF for each $p$. The unknown parameters $\{\beta_j\}$ can be estimated from the sample $\{X_t\}_{t=1}^{T}$ via the maximum likelihood estimation (MLE) method. For example, suppose $\{X_t\}$ is an IID sample. Then

$$\hat{\beta} = \arg\max_{\beta} \sum_{t=1}^{T} \ln \hat{g}_p(X_t).$$

To ensure that

$$\hat{g}_p(x) = \hat{C}_p^{-1} \phi(x) \sum_{j=0}^{p} \hat{\beta}_j H_j(x)$$

is asymptotically unbiased, we must let $p = p(T) \to \infty$ as $T \to \infty$. However, $p$ must grow more slowly than the sample size $T$ so that the sampling variation of $\hat{\beta}$ will not be too large.

For the use of Hermite polynomial series expansions, see, e.g., Gallant and Tauchen (1996, Econometric Theory), Aït-Sahalia (2002, Econometrica), and Cui, Hong and Li (2020).

Question: What are the advantages of nonparametric smoothing methods?

They require few assumptions or restrictions on the data generating process. In particular, they do not assume a specific functional form for the function of interest (of course, certain smoothness conditions such as differentiability are required). They can deliver a consistent estimator for the unknown function, whether it is linear or nonlinear. Thus, nonparametric methods can effectively reduce potential systematic biases due to model misspecification, which is more likely to arise in parametric modeling.

Question: What are the disadvantages of nonparametric methods?

Nonparametric methods require a large data set for reasonable estimation. Furthermore, there exists a notorious "curse of dimensionality" problem when the function of interest contains multiple explanatory variables. This will be explained below.

There exists another notorious problem, the "boundary effect," for nonparametric estimation near the boundary regions of the support. This occurs due to asymmetric coverage of data in the boundary regions.
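The boundary effect described above can be illustrated with a small simulation. The sketch below is not taken from the text: it assumes a Gaussian kernel density estimator (one common smoothing method), an IID Uniform(0, 1) sample, and a hand-picked bandwidth `h`; the function names `gaussian_kernel` and `kernel_density` are illustrative. At the boundary point 0, observations lie on only one side, so about half of the kernel's mass falls where there are no data.

```python
import math
import random


def gaussian_kernel(u):
    """Standard normal density, used here as the kernel K(u)."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def kernel_density(x, sample, h):
    """Kernel density estimate at x: (1 / (T * h)) * sum_t K((x - X_t) / h)."""
    total = sum(gaussian_kernel((x - xt) / h) for xt in sample)
    return total / (len(sample) * h)


# Assumed setup: IID Uniform(0, 1) data, so the true density is 1 on [0, 1].
rng = random.Random(0)
T = 20000
sample = [rng.random() for _ in range(T)]
h = 0.05  # bandwidth chosen by hand for illustration only

g_boundary = kernel_density(0.0, sample, h)  # data only to the right of x = 0
g_interior = kernel_density(0.5, sample, h)  # data on both sides of x = 0.5

print("estimate at boundary x = 0.0:", round(g_boundary, 3))
print("estimate at interior x = 0.5:", round(g_interior, 3))
```

Under these assumptions the expected value of the estimate at 0 is about one half of the true density, while the interior estimate is close to 1. The gap does not shrink as `T` grows with `h` fixed, because it comes from the one-sided coverage of the data rather than from sampling noise.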