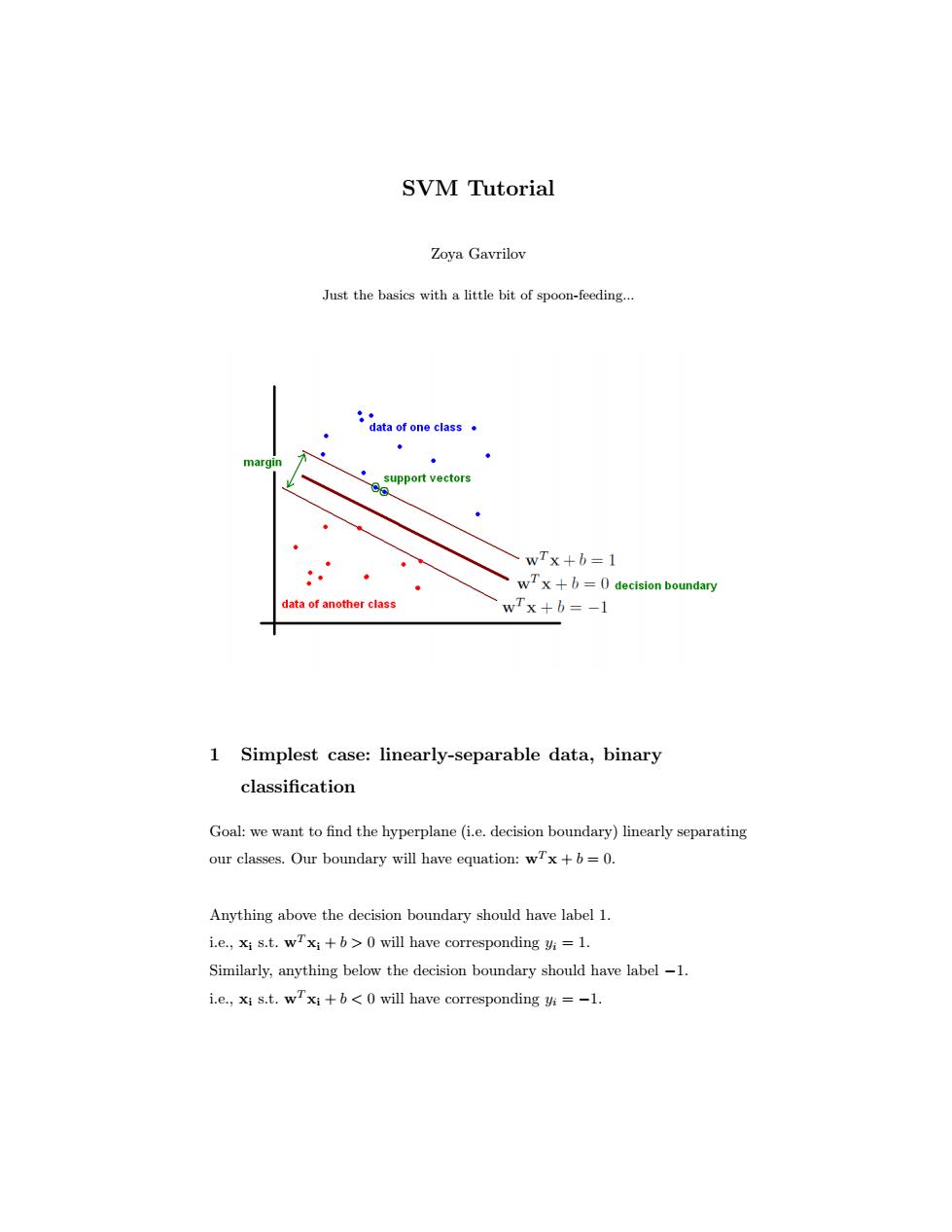

SVM Tutorial

Zoya Gavrilov

Just the basics with a little bit of spoon-feeding...

[Figure labels: data of one class; data of another class; margin; support vectors; decision boundary $w^T x + b = 0$; $w^T x + b = 1$; $w^T x + b = -1$]

1 Simplest case: linearly-separable data, binary classification

Goal: we want to find the hyperplane (i.e. decision boundary) linearly separating our classes. Our boundary will have equation: $w^T x + b = 0$.

Anything above the decision boundary should have label 1, i.e., $x_i$ s.t. $w^T x_i + b > 0$ will have corresponding $y_i = 1$.

Similarly, anything below the decision boundary should have label $-1$, i.e., $x_i$ s.t. $w^T x_i + b < 0$ will have corresponding $y_i = -1$.
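
The labeling rule above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the helper names `decision_value` and `predict_label`, the weight vector, the bias, and the sample points are all hypothetical and chosen by hand, not learned by an SVM. Returning 0 for a point lying exactly on the boundary is also an assumption, since the text assigns labels only to points strictly above or below it.

```python
def decision_value(w, x, b):
    """Return w^T x + b for weight vector w, point x, and bias b."""
    return sum(w_j * x_j for w_j, x_j in zip(w, x)) + b


def predict_label(w, x, b):
    """Return 1 if w^T x + b > 0, -1 if w^T x + b < 0."""
    value = decision_value(w, x, b)
    if value > 0:
        return 1
    if value < 0:
        return -1
    # Assumption: a point exactly on the boundary gets no class label.
    return 0


# Hypothetical 2-D hyperplane x1 + x2 - 3 = 0 (hand-picked, not trained).
w = [1.0, 1.0]
b = -3.0

# Three illustrative points: above, below, and on the boundary.
points = [[3.0, 2.0], [0.5, 1.0], [1.0, 2.0]]
labels = [predict_label(w, x, b) for x in points]
print(labels)  # [1, -1, 0]
```

Here the first point gives $w^T x + b = 2 > 0$ and is labeled 1, the second gives $-1.5 < 0$ and is labeled $-1$, and the third lies exactly on the hyperplane.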