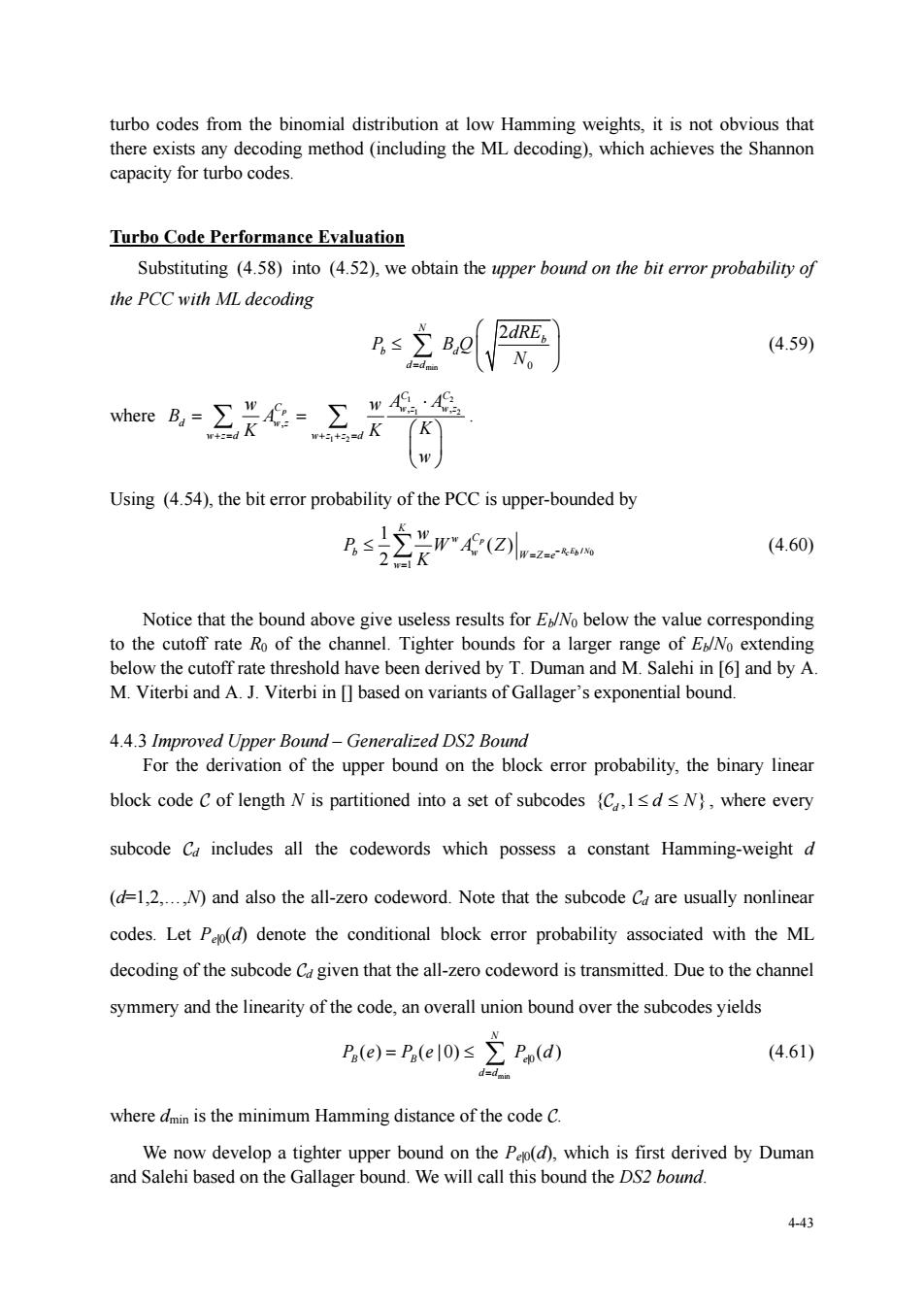

turbo codes from the binomial distribution at low Hamming weights, it is not obvious that there exists any decoding method (including the ML decoding), which achieves the Shannon capacity for turbo codes.
Turbo Code Performance Evaluation

Substituting (4.58) into (4.52), we obtain the upper bound on the bit error probability of the PCC with ML decoding

$$P_b \le \sum_{d=d_{\min}}^{N} B_d \, Q\!\left(\sqrt{\frac{2 d R E_b}{N_0}}\right) \tag{4.59}$$

where

$$B_d = \sum_{w+z=d} \frac{w}{K}\, A^{C_p}_{w,z} = \sum_{w+z_1+z_2=d} \frac{w}{K}\, \frac{A^{C_1}_{w,z_1}\, A^{C_2}_{w,z_2}}{\binom{K}{w}}.$$

Using (4.54), the bit error probability of the PCC is upper-bounded by

$$P_b \le \frac{1}{2} \sum_{w=1}^{K} \frac{w}{K}\, W^{w} A^{C_p}_{w}(Z) \,\Bigg|_{W=Z=e^{-R E_b/N_0}} \tag{4.60}$$

Notice that the bound above gives useless results for $E_b/N_0$ below the value corresponding to the cutoff rate $R_0$ of the channel. Tighter bounds that remain useful over a larger range of $E_b/N_0$, extending below the cutoff rate threshold, have been derived by T. Duman and M. Salehi in [6] and by A. M. Viterbi and A. J. Viterbi in [], based on variants of Gallager's exponential bound.

4.4.3 Improved Upper Bound – Generalized DS2 Bound

For the derivation of the upper bound on the block error probability, the binary linear block code $\mathcal{C}$ of length $N$ is partitioned into a set of subcodes $\{\mathcal{C}_d,\ 1 \le d \le N\}$, where every subcode $\mathcal{C}_d$ includes all the codewords that have a constant Hamming weight $d$ ($d = 1, 2, \ldots, N$) and also the all-zero codeword. Note that the subcodes $\mathcal{C}_d$ are usually nonlinear codes. Let $P_{e|0}(d)$ denote the conditional block error probability associated with ML decoding of the subcode $\mathcal{C}_d$, given that the all-zero codeword is transmitted. Due to the channel symmetry and the linearity of the code, an overall union bound over the subcodes yields

$$P_B(e) = P_B(e \mid 0) \le \sum_{d=d_{\min}}^{N} P_{e|0}(d) \tag{4.61}$$

where $d_{\min}$ is the minimum Hamming distance of the code $\mathcal{C}$.

We now develop a tighter upper bound on $P_{e|0}(d)$, which was first derived by Duman and Salehi based on the Gallager bound. We will call this bound the DS2 bound.
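As a numerical illustration of the union bound (4.59), the following minimal Python sketch evaluates the sum of B_d Q(sqrt(2 d R E_b/N_0)) for a given set of error coefficients. It also evaluates the looser exponential form obtained from the standard inequality Q(x) ≤ (1/2) exp(-x²/2), which is assumed here to be the step that leads from (4.59) to (4.60). The function names, the coefficient values in `EXAMPLE_SPECTRUM`, and the rate 1/3 are hypothetical and chosen only for illustration; they are not taken from the text. Because the example spectrum is truncated to a few terms, the result approximates the bound rather than giving a strict bound for any particular code.

```python
import math


def q_function(x):
    """Gaussian tail probability Q(x) = 0.5 * erfc(x / sqrt(2))."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def union_bound_ber(spectrum, rate, ebn0_db):
    """Evaluate sum_d B_d * Q(sqrt(2 * d * R * Eb/N0)), the form of (4.59).

    spectrum: dict mapping Hamming weight d to error coefficient B_d
    rate:     code rate R
    ebn0_db:  Eb/N0 in decibels
    """
    ebn0 = 10.0 ** (ebn0_db / 10.0)  # convert dB to a linear ratio
    return sum(b_d * q_function(math.sqrt(2.0 * d * rate * ebn0))
               for d, b_d in spectrum.items())


def exponential_bound_ber(spectrum, rate, ebn0_db):
    """Looser bound using Q(x) <= 0.5 * exp(-x^2 / 2) on every term."""
    ebn0 = 10.0 ** (ebn0_db / 10.0)
    return sum(0.5 * b_d * math.exp(-d * rate * ebn0)
               for d, b_d in spectrum.items())


# Hypothetical error coefficients B_d (illustrative values only).
EXAMPLE_SPECTRUM = {6: 0.003, 8: 0.01, 10: 0.05}
EXAMPLE_RATE = 1.0 / 3.0

if __name__ == "__main__":
    for snr_db in (1.0, 2.0, 3.0):
        ub = union_bound_ber(EXAMPLE_SPECTRUM, EXAMPLE_RATE, snr_db)
        eb = exponential_bound_ber(EXAMPLE_SPECTRUM, EXAMPLE_RATE, snr_db)
        print(f"Eb/N0 = {snr_db:.1f} dB: Q-form {ub:.3e}, exponential form {eb:.3e}")
```

The exponential form is never smaller than the Q-function form, since each term is bounded individually. That makes the Q-function form the tighter of the two whenever the coefficients B_d are available.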