
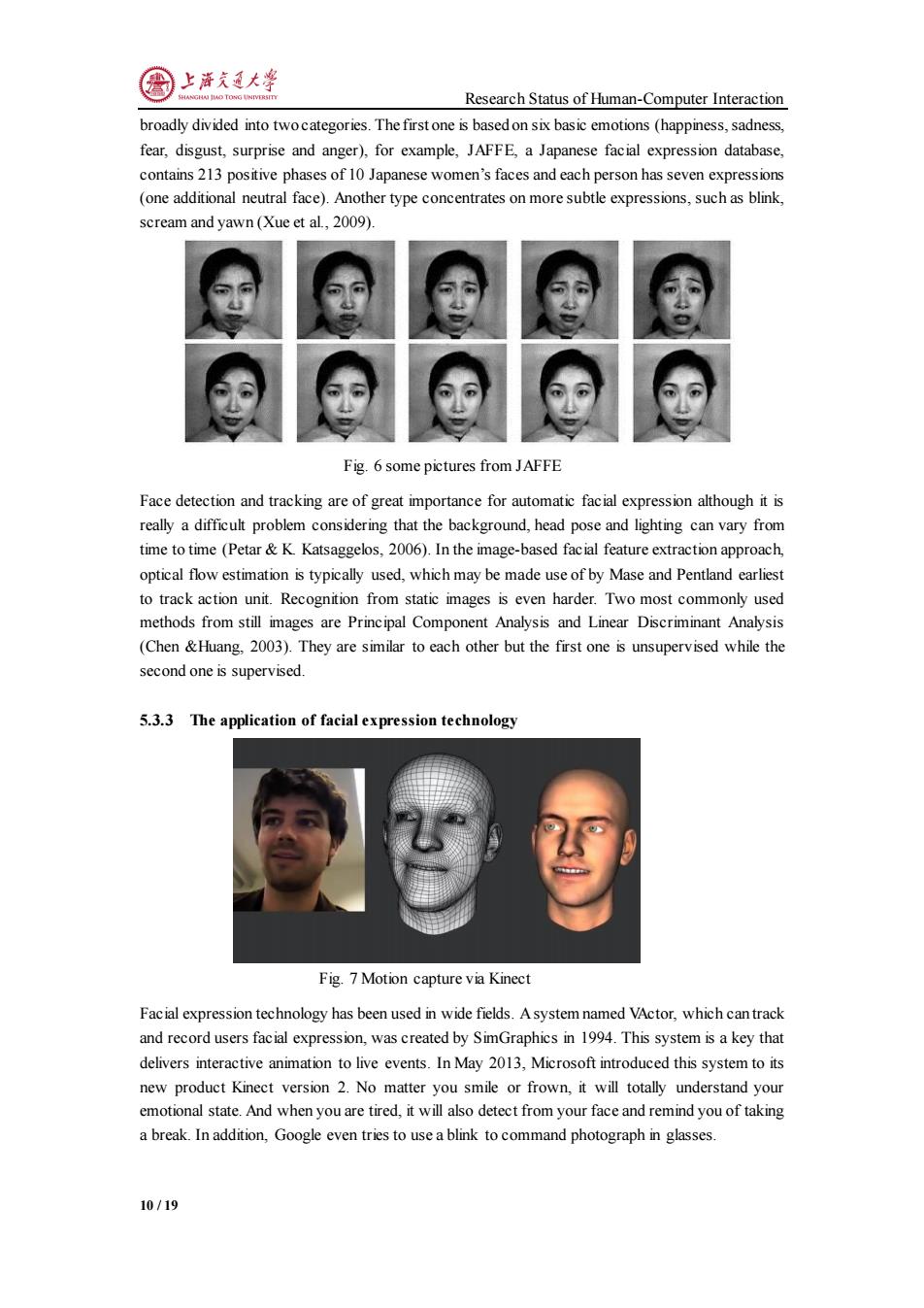

Shanghai Jiao Tong University | Research Status of Human-Computer Interaction

broadly divided into two categories.
The first category is based on the six basic emotions (happiness, sadness, fear, disgust, surprise and anger). For example, JAFFE, a Japanese facial expression database, contains 213 frontal images of 10 Japanese women, each posing seven expressions (the six basic emotions plus one neutral face). The second category concentrates on more subtle expressions, such as blinking, screaming and yawning (Xue et al., 2009).

Fig. 6 Some pictures from JAFFE

Face detection and tracking are essential for automatic facial expression recognition, yet they remain difficult because the background, head pose and lighting can vary over time (Petar & K. Katsaggelos, 2006). In image-based facial feature extraction, optical flow estimation is typically used; Mase and Pentland may have been the first to apply it to tracking facial action units. Recognition from static images is even harder. The two most commonly used methods for still images are Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA) (Chen & Huang, 2003). Both project face images onto a low-dimensional subspace, but PCA is unsupervised and keeps the directions of greatest variance, whereas LDA is supervised and uses the class labels to find the directions that best separate the expression classes.

5.3.3 The application of facial expression technology

Fig. 7 Motion capture via Kinect

Facial expression technology has been applied in a wide range of fields. A system named VActor, which can track and record a user's facial expressions, was created by SimGraphics in 1994 and was used to deliver interactive animation at live events. In May 2013, Microsoft brought this kind of facial tracking to its new Kinect version 2 sensor: whether the user smiles or frowns, the sensor is designed to recognize the user's emotional state, and when the user looks tired, it can detect this from the face and suggest taking a break. Google has even experimented with letting users take a photograph with Google Glass by blinking.
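The difference between PCA (unsupervised) and LDA (supervised) for still-image recognition can be illustrated with a minimal sketch. It assumes hypothetical two-dimensional feature vectors instead of real face images (in practice each image would be flattened into a high-dimensional pixel vector) and uses two-class Fisher LDA. The toy data and the helper names `pca_direction` and `lda_direction` are made up for illustration and are not taken from the cited work. PCA never sees the labels and returns the direction of largest variance; LDA uses the labels and returns the direction that best separates the two classes.

```python
import math


def mean(points):
    """Component-wise mean of a list of (x, y) points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def scatter(points, m):
    """Entries (sxx, sxy, syy) of the 2x2 scatter matrix sum (p - m)(p - m)^T."""
    sxx = sum((p[0] - m[0]) ** 2 for p in points)
    sxy = sum((p[0] - m[0]) * (p[1] - m[1]) for p in points)
    syy = sum((p[1] - m[1]) ** 2 for p in points)
    return sxx, sxy, syy


def normalize(v):
    """Scale a 2-D vector to unit length."""
    norm = math.hypot(v[0], v[1])
    if norm == 0:
        raise ValueError("direction is undefined for this data")
    return (v[0] / norm, v[1] / norm)


def pca_direction(points):
    """Unsupervised: leading eigenvector of the scatter matrix (labels unused)."""
    sxx, sxy, syy = scatter(points, mean(points))
    # Largest eigenvalue of the symmetric matrix [[sxx, sxy], [sxy, syy]].
    lam = (sxx + syy) / 2 + math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    # Use whichever form of the matching eigenvector is numerically stable.
    if sxx >= syy:
        return normalize((lam - syy, sxy))
    return normalize((sxy, lam - sxx))


def lda_direction(class0, class1):
    """Supervised two-class Fisher LDA: w = Sw^-1 (m1 - m0).

    Sw is the within-class scatter; this sketch assumes Sw is invertible.
    """
    m0, m1 = mean(class0), mean(class1)
    a0, b0, c0 = scatter(class0, m0)
    a1, b1, c1 = scatter(class1, m1)
    a, b, c = a0 + a1, b0 + b1, c0 + c1
    det = a * c - b * b
    dx, dy = m1[0] - m0[0], m1[1] - m0[1]
    # Inverse of [[a, b], [b, c]] is [[c, -b], [-b, a]] / det.
    return normalize(((c * dx - b * dy) / det, (-b * dx + a * dy) / det))


# Hypothetical toy features: both classes spread widely along x, differ only in y.
class0 = [(float(x), 0.3 if x % 2 == 0 else -0.3) for x in range(-5, 6)]
class1 = [(x, y + 2.0) for x, y in class0]

print("PCA direction:", pca_direction(class0 + class1))
print("LDA direction:", lda_direction(class0, class1))
```

On this toy data PCA returns a direction close to the x-axis, because that is where the pooled variance is largest, while LDA returns a direction close to the y-axis, the only axis along which the two classes differ. This is the practical consequence of the unsupervised/supervised distinction: the highest-variance direction is not necessarily the one that best separates expressions.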