正在加载图片...

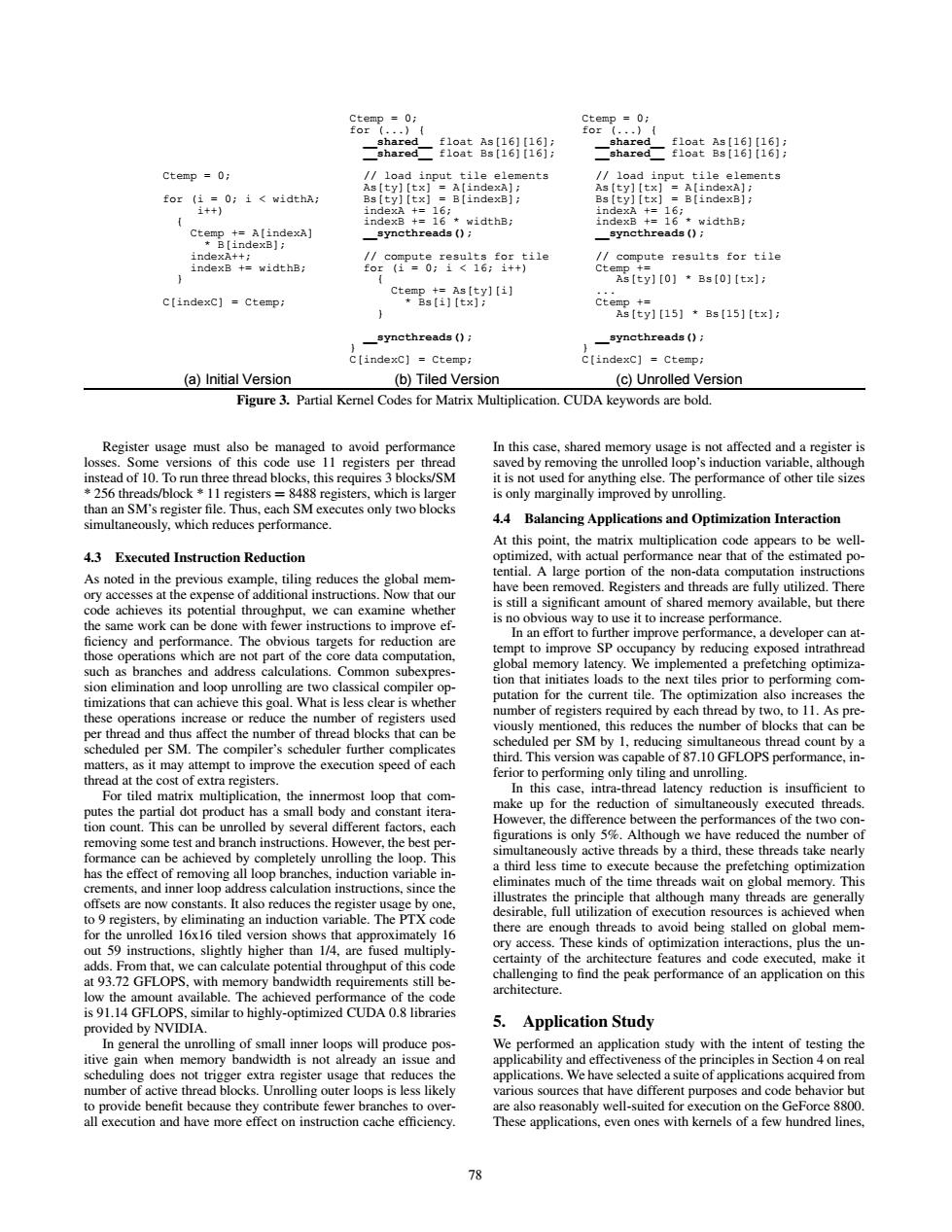

Ctemp 0; Ctemp 0; for( for( shared f1 oat As[16]【16]: f1 oat As[16][16]: shared f1 oat Bs[16][16]: shared f1 oat Bs[161[16]: Ctemp =0; /load input tile elements /load input tile elements As[ty][tx】 As[ty][tx] =A【indexA] for (i=0;i<widthA; Bs[ty][tx]B[indexB]; Bs[ty][tx]=B[indexBj; i++) indexA +=16; indexA +=16; indexB+=16 widthB; indexB +=16 widthB; syncthreads(); syncthreads(); indexA++; /compute results for tile /compute results for tile indexB +widthB; for(i=0:i<16:i++) CteD+= As[ty][0]Bs[0][tx]; c[indexC]Ctemp; Ctemt:,Asty][i】 Bs[i][tx]; Ctemp+= As[ty][15]+Bs[15][tx]: _syncthreads () _syncthreads(); c[indexC】=Ctemp; c[indexc】=Ctempi (a)Initial Version (b)Tiled Version (c)Unrolled Version Figure 3.Partial Kernel Codes for Matrix Multiplication.CUDA keywords are bold. Register usage must also be managed to avoid performance In this case,shared memory usage is not affected and a register is losses.Some versions of this code use 11 registers per thread saved by removing the unrolled loop's induction variable,although instead of 10.To run three thread blocks,this requires 3 blocks/SM it is not used for anything else.The performance of other tile sizes *256 threads/block 11 registers =8488 registers,which is larger is only marginally improved by unrolling. than an SM's register file.Thus,each SM executes only two blocks simultaneously,which reduces performance. 4.4 Balancing Applications and Optimization Interaction At this point,the matrix multiplication code appears to be well- 4.3 Executed Instruction Reduction optimized,with actual performance near that of the estimated po- As noted in the previous example,tiling reduces the global mem- tential.A large portion of the non-data computation instructions ory accesses at the expense of additional instructions.Now that our have been removed.Registers and threads are fully utilized.There is still a significant amount of shared memory available,but there code achieves its potential throughput,we can examine whether the same work can be done with fewer instructions to improve ef- is no obvious way to use it to increase performance. In an effort to further improve performance,a developer can at- ficiency and performance.The obvious targets for reduction are those operations which are not part of the core data computation, tempt to improve SP occupancy by reducing exposed intrathread such as branches and address calculations.Common subexpres- global memory latency.We implemented a prefetching optimiza- sion elimination and loop unrolling are two classical compiler op- tion that initiates loads to the next tiles prior to performing com- timizations that can achieve this goal.What is less clear is whether putation for the current tile.The optimization also increases the these operations increase or reduce the number of registers used number of registers required by each thread by two,to 11.As pre viously mentioned,this reduces the number of blocks that can be per thread and thus affect the number of thread blocks that can be scheduled per SM.The compiler's scheduler further complicates scheduled per SM by 1,reducing simultaneous thread count by a third.This version was capable of 87.10 GFLOPS performance,in- matters,as it may attempt to improve the execution speed of each ferior to performing only tiling and unrolling. thread at the cost of extra registers. In this case,intra-thread latency reduction is insufficient to For tiled matrix multiplication,the innermost loop that com- putes the partial dot product has a small body and constant itera- make up for the reduction of simultaneously executed threads. tion count.This can be unrolled by several different factors,each However,the difference between the performances of the two con- removing some test and branch instructions.However,the best per- figurations is only 5%.Although we have reduced the number of formance can be achieved by completely unrolling the loop.This simultaneously active threads by a third,these threads take nearly has the effect of removing all loop branches,induction variable in- a third less time to execute because the prefetching optimization crements,and inner loop address calculation instructions,since the eliminates much of the time threads wait on global memory.This offsets are now constants.It also reduces the register usage by one, illustrates the principle that although many threads are generally desirable,full utilization of execution resources is achieved when to 9 registers,by eliminating an induction variable.The PTX code for the unrolled 16x16 tiled version shows that approximately 16 there are enough threads to avoid being stalled on global mem- out 59 instructions,slightly higher than 1/4,are fused multiply- ory access.These kinds of optimization interactions,plus the un- certainty of the architecture features and code executed,make it adds.From that,we can calculate potential throughput of this code at 93.72 GFLOPS,with memory bandwidth requirements still be- challenging to find the peak performance of an application on this architecture. low the amount available.The achieved performance of the code is 91.14 GFLOPS,similar to highly-optimized CUDA 0.8 libraries provided by NVIDIA. 5. Application Study In general the unrolling of small inner loops will produce pos- We performed an application study with the intent of testing the itive gain when memory bandwidth is not already an issue and applicability and effectiveness of the principles in Section 4 on real scheduling does not trigger extra register usage that reduces the applications.We have selected a suite of applications acquired from number of active thread blocks.Unrolling outer loops is less likely various sources that have different purposes and code behavior but to provide benefit because they contribute fewer branches to over- are also reasonably well-suited for execution on the GeForce 8800 all execution and have more effect on instruction cache efficiency. These applications,even ones with kernels of a few hundred lines 78Ctemp = 0; for (i = 0; i < widthA; i++) { Ctemp += A[indexA] * B[indexB]; indexA++; indexB += widthB; } C[indexC] = Ctemp; Ctemp = 0; for (...) { __shared__ float As[16][16]; __shared__ float Bs[16][16]; // load input tile elements As[ty][tx] = A[indexA]; Bs[ty][tx] = B[indexB]; indexA += 16; indexB += 16 * widthB; __syncthreads(); // compute results for tile for (i = 0; i < 16; i++) { Ctemp += As[ty][i] * Bs[i][tx]; } __syncthreads(); } C[indexC] = Ctemp; Ctemp = 0; for (...) { __shared__ float As[16][16]; __shared__ float Bs[16][16]; // load input tile elements As[ty][tx] = A[indexA]; Bs[ty][tx] = B[indexB]; indexA += 16; indexB += 16 * widthB; __syncthreads(); // compute results for tile Ctemp += As[ty][0] * Bs[0][tx]; ... Ctemp += As[ty][15] * Bs[15][tx]; __syncthreads(); } C[indexC] = Ctemp; (a) Initial Version (b) Tiled Version (c) Unrolled Version Figure 3. Partial Kernel Codes for Matrix Multiplication. CUDA keywords are bold. Register usage must also be managed to avoid performance losses. Some versions of this code use 11 registers per thread instead of 10. To run three thread blocks, this requires 3 blocks/SM * 256 threads/block * 11 registers = 8488 registers, which is larger than an SM’s register file. Thus, each SM executes only two blocks simultaneously, which reduces performance. 4.3 Executed Instruction Reduction As noted in the previous example, tiling reduces the global memory accesses at the expense of additional instructions. Now that our code achieves its potential throughput, we can examine whether the same work can be done with fewer instructions to improve ef- ficiency and performance. The obvious targets for reduction are those operations which are not part of the core data computation, such as branches and address calculations. Common subexpression elimination and loop unrolling are two classical compiler optimizations that can achieve this goal. What is less clear is whether these operations increase or reduce the number of registers used per thread and thus affect the number of thread blocks that can be scheduled per SM. The compiler’s scheduler further complicates matters, as it may attempt to improve the execution speed of each thread at the cost of extra registers. For tiled matrix multiplication, the innermost loop that computes the partial dot product has a small body and constant iteration count. This can be unrolled by several different factors, each removing some test and branch instructions. However, the best performance can be achieved by completely unrolling the loop. This has the effect of removing all loop branches, induction variable increments, and inner loop address calculation instructions, since the offsets are now constants. It also reduces the register usage by one, to 9 registers, by eliminating an induction variable. The PTX code for the unrolled 16x16 tiled version shows that approximately 16 out 59 instructions, slightly higher than 1/4, are fused multiplyadds. From that, we can calculate potential throughput of this code at 93.72 GFLOPS, with memory bandwidth requirements still below the amount available. The achieved performance of the code is 91.14 GFLOPS, similar to highly-optimized CUDA 0.8 libraries provided by NVIDIA. In general the unrolling of small inner loops will produce positive gain when memory bandwidth is not already an issue and scheduling does not trigger extra register usage that reduces the number of active thread blocks. Unrolling outer loops is less likely to provide benefit because they contribute fewer branches to overall execution and have more effect on instruction cache efficiency. In this case, shared memory usage is not affected and a register is saved by removing the unrolled loop’s induction variable, although it is not used for anything else. The performance of other tile sizes is only marginally improved by unrolling. 4.4 Balancing Applications and Optimization Interaction At this point, the matrix multiplication code appears to be welloptimized, with actual performance near that of the estimated potential. A large portion of the non-data computation instructions have been removed. Registers and threads are fully utilized. There is still a significant amount of shared memory available, but there is no obvious way to use it to increase performance. In an effort to further improve performance, a developer can attempt to improve SP occupancy by reducing exposed intrathread global memory latency. We implemented a prefetching optimization that initiates loads to the next tiles prior to performing computation for the current tile. The optimization also increases the number of registers required by each thread by two, to 11. As previously mentioned, this reduces the number of blocks that can be scheduled per SM by 1, reducing simultaneous thread count by a third. This version was capable of 87.10 GFLOPS performance, inferior to performing only tiling and unrolling. In this case, intra-thread latency reduction is insufficient to make up for the reduction of simultaneously executed threads. However, the difference between the performances of the two con- figurations is only 5%. Although we have reduced the number of simultaneously active threads by a third, these threads take nearly a third less time to execute because the prefetching optimization eliminates much of the time threads wait on global memory. This illustrates the principle that although many threads are generally desirable, full utilization of execution resources is achieved when there are enough threads to avoid being stalled on global memory access. These kinds of optimization interactions, plus the uncertainty of the architecture features and code executed, make it challenging to find the peak performance of an application on this architecture. 5. Application Study We performed an application study with the intent of testing the applicability and effectiveness of the principles in Section 4 on real applications. We have selected a suite of applications acquired from various sources that have different purposes and code behavior but are also reasonably well-suited for execution on the GeForce 8800. These applications, even ones with kernels of a few hundred lines, 78