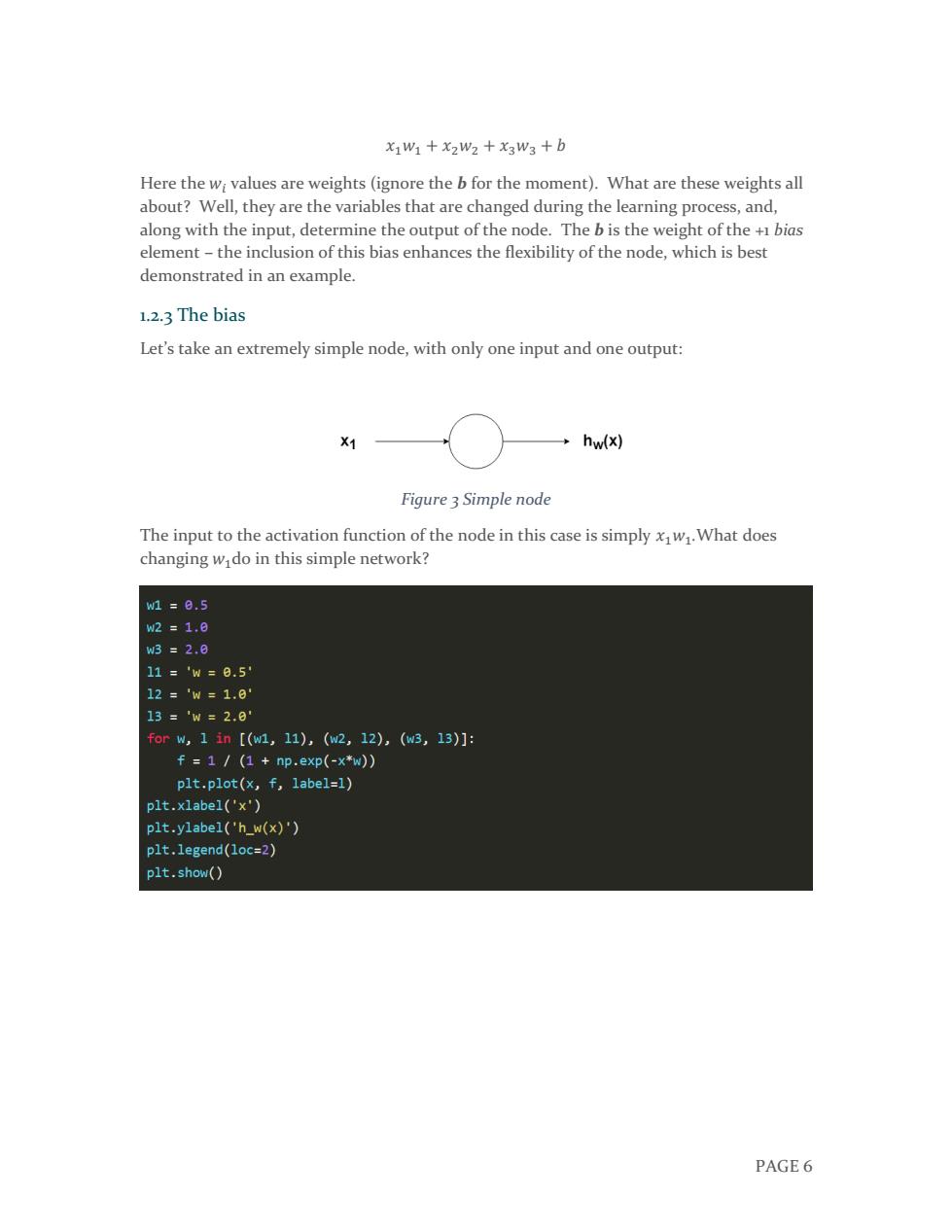

$$x_1 w_1 + x_2 w_2 + x_3 w_3 + b$$

Here the $w_i$ values are weights (ignore the $b$ for the moment). What are these weights all about? They are the variables that are changed during the learning process and, along with the input, determine the output of the node. The $b$ is the weight of the +1 bias element. Including this bias enhances the flexibility of the node, which is best demonstrated in an example.

1.2.3 The bias

Let's take an extremely simple node, with only one input and one output:

Figure 3: Simple node (input $x_1$, output $h_w(x)$)

The input to the activation function of the node in this case is simply $x_1 w_1$. What does changing $w_1$ do in this simple network?

```python
import numpy as np
import matplotlib.pyplot as plt

# Assumed input range: x is defined outside this excerpt.
x = np.arange(-8, 8, 0.1)

# Three weight values and their legend labels
w1 = 0.5
w2 = 1.0
w3 = 2.0
l1 = 'w = 0.5'
l2 = 'w = 1.0'
l3 = 'w = 2.0'

# Plot the sigmoid of x * w for each weight
for w, l in [(w1, l1), (w2, l2), (w3, l3)]:
    f = 1 / (1 + np.exp(-x * w))
    plt.plot(x, f, label=l)
plt.xlabel('x')
plt.ylabel('h_w(x)')
plt.legend(loc=2)
plt.show()
```
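To make the weighted sum concrete, here is a minimal sketch of a single node in plain Python. It assumes the sigmoid activation used in the plotting code. The names `sigmoid` and `node_output` and all numeric values are hypothetical, chosen only for illustration, and the standard `math` module is used so the example has no dependencies.

```python
import math


def sigmoid(z):
    """Sigmoid activation: 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def node_output(inputs, weights, bias):
    """Weighted sum of the inputs plus the bias, passed through the sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# Hypothetical three-input node: x1*w1 + x2*w2 + x3*w3 + b
inputs = [1.0, 2.0, 3.0]
weights = [0.5, -1.0, 0.5]
bias = 0.0
three_input = node_output(inputs, weights, bias)

# Single-input node with no bias: the activation input is just x1 * w1.
# The same input x1 = 1.0 is evaluated with three different weights.
single_input = [node_output([1.0], [w1], 0.0) for w1 in (0.5, 1.0, 2.0)]

print(three_input)
print(single_input)
```

With these values the weighted sum of the three-input node is zero, so its output sits at the midpoint of the sigmoid. In the single-input case, increasing $w_1$ for a fixed positive input moves the output closer to 1.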