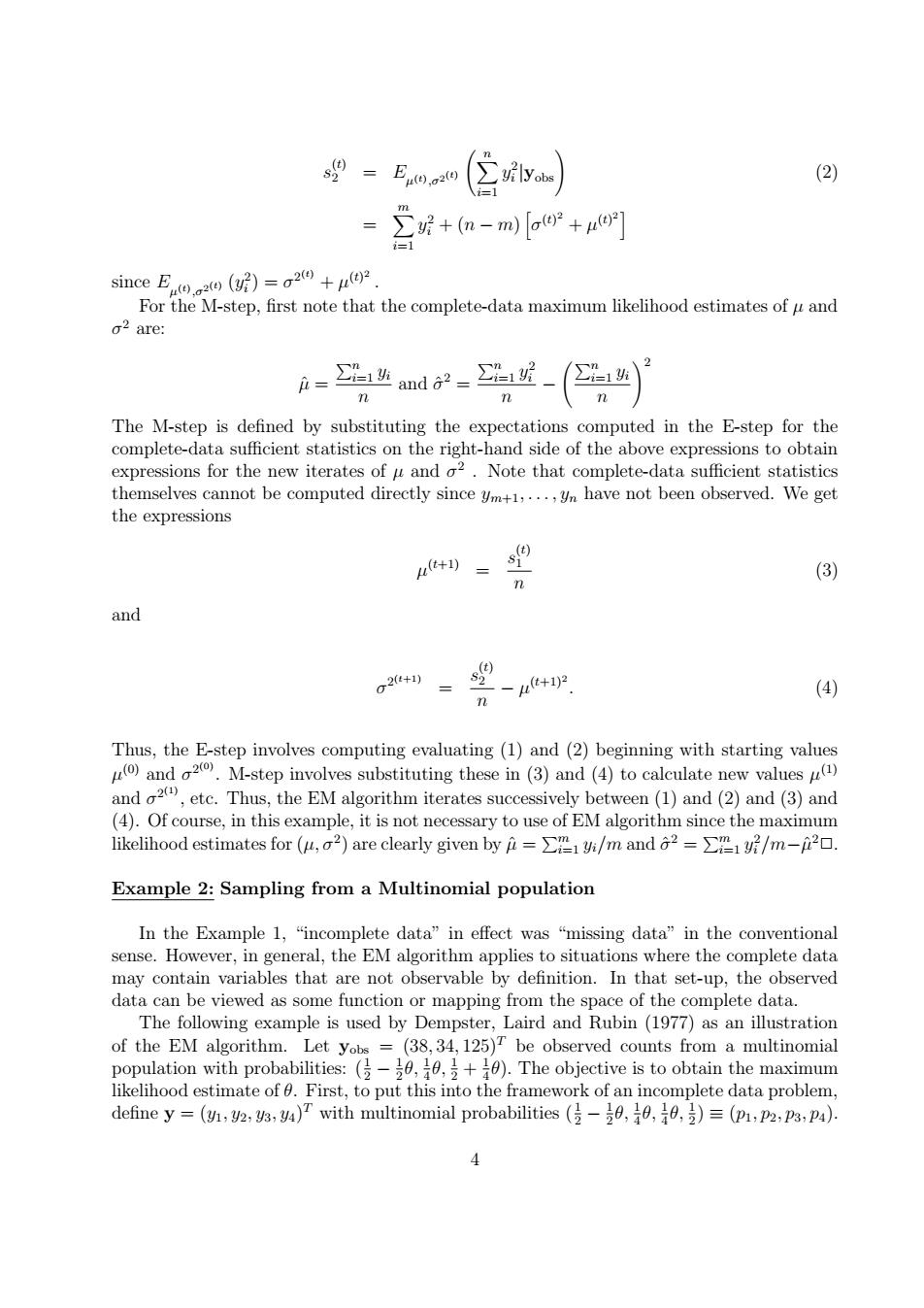

$$
s_2^{(t)} = E_{\mu^{(t)},\,\sigma^{2\,(t)}}\left(\sum_{i=1}^{n} y_i^2 \,\Big|\, y_{obs}\right) = \sum_{i=1}^{m} y_i^2 + (n-m)\left[\sigma^{2\,(t)} + \left(\mu^{(t)}\right)^2\right] \tag{2}
$$

since $E_{\mu^{(t)},\,\sigma^{2\,(t)}}\left(y_i^2\right) = \sigma^{2\,(t)} + \left(\mu^{(t)}\right)^2$.
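The E-step for Example 1 can be sketched in a few lines of Python. Equation (1) is not reproduced in this section, so the sketch assumes it has the form $s_1^{(t)} = \sum_{i=1}^{m} y_i + (n-m)\mu^{(t)}$, which follows from $E(y_i) = \mu^{(t)}$ for the unobserved values. The function name `e_step` and its argument names are illustrative, not taken from the notes.

```python
def e_step(y_obs, n, mu, sigma2):
    """Expected complete-data sufficient statistics (s1, s2).

    y_obs  : the m observed values y_1, ..., y_m
    n      : total sample size (n - m values are unobserved)
    mu     : current iterate of the mean
    sigma2 : current iterate of the variance
    """
    m = len(y_obs)
    n_missing = n - m
    # Assumed form of equation (1): each missing y_i contributes E(y_i) = mu.
    s1 = sum(y_obs) + n_missing * mu
    # Equation (2): each missing y_i contributes E(y_i^2) = sigma2 + mu^2.
    s2 = sum(y * y for y in y_obs) + n_missing * (sigma2 + mu ** 2)
    return s1, s2
```

For instance, with three observed values `[1.0, 2.0, 3.0]`, `n = 5`, and current iterates `mu = 2.0`, `sigma2 = 1.0`, the call `e_step([1.0, 2.0, 3.0], 5, 2.0, 1.0)` returns `(10.0, 24.0)`.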
For the M-step, first note that the complete-data maximum likelihood estimates of $\mu$ and $\sigma^2$ are:

$$
\hat{\mu} = \frac{\sum_{i=1}^{n} y_i}{n} \quad \text{and} \quad \hat{\sigma}^2 = \frac{\sum_{i=1}^{n} y_i^2}{n} - \left(\frac{\sum_{i=1}^{n} y_i}{n}\right)^2 .
$$

The M-step is defined by substituting the expectations computed in the E-step for the complete-data sufficient statistics on the right-hand side of the above expressions to obtain expressions for the new iterates of $\mu$ and $\sigma^2$. Note that the complete-data sufficient statistics themselves cannot be computed directly, since $y_{m+1}, \ldots, y_n$ have not been observed. We get the expressions

$$
\mu^{(t+1)} = \frac{s_1^{(t)}}{n} \tag{3}
$$

and

$$
\sigma^{2\,(t+1)} = \frac{s_2^{(t)}}{n} - \left(\mu^{(t+1)}\right)^2 . \tag{4}
$$

Thus, the E-step involves evaluating (1) and (2), beginning with starting values $\mu^{(0)}$ and $\sigma^{2\,(0)}$. The M-step involves substituting these in (3) and (4) to calculate new values $\mu^{(1)}$ and $\sigma^{2\,(1)}$, and so on. The EM algorithm therefore iterates successively between (1) and (2) on the one hand and (3) and (4) on the other. Of course, in this example it is not necessary to use the EM algorithm, since the maximum likelihood estimates for $(\mu, \sigma^2)$ are clearly given by $\hat{\mu} = \sum_{i=1}^{m} y_i / m$ and $\hat{\sigma}^2 = \sum_{i=1}^{m} y_i^2 / m - \hat{\mu}^2$. $\square$

Example 2: Sampling from a Multinomial population

In Example 1, “incomplete data” in effect was “missing data” in the conventional sense. However, in general, the EM algorithm applies to situations where the complete data may contain variables that are not observable by definition. In that set-up, the observed data can be viewed as some function or mapping from the space of the complete data.

The following example is used by Dempster, Laird and Rubin (1977) as an illustration of the EM algorithm. Let $y_{obs} = (38, 34, 125)^T$ be observed counts from a multinomial population with probabilities $\left(\frac{1}{2} - \frac{1}{2}\theta,\ \frac{1}{4}\theta,\ \frac{1}{2} + \frac{1}{4}\theta\right)$. The objective is to obtain the maximum likelihood estimate of $\theta$. First, to put this into the framework of an incomplete data problem, define $y = (y_1, y_2, y_3, y_4)^T$ with multinomial probabilities $\left(\frac{1}{2} - \frac{1}{2}\theta,\ \frac{1}{4}\theta,\ \frac{1}{4}\theta,\ \frac{1}{2}\right) \equiv (p_1, p_2, p_3, p_4)$.
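The relation between the complete and observed data in Example 2 can be sketched as below. The sketch assumes that the third observed cell is the sum of complete-data cells 3 and 4; this is inferred from the cell probabilities, since $p_3 + p_4 = \frac{1}{4}\theta + \frac{1}{2}$. The function names and the particular split of 125 into 25 and 100 are hypothetical, chosen only for illustration.

```python
from fractions import Fraction


def complete_probs(theta):
    """Cell probabilities (p1, p2, p3, p4) of the complete data."""
    half, quarter = Fraction(1, 2), Fraction(1, 4)
    return (half - half * theta, quarter * theta, quarter * theta, half)


def observed_probs(theta):
    """Cell probabilities of the observed data: cells 3 and 4 merged (assumed)."""
    p1, p2, p3, p4 = complete_probs(theta)
    return (p1, p2, p3 + p4)


def collapse(y):
    """Map complete counts (y1, y2, y3, y4) to observed counts (assumed mapping)."""
    y1, y2, y3, y4 = y
    return (y1, y2, y3 + y4)


# Hypothetical complete counts consistent with y_obs = (38, 34, 125);
# the split of 125 into y3 and y4 is not observable.
y_complete = (38, 34, 25, 100)
y_observed = collapse(y_complete)
```

Many different complete-data vectors collapse to the same observed vector, which is what makes $y_3$ and $y_4$ unobservable by definition rather than merely missing.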