正在加载图片...

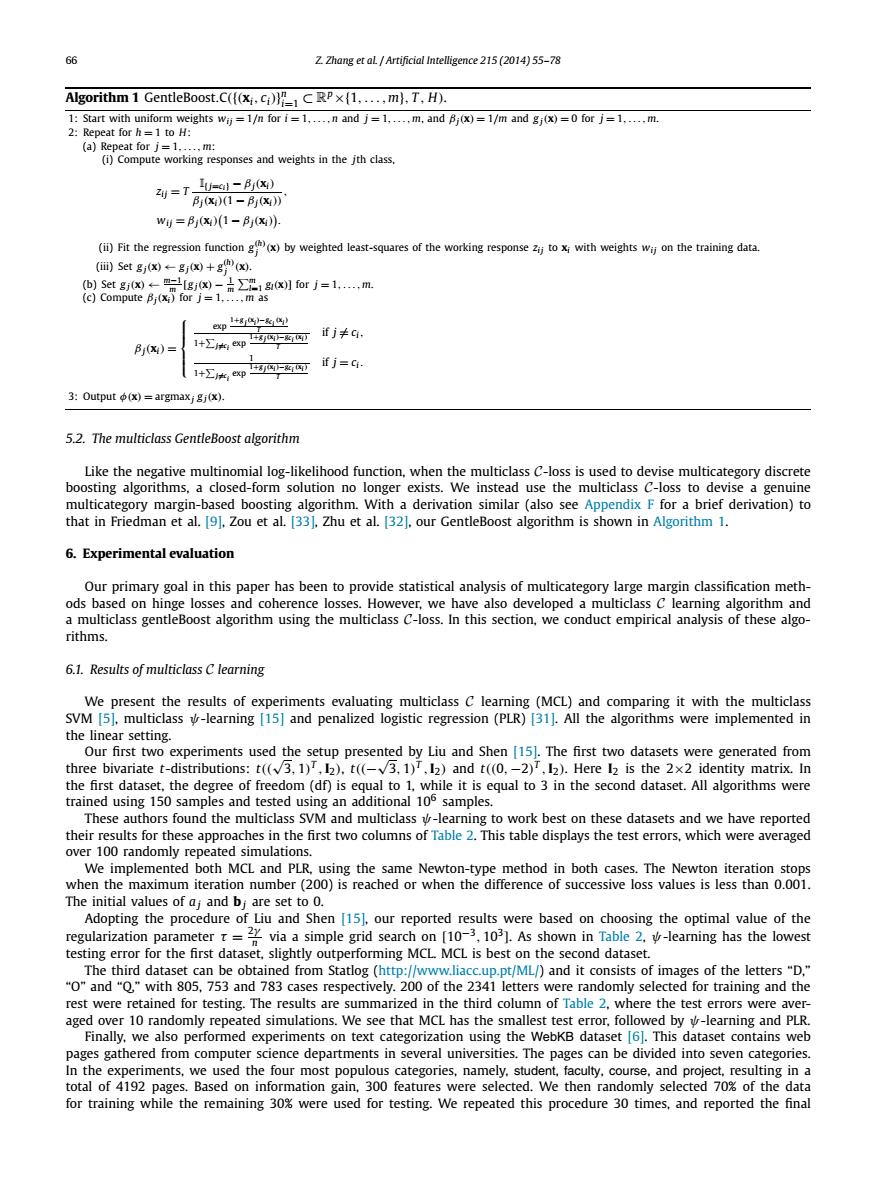

6 Z Zhang et aL Artificial Intelligence 215 (2014)55-78 Algorithm 1 GentleBoost.C(((xi.Ci))CRPx(1.....m).T.H). 1:Start with uniform weights wij =1/n for i =1,....n and j=1,....m,and Bi(x)=1/m and gj(x)=0 for j=1.....m. 2:Repeat for h=1 to H: (a)Repeat for j=1....,m: (i)Compute working responses and weights in the jth class. 到=T =-Bj) (X)(1-B(X)》 w=B(X)(1-K) (i)Fit the regression function(x)by weighted least-squares of the working responseto with weights wjon the training data. (ii)Set gj(x)--gj(x)+g(x). 图图Aj=1m ep中 +8网一微好网 fj≠c: Bj(x) 1+∑*xp 1 if j=q. 1+∑*x即 1+网一网可 3:Output (x)=argmaxi gj(x). 5.2.The multiclass GentleBoost algorithm Like the negative multinomial log-likelihood function,when the multiclass C-loss is used to devise multicategory discrete boosting algorithms,a closed-form solution no longer exists.We instead use the multiclass C-loss to devise a genuine multicategory margin-based boosting algorithm.With a derivation similar(also see Appendix F for a brief derivation)to that in Friedman et al.[9.Zou et al.[331.Zhu et al.[321.our GentleBoost algorithm is shown in Algorithm 1. 6.Experimental evaluation Our primary goal in this paper has been to provide statistical analysis of multicategory large margin classification meth- ods based on hinge losses and coherence losses.However,we have also developed a multiclass C learning algorithm and a multiclass gentleBoost algorithm using the multiclass C-loss.In this section,we conduct empirical analysis of these algo- rithms. 6.1.Results of multiclass C learning We present the results of experiments evaluating multiclass C learning(MCL)and comparing it with the multiclass SVM [5].multiclass v-learning [15]and penalized logistic regression(PLR)[31].All the algorithms were implemented in the linear setting. Our first two experiments used the setup presented by Liu and Shen [15].The first two datasets were generated from three bivariate t-distributions:t((v3,1),I2).t((-3,1)T,I2)and t((0,-2),I2).Here I2 is the 2x2 identity matrix.In the first dataset,the degree of freedom(df)is equal to 1,while it is equal to 3 in the second dataset.All algorithms were trained using 150 samples and tested using an additional 106 samples. These authors found the multiclass SVM and multiclass -learning to work best on these datasets and we have reported their results for these approaches in the first two columns of Table 2.This table displays the test errors,which were averaged over 100 randomly repeated simulations. We implemented both MCL and PLR,using the same Newton-type method in both cases.The Newton iteration stops when the maximum iteration number(200)is reached or when the difference of successive loss values is less than 0.001. The initial values of aj and bj are set to 0. Adopting the procedure of Liu and Shen [15],our reported results were based on choosing the optimal value of the regularization parametervia a simple grid search on10101.As shown in Table .-learning has the lowest testing error for the first dataset,slightly outperforming MCL.MCL is best on the second dataset. The third dataset can be obtained from Statlog(http://www.liacc.up.pt/ML/)and it consists of images of the letters"D," "O"and "Q,"with 805,753 and 783 cases respectively.200 of the 2341 letters were randomly selected for training and the rest were retained for testing.The results are summarized in the third column of Table 2,where the test errors were aver- aged over 10 randomly repeated simulations.We see that MCL has the smallest test error,followed by -learning and PLR. Finally,we also performed experiments on text categorization using the WebKB dataset [6].This dataset contains web pages gathered from computer science departments in several universities.The pages can be divided into seven categories. In the experiments,we used the four most populous categories,namely.student,faculty,course,and project,resulting in a total of 4192 pages.Based on information gain,300 features were selected.We then randomly selected 70%of the data for training while the remaining 30%were used for testing.We repeated this procedure 30 times,and reported the final66 Z. Zhang et al. / Artificial Intelligence 215 (2014) 55–78 Algorithm 1 GentleBoost.C({(xi, ci)} n i=1 ⊂ Rp×{1,...,m}, T , H). 1: Start with uniform weights wij = 1/n for i = 1,...,n and j = 1,...,m, and βj(x) = 1/m and g j(x) = 0 for j = 1,...,m. 2: Repeat for h = 1 to H: (a) Repeat for j = 1,...,m: (i) Compute working responses and weights in the jth class, zij = T I{j=ci} − βj(xi) βj(xi)(1 − βj(xi)), wij = βj(xi) 1 − βj(xi) . (ii) Fit the regression function g(h) j (x) by weighted least-squares of the working response zij to xi with weights wij on the training data. (iii) Set g j(x) ← g j(x) + g(h) j (x). (b) Set g j(x) ← m−1 m [g j(x) − 1 m m l=1 gl(x)] for j = 1,...,m. (c) Compute βj(xi) for j = 1,...,m as βj(xi) = ⎧ ⎪⎪⎨ ⎪⎪⎩ exp 1+g j(xi)−gci (xi) T 1+ j=ci exp 1+g j(xi)−gci (xi) T if j = ci, 1 1+ j=ci exp 1+g j(xi)−gci (xi) T if j = ci . 3: Output φ(x) = argmaxj g j(x). 5.2. The multiclass GentleBoost algorithm Like the negative multinomial log-likelihood function, when the multiclass C-loss is used to devise multicategory discrete boosting algorithms, a closed-form solution no longer exists. We instead use the multiclass C-loss to devise a genuine multicategory margin-based boosting algorithm. With a derivation similar (also see Appendix F for a brief derivation) to that in Friedman et al. [9], Zou et al. [33], Zhu et al. [32], our GentleBoost algorithm is shown in Algorithm 1. 6. Experimental evaluation Our primary goal in this paper has been to provide statistical analysis of multicategory large margin classification methods based on hinge losses and coherence losses. However, we have also developed a multiclass C learning algorithm and a multiclass gentleBoost algorithm using the multiclass C-loss. In this section, we conduct empirical analysis of these algorithms. 6.1. Results of multiclass C learning We present the results of experiments evaluating multiclass C learning (MCL) and comparing it with the multiclass SVM [5], multiclass ψ-learning [15] and penalized logistic regression (PLR) [31]. All the algorithms were implemented in the linear setting. Our first two experiments used the setup presented by Liu and Shen [15]. The first two datasets were generated from three bivariate t-distributions: t((√3, 1)T ,I2), t((− √3, 1)T ,I2) and t((0,−2)T ,I2). Here I2 is the 2×2 identity matrix. In the first dataset, the degree of freedom (df) is equal to 1, while it is equal to 3 in the second dataset. All algorithms were trained using 150 samples and tested using an additional 106 samples. These authors found the multiclass SVM and multiclass ψ-learning to work best on these datasets and we have reported their results for these approaches in the first two columns of Table 2. This table displays the test errors, which were averaged over 100 randomly repeated simulations. We implemented both MCL and PLR, using the same Newton-type method in both cases. The Newton iteration stops when the maximum iteration number (200) is reached or when the difference of successive loss values is less than 0.001. The initial values of a j and bj are set to 0. Adopting the procedure of Liu and Shen [15], our reported results were based on choosing the optimal value of the regularization parameter τ = 2γ n via a simple grid search on [10−3, 103]. As shown in Table 2, ψ-learning has the lowest testing error for the first dataset, slightly outperforming MCL. MCL is best on the second dataset. The third dataset can be obtained from Statlog (http://www.liacc.up.pt/ML/) and it consists of images of the letters “D,” “O” and “Q,” with 805, 753 and 783 cases respectively. 200 of the 2341 letters were randomly selected for training and the rest were retained for testing. The results are summarized in the third column of Table 2, where the test errors were averaged over 10 randomly repeated simulations. We see that MCL has the smallest test error, followed by ψ-learning and PLR. Finally, we also performed experiments on text categorization using the WebKB dataset [6]. This dataset contains web pages gathered from computer science departments in several universities. The pages can be divided into seven categories. In the experiments, we used the four most populous categories, namely, student, faculty, course, and project, resulting in a total of 4192 pages. Based on information gain, 300 features were selected. We then randomly selected 70% of the data for training while the remaining 30% were used for testing. We repeated this procedure 30 times, and reported the final