
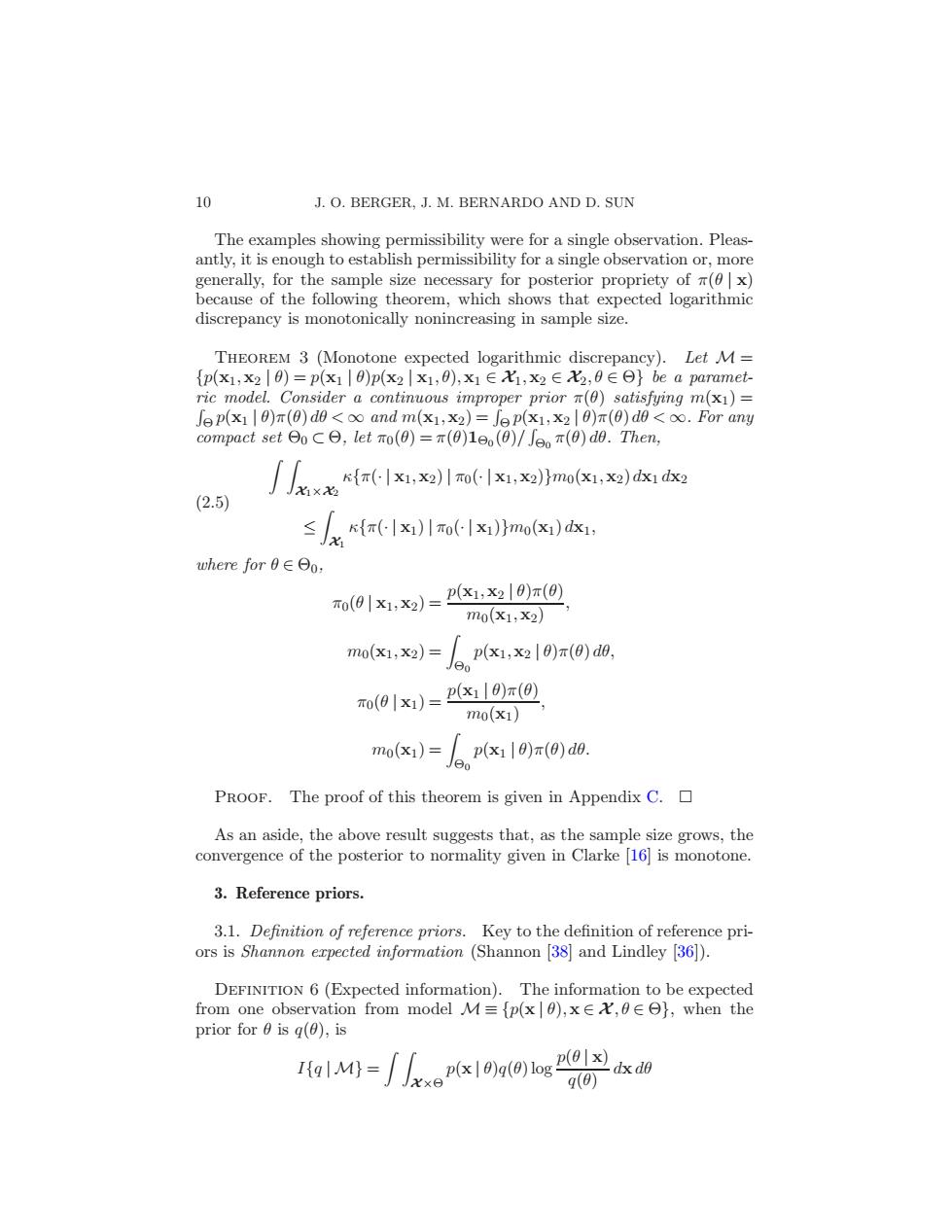

10 J. O. BERGER, J. M. BERNARDO AND D. SUN

The examples showing permissibility were for a single observation. Pleasantly, it is enough to establish permissibility for a single observation or, more generally, for the sample size necessary for posterior propriety of $\pi(\theta \mid x)$, because of the following theorem, which shows that expected logarithmic discrepancy is monotonically nonincreasing in sample size.

Theorem 3 (Monotone expected logarithmic discrepancy). Let $\mathcal{M} = \{p(x_1, x_2 \mid \theta) = p(x_1 \mid \theta)\, p(x_2 \mid x_1, \theta),\ x_1 \in \mathcal{X}_1,\ x_2 \in \mathcal{X}_2,\ \theta \in \Theta\}$ be a parametric model. Consider a continuous improper prior $\pi(\theta)$ satisfying $m(x_1) = \int_{\Theta} p(x_1 \mid \theta)\, \pi(\theta)\, d\theta < \infty$ and $m(x_1, x_2) = \int_{\Theta} p(x_1, x_2 \mid \theta)\, \pi(\theta)\, d\theta < \infty$. For any compact set $\Theta_0 \subset \Theta$, let $\pi_0(\theta) = \pi(\theta)\, 1_{\Theta_0}(\theta) / \int_{\Theta_0} \pi(\theta)\, d\theta$. Then,

$$
\iint_{\mathcal{X}_1 \times \mathcal{X}_2} \kappa\{\pi(\cdot \mid x_1, x_2) \mid \pi_0(\cdot \mid x_1, x_2)\}\, m_0(x_1, x_2)\, dx_1\, dx_2
\le \int_{\mathcal{X}_1} \kappa\{\pi(\cdot \mid x_1) \mid \pi_0(\cdot \mid x_1)\}\, m_0(x_1)\, dx_1,
\tag{2.5}
$$

where for $\theta \in \Theta_0$,

$$
\pi_0(\theta \mid x_1, x_2) = \frac{p(x_1, x_2 \mid \theta)\, \pi(\theta)}{m_0(x_1, x_2)}, \qquad
m_0(x_1, x_2) = \int_{\Theta_0} p(x_1, x_2 \mid \theta)\, \pi(\theta)\, d\theta,
$$

$$
\pi_0(\theta \mid x_1) = \frac{p(x_1 \mid \theta)\, \pi(\theta)}{m_0(x_1)}, \qquad
m_0(x_1) = \int_{\Theta_0} p(x_1 \mid \theta)\, \pi(\theta)\, d\theta.
$$

Proof. The proof of this theorem is given in Appendix C.

As an aside, the above result suggests that, as the sample size grows, the convergence of the posterior to normality given in Clarke [16] is monotone.

3. Reference priors.

3.1. Definition of reference priors. Key to the definition of reference priors is Shannon expected information (Shannon [38] and Lindley [36]).

Definition 6 (Expected information). The information to be expected from one observation from model $\mathcal{M} \equiv \{p(x \mid \theta),\ x \in \mathcal{X},\ \theta \in \Theta\}$, when the prior for $\theta$ is $q(\theta)$, is

$$
I\{q \mid \mathcal{M}\} = \iint_{\mathcal{X} \times \Theta} p(x \mid \theta)\, q(\theta)\, \log \frac{p(\theta \mid x)}{q(\theta)}\, dx\, d\theta
$$
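As a rough numerical illustration of the expected information $I\{q \mid \mathcal{M}\}$, the following sketch evaluates a discrete analogue in which the integrals become finite sums. Everything specific here is an assumption made for illustration and is not taken from the paper: the model is a Binomial count $x \sim \mathrm{Bin}(n, \theta)$, the parameter lives on a hypothetical five-point grid `thetas` with a uniform proper prior `q`, and $p(\theta \mid x)$ is taken to be the posterior obtained from Bayes' theorem with marginal $p(x) = \sum_\theta p(x \mid \theta)\, q(\theta)$.

```python
import math


def binom_pmf(x, n, theta):
    """Probability of x successes in n Bernoulli(theta) trials."""
    return math.comb(n, x) * theta**x * (1 - theta) ** (n - x)


def expected_information(thetas, q, n):
    """Discrete analogue of I{q | M} for x ~ Binomial(n, theta).

    thetas: grid of parameter values (illustrative assumption)
    q:      prior probabilities on that grid, summing to 1
    n:      number of Bernoulli trials summarised by the count x
    """
    total = 0.0
    for x in range(n + 1):
        # Marginal p(x) = sum over theta of p(x | theta) q(theta).
        px = sum(binom_pmf(x, n, t) * w for t, w in zip(thetas, q))
        for t, w in zip(thetas, q):
            joint = binom_pmf(x, n, t) * w  # p(x | theta) q(theta)
            if joint > 0.0:
                posterior = joint / px  # p(theta | x) by Bayes' theorem
                total += joint * math.log(posterior / w)
    return total


thetas = [0.1, 0.3, 0.5, 0.7, 0.9]  # hypothetical parameter grid
q = [0.2] * 5                       # uniform prior on the grid

info_1 = expected_information(thetas, q, 1)
info_10 = expected_information(thetas, q, 10)
print(f"n = 1:  {info_1:.4f} nats")
print(f"n = 10: {info_10:.4f} nats")
```

In this discrete setting the quantity is the mutual information between $\theta$ and $x$, so it is nonnegative and cannot exceed the entropy of the prior, here $\log 5$. It is zero when the data carry no information about $\theta$, as with `n = 0`.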