正在加载图片...

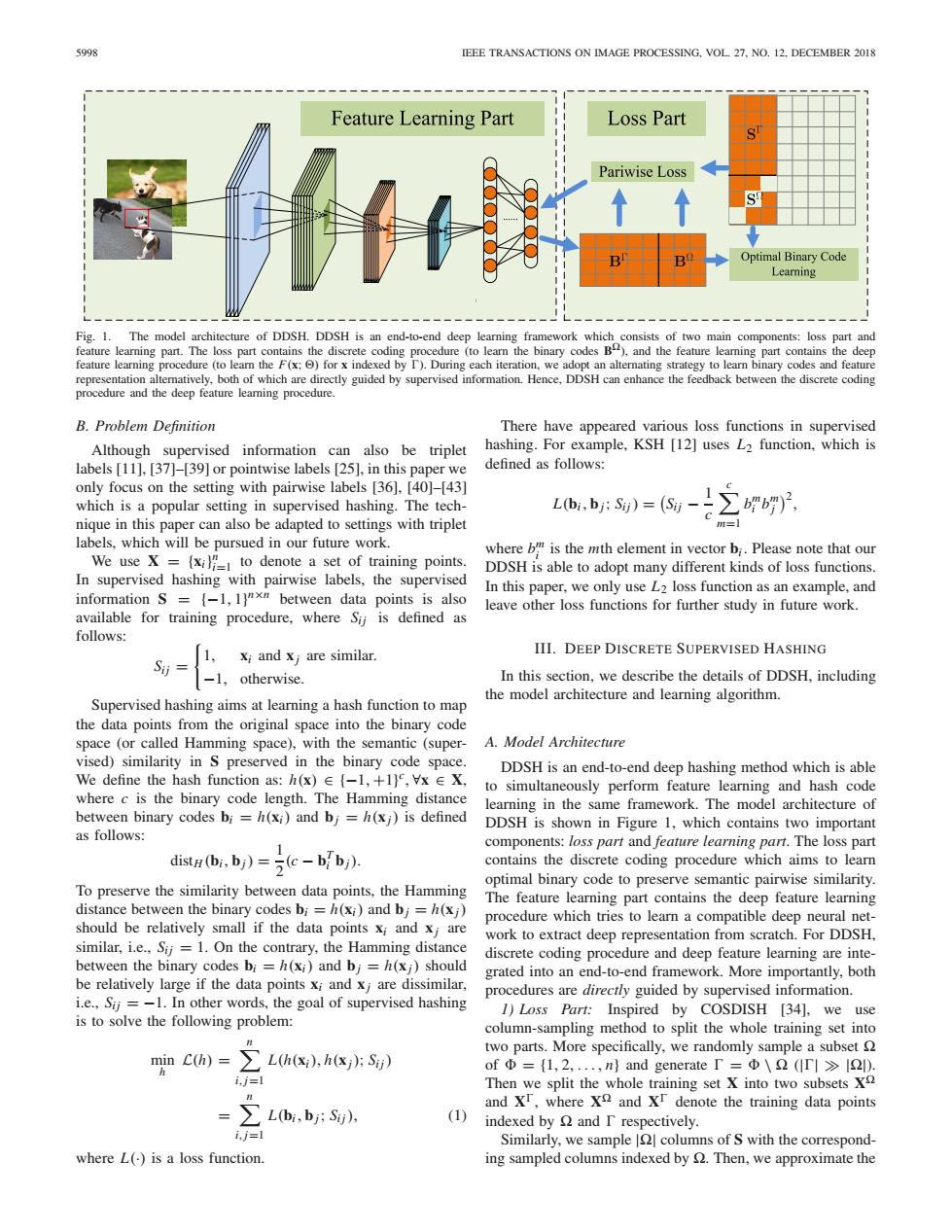

5998 IEEE TRANSACTIONS ON IMAGE PROCESSING,VOL.27.NO.12,DECEMBER 2018 Feature Learning Part Loss Part ! Pariwise Loss 心么 B Optimal Binary Code Learning Fig.1. The model architecture of DDSH.DDSH is an end-to-end deep learning framework which consists of two main components:loss part and feature leaming part.The loss part contains the discrete coding procedure (to learn the binary codes B),and the feature leaming part contains the deep feature learning procedure (to lear the F(x:for x indexed by I).During each iteration,we adopt an alternating strategy to learn binary codes and feature representation alteratively,both of which are directly guided by supervised information.Hence,DDSH can enhance the feedback between the discrete coding procedure and the deep feature learning procedure. B.Problem Definition There have appeared various loss functions in supervised Although supervised information can also be triplet hashing.For example,KSH [12]uses L2 function,which is labels [11],[37]-[39]or pointwise labels [25].in this paper we defined as follows: only focus on the setting with pairwise labels [36].[40]-[43] which is a popular setting in supervised hashing.The tech- L(bi,bj:Sij)=(Si b)2 nique in this paper can also be adapted to settings with triplet labels,which will be pursued in our future work. where b"is the mth element in vector bi.Please note that our We use X =(xi)to denote a set of training points DDSH is able to adopt many different kinds of loss functions. In supervised hashing with pairwise labels,the supervised information S =(-1,1]xn between data points is also In this paper,we only use L2 loss function as an example,and leave other loss functions for further study in future work. available for training procedure,where Sij is defined as follows: xi and xj are similar. III.DEEP DISCRETE SUPERVISED HASHING -1,otherwise. In this section,we describe the details of DDSH,including Supervised hashing aims at learning a hash function to map the model architecture and learning algorithm. the data points from the original space into the binary code space (or called Hamming space),with the semantic (super- A.Model Architecture vised)similarity in S preserved in the binary code space DDSH is an end-to-end deep hashing method which is able We define the hash function as:h(x)(-1,+1),Vx EX, to simultaneously perform feature learning and hash code where c is the binary code length.The Hamming distance learning in the same framework.The model architecture of between binary codes bi=h(xi)and bj=h(xj)is defined DDSH is shown in Figure 1,which contains two important as follows: 1 components:loss part and feature learning part.The loss part distH (bi,bj)=(c-b bj) contains the discrete coding procedure which aims to learn optimal binary code to preserve semantic pairwise similarity. To preserve the similarity between data points,the Hamming The feature learning part contains the deep feature learning distance between the binary codes bi=h(xi)and bj =h(xj) procedure which tries to learn a compatible deep neural net- should be relatively small if the data points xi and xj are work to extract deep representation from scratch.For DDSH, similar,i.e.,Sij=1.On the contrary,the Hamming distance discrete coding procedure and deep feature learning are inte- between the binary codes bi=h(xi)and bj=h(xj)should grated into an end-to-end framework.More importantly,both be relatively large if the data points xi and xi are dissimilar, procedures are directly guided by supervised information. i.e.,Sij =-1.In other words,the goal of supervised hashing 1)Loss Part:Inspired by COSDISH [34],we use is to solve the following problem: column-sampling method to split the whole training set into min C(h)=∑Lh(x),h(x片S) two parts.More specifically,we randomly sample a subset ofΦ={l,2,,n}and generate I=Φ\2(Tl>l2. i.j=l Then we split the whole training set X into two subsets X =∑Lb,b;S), and Xr,where X and Xr denote the training data points (1) indexed by and I respectively. i,j=1 Similarly,we sample |Q columns of S with the correspond- where L()is a loss function. ing sampled columns indexed by Then,we approximate the5998 IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 27, NO. 12, DECEMBER 2018 Fig. 1. The model architecture of DDSH. DDSH is an end-to-end deep learning framework which consists of two main components: loss part and feature learning part. The loss part contains the discrete coding procedure (to learn the binary codes B), and the feature learning part contains the deep feature learning procedure (to learn the F(x; ) for x indexed by ). During each iteration, we adopt an alternating strategy to learn binary codes and feature representation alternatively, both of which are directly guided by supervised information. Hence, DDSH can enhance the feedback between the discrete coding procedure and the deep feature learning procedure. B. Problem Definition Although supervised information can also be triplet labels [11], [37]–[39] or pointwise labels [25], in this paper we only focus on the setting with pairwise labels [36], [40]–[43] which is a popular setting in supervised hashing. The technique in this paper can also be adapted to settings with triplet labels, which will be pursued in our future work. We use X = {xi}n i=1 to denote a set of training points. In supervised hashing with pairwise labels, the supervised information S = {−1, 1}n×n between data points is also available for training procedure, where Sij is defined as follows: Sij = 1, xi and x j are similar. −1, otherwise. Supervised hashing aims at learning a hash function to map the data points from the original space into the binary code space (or called Hamming space), with the semantic (supervised) similarity in S preserved in the binary code space. We define the hash function as: h(x) ∈ {−1, +1} c , ∀x ∈ X, where c is the binary code length. The Hamming distance between binary codes bi = h(xi) and bj = h(x j) is defined as follows: distH (bi, bj) = 1 2 (c − bT i bj). To preserve the similarity between data points, the Hamming distance between the binary codes bi = h(xi) and bj = h(x j) should be relatively small if the data points xi and x j are similar, i.e., Sij = 1. On the contrary, the Hamming distance between the binary codes bi = h(xi) and bj = h(x j) should be relatively large if the data points xi and x j are dissimilar, i.e., Sij = −1. In other words, the goal of supervised hashing is to solve the following problem: min h L(h) = n i,j=1 L(h(xi), h(x j); Sij) = n i,j=1 L(bi, bj; Sij), (1) where L(·) is a loss function. There have appeared various loss functions in supervised hashing. For example, KSH [12] uses L2 function, which is defined as follows: L(bi, bj; Sij) = Sij − 1 c c m=1 bm i bm j 2 , where bm i is the mth element in vector bi . Please note that our DDSH is able to adopt many different kinds of loss functions. In this paper, we only use L2 loss function as an example, and leave other loss functions for further study in future work. III. DEEP DISCRETE SUPERVISED HASHING In this section, we describe the details of DDSH, including the model architecture and learning algorithm. A. Model Architecture DDSH is an end-to-end deep hashing method which is able to simultaneously perform feature learning and hash code learning in the same framework. The model architecture of DDSH is shown in Figure 1, which contains two important components: loss part and feature learning part. The loss part contains the discrete coding procedure which aims to learn optimal binary code to preserve semantic pairwise similarity. The feature learning part contains the deep feature learning procedure which tries to learn a compatible deep neural network to extract deep representation from scratch. For DDSH, discrete coding procedure and deep feature learning are integrated into an end-to-end framework. More importantly, both procedures are directly guided by supervised information. 1) Loss Part: Inspired by COSDISH [34], we use column-sampling method to split the whole training set into two parts. More specifically, we randomly sample a subset of = {1, 2,..., n} and generate = \ (||||). Then we split the whole training set X into two subsets X and X, where X and X denote the training data points indexed by and respectively. Similarly, we sample || columns of S with the corresponding sampled columns indexed by . Then, we approximate the��������������