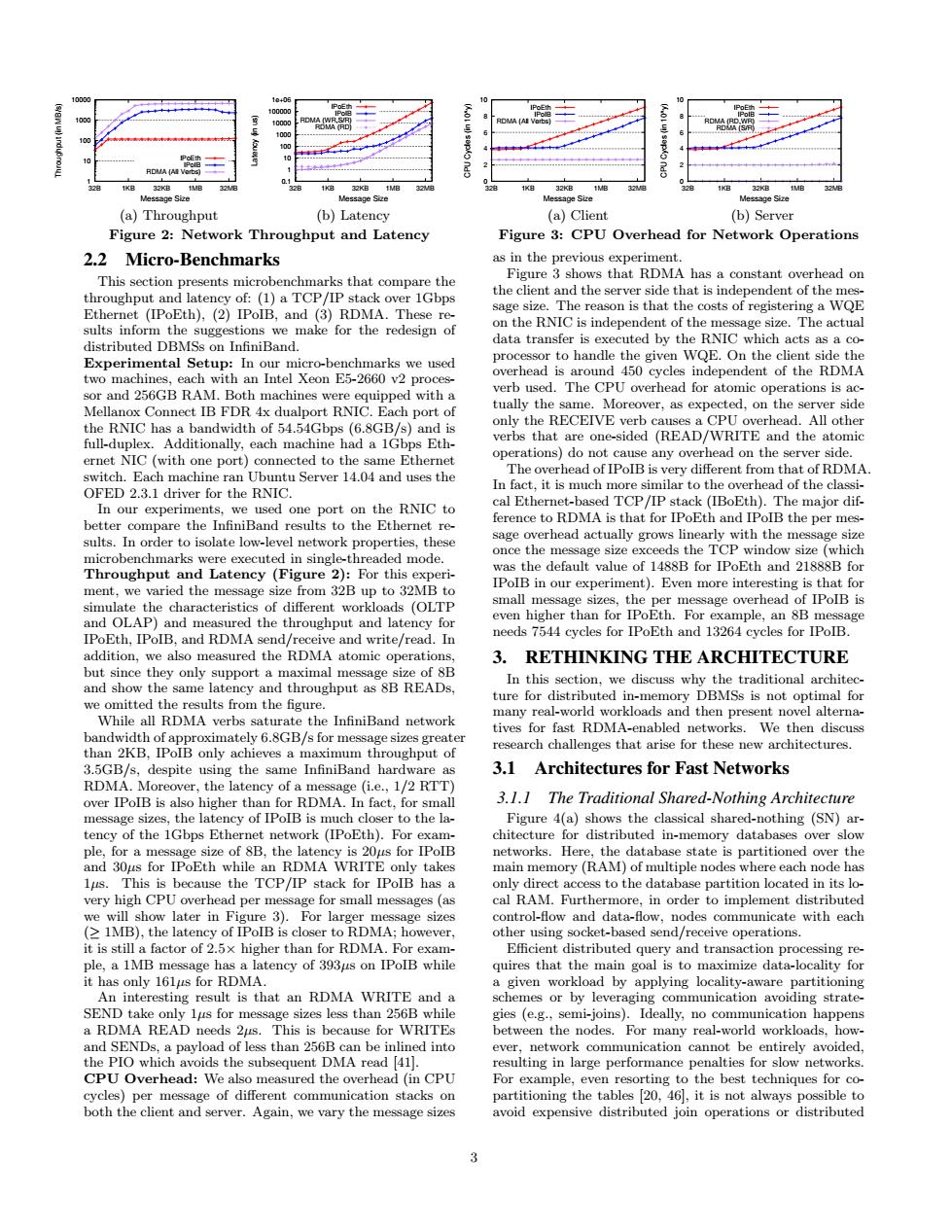

Figure 2: Network Throughput and Latency. (a) Throughput (in MB/s) and (b) latency (in µs) versus message size (32B to 32MB). Panel (a) plots IPoEth, IPoIB, and RDMA (all verbs); panel (b) plots IPoEth, IPoIB, RDMA (WR, S/R), and RDMA (RD).

2.2 Micro-Benchmarks

This section presents micro-benchmarks that compare the throughput and latency of (1) a TCP/IP stack over 1Gbps Ethernet (IPoEth), (2) IPoIB, and (3) RDMA. These results inform the suggestions we make for the redesign of distributed DBMSs on InfiniBand.

Experimental Setup: In our micro-benchmarks we used two machines, each with an Intel Xeon E5-2660 v2 processor and 256GB RAM. Both machines were equipped with a Mellanox Connect-IB FDR 4x dual-port RNIC.
Each port of the RNIC has a bandwidth of 54.54Gbps (6.8GB/s) and is full-duplex. Additionally, each machine had a 1Gbps Ethernet NIC (with one port) connected to the same Ethernet switch. Each machine ran Ubuntu Server 14.04 and used the OFED 2.3.1 driver for the RNIC. In our experiments, we used only one port of the RNIC to better compare the InfiniBand results to the Ethernet results. In order to isolate low-level network properties, these micro-benchmarks were executed in single-threaded mode.

Throughput and Latency (Figure 2): For this experiment, we varied the message size from 32B up to 32MB to simulate the characteristics of different workloads (OLTP and OLAP) and measured the throughput and latency for IPoEth, IPoIB, and RDMA send/receive and write/read. We also measured the RDMA atomic operations, but since they only support a maximal message size of 8B and show the same latency and throughput as 8B READs, we omitted the results from the figure.

While all RDMA verbs saturate the InfiniBand network bandwidth of approximately 6.8GB/s for message sizes greater than 2KB, IPoIB only achieves a maximum throughput of 3.5GB/s, despite using the same InfiniBand hardware as RDMA. Moreover, the latency of a message (i.e., 1/2 RTT) over IPoIB is also higher than for RDMA. In fact, for small message sizes, the latency of IPoIB is much closer to the latency of the 1Gbps Ethernet network (IPoEth). For example, for a message size of 8B, the latency is 20µs for IPoIB and 30µs for IPoEth while an RDMA WRITE only takes 1µs. This is because the TCP/IP stack for IPoIB has a very high CPU overhead per message for small messages (as we will show later in Figure 3). For larger message sizes (≥ 1MB), the latency of IPoIB is closer to that of RDMA; however, it is still about 2.5× higher. For example, a 1MB message has a latency of 393µs on IPoIB but only 161µs on RDMA.
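
The figures quoted above can be cross-checked with a few lines of arithmetic. The sketch below is only a sanity check on the reported numbers, not part of the benchmark itself; the helper names are illustrative, and it assumes that 1MB means 2^20 bytes and that 1GB/s means 10^9 bytes per second, neither of which the text states.

```python
# Sanity check of the reported link rate and 1MB latencies.
# Inputs are the values quoted in the text; nothing here is measured.

def gbps_to_gb_per_s(gbps: float) -> float:
    """Convert a link rate in Gbit/s to GByte/s (8 bits per byte)."""
    return gbps / 8.0


def implied_throughput_gb_per_s(message_bytes: int, latency_us: float) -> float:
    """Throughput implied by sending one message in `latency_us` microseconds.

    Assumption: 1 GB/s = 10^9 bytes per second.
    """
    return message_bytes / (latency_us * 1e-6) / 1e9


PORT_RATE_GBPS = 54.54      # per-port bandwidth from the experimental setup
MESSAGE_BYTES = 2 ** 20     # assumption: "1MB" is 2^20 bytes
IPOIB_LATENCY_US = 393.0    # reported 1MB latency over IPoIB
RDMA_LATENCY_US = 161.0     # reported 1MB latency over RDMA

port_gb_per_s = gbps_to_gb_per_s(PORT_RATE_GBPS)        # about 6.8 GB/s
latency_ratio = IPOIB_LATENCY_US / RDMA_LATENCY_US      # about 2.44
rdma_gb_per_s = implied_throughput_gb_per_s(MESSAGE_BYTES, RDMA_LATENCY_US)
ipoib_gb_per_s = implied_throughput_gb_per_s(MESSAGE_BYTES, IPOIB_LATENCY_US)

if __name__ == "__main__":
    print(f"port bandwidth:     {port_gb_per_s:.2f} GB/s")
    print(f"IPoIB/RDMA latency: {latency_ratio:.2f}x")
    print(f"implied RDMA rate:  {rdma_gb_per_s:.2f} GB/s")
    print(f"implied IPoIB rate: {ipoib_gb_per_s:.2f} GB/s")
```

Under these assumptions, the throughput implied by the single-message latencies stays below the 6.8GB/s port bandwidth for RDMA and below the 3.5GB/s maximum for IPoIB, which is consistent with the reported results.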
An interesting result is that an RDMA WRITE and a SEND take only 1µs for message sizes less than 256B while an RDMA READ needs 2µs. This is because for WRITEs and SENDs, a payload of less than 256B can be inlined into the PIO, which avoids the subsequent DMA read [41].

Figure 3: CPU Overhead for Network Operations. (a) Client and (b) server CPU cycles (in 10^y) versus message size (32B to 32MB). Panel (a) plots IPoEth, IPoIB, and RDMA (all verbs); panel (b) plots IPoEth, IPoIB, RDMA (RD, WR), and RDMA (S/R).

CPU Overhead: We also measured the overhead (in CPU cycles) per message of different communication stacks on both the client and server. Again, we varied the message sizes as in the previous experiment. Figure 3 shows that RDMA has a constant overhead on the client and the server side that is independent of the message size. The reason is that the cost of registering a WQE on the RNIC is independent of the message size. The actual data transfer is executed by the RNIC, which acts as a co-processor to handle the given WQE. On the client side the overhead is around 450 cycles, independent of the RDMA verb used. The CPU overhead for atomic operations is actually the same. Moreover, as expected, on the server side only the RECEIVE verb causes a CPU overhead. All other verbs that are one-sided (READ/WRITE and the atomic operations) do not cause any overhead on the server side.

The overhead of IPoIB is very different from that of RDMA. In fact, it is much more similar to the overhead of the classical Ethernet-based TCP/IP stack (IPoEth). The major difference to RDMA is that for IPoEth and IPoIB the per-message overhead actually grows linearly with the message size once the message size exceeds the TCP window size (which was the default value of 1488B for IPoEth and 21888B for IPoIB in our experiment). Even more interesting is that for small message sizes, the per-message overhead of IPoIB is even higher than for IPoEth.
For example, an 8B message needs 7544 cycles for IPoEth and 13264 cycles for IPoIB.

3. RETHINKING THE ARCHITECTURE

In this section, we discuss why the traditional architecture for distributed in-memory DBMSs is not optimal for many real-world workloads and then present novel alternatives for fast RDMA-enabled networks. We then discuss research challenges that arise for these new architectures.

3.1 Architectures for Fast Networks

3.1.1 The Traditional Shared-Nothing Architecture

Figure 4(a) shows the classical shared-nothing (SN) architecture for distributed in-memory databases over slow networks. Here, the database state is partitioned over the main memory (RAM) of multiple nodes, where each node has direct access only to the database partition located in its local RAM. Furthermore, in order to implement distributed control-flow and data-flow, nodes communicate with each other using socket-based send/receive operations.

For efficient distributed query and transaction processing, the main goal is to maximize data locality for a given workload by applying locality-aware partitioning schemes or by leveraging communication-avoiding strategies (e.g., semi-joins). Ideally, no communication happens between the nodes. For many real-world workloads, however, network communication cannot be entirely avoided, resulting in large performance penalties for slow networks. For example, even when resorting to the best techniques for co-partitioning the tables [20, 46], it is not always possible to avoid expensive distributed join operations or distributed