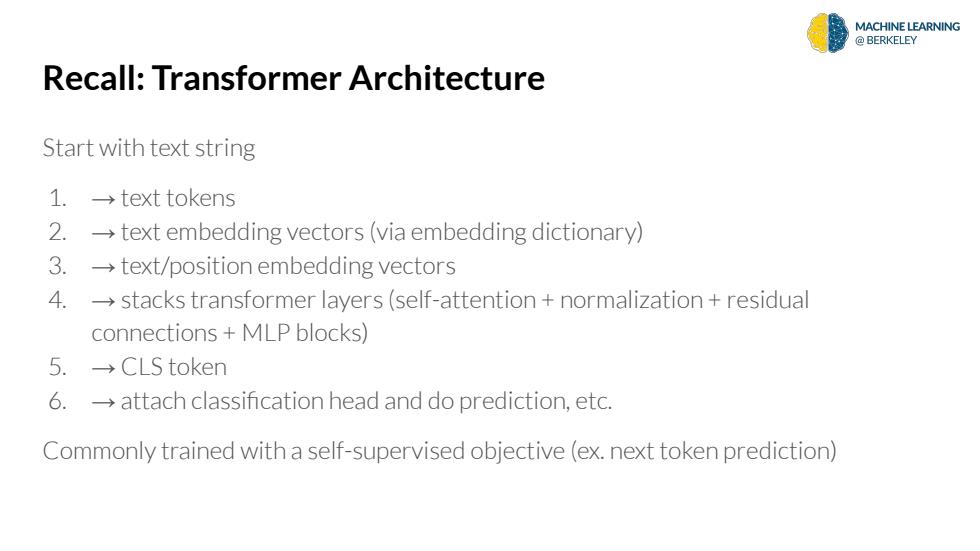

MACHINE LEARNING BERKELEY

Recall: Transformer Architecture

Start with a text string:

1. → text tokens
2. → text embedding vectors (via embedding dictionary)
3. → text/position embedding vectors
4. → stacks of transformer layers (self-attention + normalization + residual connections + MLP blocks)
5. → CLS token
6. → attach classification head and do prediction, etc.

Commonly trained with a self-supervised objective (e.g., next-token prediction).
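The six steps above can be sketched end to end in plain Python. This is a toy illustration under stated assumptions, not the slide's implementation: the whitespace tokenizer, the tiny `vocab`, the width `D = 8`, single-head attention, the post-norm layer ordering, prepending `[CLS]` at position 0, and all function names are hypothetical choices made for readability. The weights are random and untrained, so the output probabilities carry no meaning.

```python
import math
import random

random.seed(0)
D = 8  # embedding width (illustrative assumption)

def rand_mat(rows, cols):
    return [[random.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

def matvec(M, v):
    # M has shape (rows, cols); v has length cols
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def add(a, b):
    return [x + y for x, y in zip(a, b)]

def layer_norm(v, eps=1e-5):
    mean = sum(v) / len(v)
    var = sum((x - mean) ** 2 for x in v) / len(v)
    return [(x - mean) / math.sqrt(var + eps) for x in v]

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(xs, Wq, Wk, Wv):
    # Single-head scaled dot-product attention, no masking (assumption)
    qs = [matvec(Wq, x) for x in xs]
    ks = [matvec(Wk, x) for x in xs]
    vs = [matvec(Wv, x) for x in xs]
    out = []
    for q in qs:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(D) for k in ks]
        weights = softmax(scores)
        out.append([sum(w * v[i] for w, v in zip(weights, vs)) for i in range(D)])
    return out

def mlp(x, W1, W2):
    hidden = [max(0.0, h) for h in matvec(W1, x)]  # ReLU
    return matvec(W2, hidden)

def transformer_layer(xs, p):
    # Step 4: attention and MLP, each wrapped in residual + normalization
    attn = self_attention(xs, p["Wq"], p["Wk"], p["Wv"])
    xs = [layer_norm(add(x, a)) for x, a in zip(xs, attn)]
    return [layer_norm(add(x, mlp(x, p["W1"], p["W2"]))) for x in xs]

def init_params(vocab_size, max_len, n_layers, n_classes):
    return {
        "tok_emb": rand_mat(vocab_size, D),
        "pos_emb": rand_mat(max_len, D),
        "layers": [
            {"Wq": rand_mat(D, D), "Wk": rand_mat(D, D), "Wv": rand_mat(D, D),
             "W1": rand_mat(4 * D, D), "W2": rand_mat(D, 4 * D)}
            for _ in range(n_layers)
        ],
        "head": rand_mat(n_classes, D),
    }

def classify(text, vocab, params):
    tokens = ["[CLS]"] + text.lower().split()             # step 1 (CLS prepended)
    ids = [vocab.get(t, vocab["[UNK]"]) for t in tokens]
    xs = [params["tok_emb"][i] for i in ids]              # step 2
    xs = [add(x, params["pos_emb"][pos]) for pos, x in enumerate(xs)]  # step 3
    for layer in params["layers"]:                        # step 4
        xs = transformer_layer(xs, layer)
    cls = xs[0]                                           # step 5
    return softmax(matvec(params["head"], cls))           # step 6

vocab = {"[CLS]": 0, "[UNK]": 1, "the": 2, "movie": 3, "was": 4, "great": 5}
params = init_params(len(vocab), max_len=16, n_layers=2, n_classes=2)
probs = classify("The movie was great", vocab, params)
print(probs)  # two class probabilities that sum to 1
```

Reading the prediction from the `[CLS]` position is one common way to pool a sequence into a single vector; with a next-token objective the head would instead be applied at every position.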