正在加载图片...

Ouery code Database code F(x;;e)E Re.Then,the problem in (1)is transformed to the following problem: 11d 01010 职 Je,V)=∑∑[h(x)Tv-cS22 i=1j= Feature Learning Part Loss Function Part 7 =∑∑[sign((Fx;o)y-cs] i=1j=1 Figure 1:Model architecture of ADSH. s.Lv∈{-1,+1}e,j∈{1,2,,n} function part aims to learn binary hash codes which preserve In(2),we set F(xi;e)to be the output of the CNN-F model the supervised information(similarity)between query points in the feature learning part,and e is the parameter of the and database points.ADSH integrates these two components CNN-F model.Through this way,we seamlessly integrate into the same end-to-end framework.During training proce- the feature learning part and the loss function part into the same framework. dure,each part can give feedback to the other part. There still exists a problem for the formulation in (2), Please note that the feature learning is only performed for query points but not for database points.Furthermore,based which is that we cannot back-propagate the gradient to e on the deep neural network for feature learning,ADSH due to the sign(F(xi;e))function.Hence,in ADSH we adopts a deep hash function to generate hash codes for adopt the following objective function: query points,but the binary hash codes for database points m n are directly learned.Hence,ADSH treats the query points gO,V=∑∑amh(Fx;o)rv-cS]2 and database points in an asymmetric way.This asymmetric i=1j=1 property of ADSH is different from traditional deep super- S.t. VE{-1,+1]nxe, (3) vised hashing methods.Traditional deep supervised hash- ing methods adopt the same deep neural network to perform where we use tanh(.)to approximate the sign()function. feature learning for both query points and database points, In practice,we might be given only a set of database and then use the same deep hash function to generate binary points Y=fyj without query points.In this case. codes for both query points and database points. we can randomly sample m data points from database to Feature Learning Part In this paper,we adopt a convo- construct the query set.More specifically,we set X=Y where Y denotes the database points indexed by Here, lutional neural network(CNN)model from (Chatfield et al. 2014),i.e.,CNN-F model,to perform feature learning.This we use I ={1,2,...,n}to denote the indices of all the database points and fi1,i2,...,im}I to denote CNN-F model has also been adopted in DPSH (Li,Wang, the indices of the sampled query points.Accordingly,we set and Kang 2016)for feature learning.The CNN-F model S=S,where S{-1,+1)nxn denotes the supervised contains five convolutional layers and three fully-connected information(similarity)between pairs of all database points layers,the details of which can be found at(Chatfield et al. and SE{-1,+1)mxn denotes the sub-matrix formed by 2014;Li,Wang,and Kang 2016).In ADSH,the last layer of the rows of S indexed by Then,we can rewrite J(,V) CNN-F model is replaced by a fully-connected layer which can project the output of the first seven layers into Re space. as follows: Please note that the framework of ADSH is general enough a聘J(6,V)=∑∑[tanh(Fy;o)Tv,-cS]2 to adopt other deep neural networks to replace the CNN-F ien jer model for feature learning.Here,we just adopt CNN-F for illustration. s.tV∈{-1,+1}mxc (4 Loss Function Part To learn the hash codes which can Because C T,there are two representations for yi. preserve the similarity between query points and database Vi E One is the binary hash code vi in database,and points,one common way is to minimize the L2 loss between the other is the query representation tanh(F(yi;))We ad- the supervised information (similarity)and inner product of d an extra constraint to keep vi and tanh(F(yi;e))as close query-database binary code pairs.This can be formulated as as possible,Vi E 1.This is intuitively reasonable,because follows: tanh(F(yi;e))is the approximation of the binary code of yi.Then we get the final formulation of ADSH with only 映J(U,V)=∑∑(uy-c5)2 database points Y for training: =1j=1 J6,V)=∑∑anh(Fy:6)7y-cS2 st.Ue{-1,+1}mxc,V∈{-1,+1}nxc, ien jer u=h(x),i∈{1,2,,m} +y∑v:-tanh(Fy;Θ)2 (5) However,it is difficult to learn h(x;)due to the dis- crete output.We can set h(xi)=sign(F(xi;e)),where s.t.V∈{-1,+1}nxc,

h

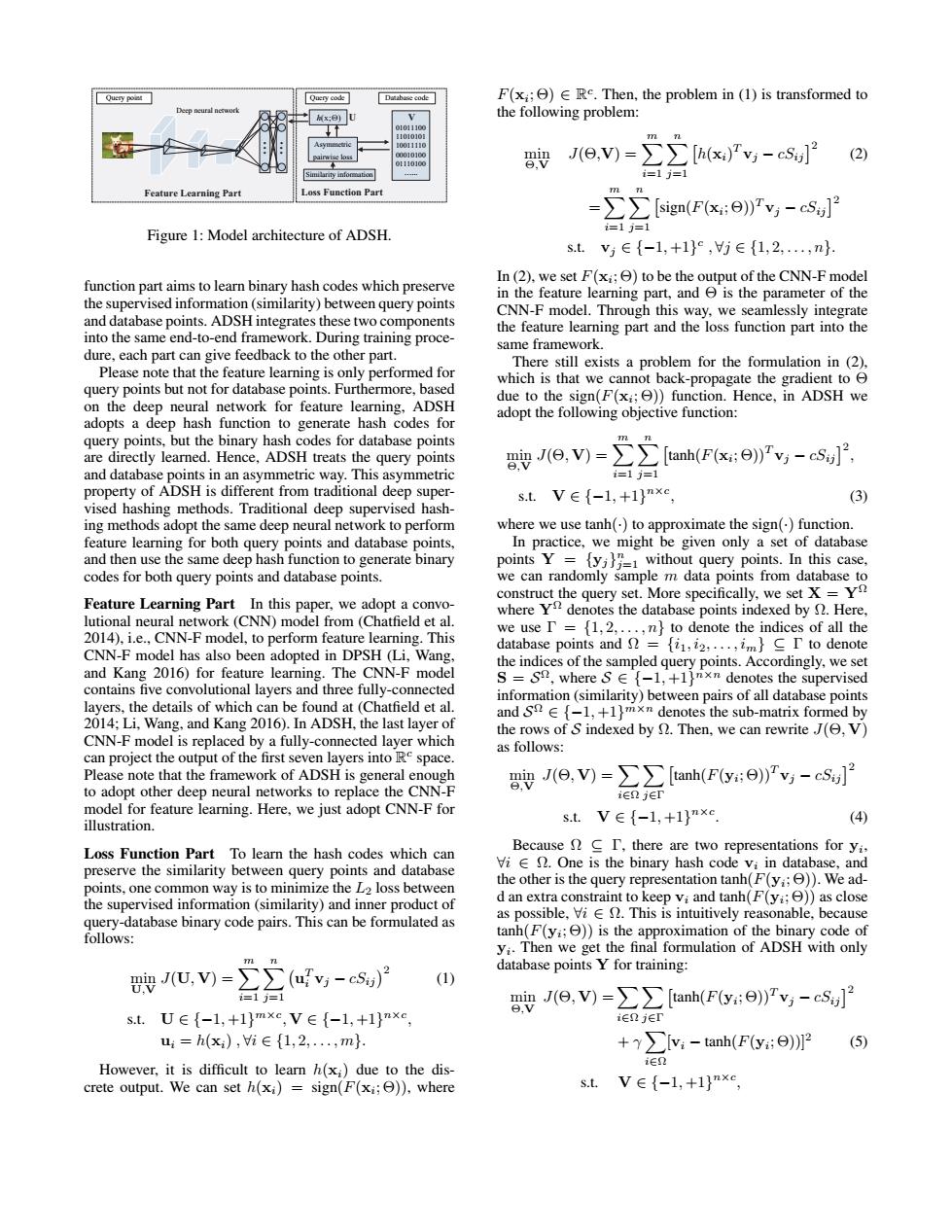

! Figure 1: Model architecture of ADSH. function part aims to learn binary hash codes which preserve the supervised information (similarity) between query points and database points. ADSH integrates these two components into the same end-to-end framework. During training procedure, each part can give feedback to the other part. Please note that the feature learning is only performed for query points but not for database points. Furthermore, based on the deep neural network for feature learning, ADSH adopts a deep hash function to generate hash codes for query points, but the binary hash codes for database points are directly learned. Hence, ADSH treats the query points and database points in an asymmetric way. This asymmetric property of ADSH is different from traditional deep supervised hashing methods. Traditional deep supervised hashing methods adopt the same deep neural network to perform feature learning for both query points and database points, and then use the same deep hash function to generate binary codes for both query points and database points. Feature Learning Part In this paper, we adopt a convolutional neural network (CNN) model from (Chatfield et al. 2014), i.e., CNN-F model, to perform feature learning. This CNN-F model has also been adopted in DPSH (Li, Wang, and Kang 2016) for feature learning. The CNN-F model contains five convolutional layers and three fully-connected layers, the details of which can be found at (Chatfield et al. 2014; Li, Wang, and Kang 2016). In ADSH, the last layer of CNN-F model is replaced by a fully-connected layer which can project the output of the first seven layers into R c space. Please note that the framework of ADSH is general enough to adopt other deep neural networks to replace the CNN-F model for feature learning. Here, we just adopt CNN-F for illustration. Loss Function Part To learn the hash codes which can preserve the similarity between query points and database points, one common way is to minimize the L2 loss between the supervised information (similarity) and inner product of query-database binary code pairs. This can be formulated as follows: min U,V J(U, V) = Xm i=1 Xn j=1 u T i vj − cSij 2 (1) s.t. U ∈ {−1, +1} m×c , V ∈ {−1, +1} n×c , ui = h(xi) , ∀i ∈ {1, 2, . . . , m}. However, it is difficult to learn h(xi) due to the discrete output. We can set h(xi) = sign(F(xi ; Θ)), where F(xi ; Θ) ∈ R c . Then, the problem in (1) is transformed to the following problem: min Θ,V J(Θ,V) = Xm i=1 Xn j=1 h(xi) T vj − cSij 2 (2) = Xm i=1 Xn j=1 sign(F(xi ; Θ))T vj − cSij 2 s.t. vj ∈ {−1, +1} c , ∀j ∈ {1, 2, . . . , n}. In (2), we set F(xi ; Θ) to be the output of the CNN-F model in the feature learning part, and Θ is the parameter of the CNN-F model. Through this way, we seamlessly integrate the feature learning part and the loss function part into the same framework. There still exists a problem for the formulation in (2), which is that we cannot back-propagate the gradient to Θ due to the sign(F(xi ; Θ)) function. Hence, in ADSH we adopt the following objective function: min Θ,V J(Θ, V) = Xm i=1 Xn j=1 tanh(F(xi ; Θ))T vj − cSij 2 , s.t. V ∈ {−1, +1} n×c , (3) where we use tanh(·) to approximate the sign(·) function. In practice, we might be given only a set of database points Y = {yj} n j=1 without query points. In this case, we can randomly sample m data points from database to construct the query set. More specifically, we set X = YΩ where YΩ denotes the database points indexed by Ω. Here, we use Γ = {1, 2, . . . , n} to denote the indices of all the database points and Ω = {i1, i2, . . . , im} ⊆ Γ to denote the indices of the sampled query points. Accordingly, we set S = S Ω, where S ∈ {−1, +1} n×n denotes the supervised information (similarity) between pairs of all database points and S Ω ∈ {−1, +1} m×n denotes the sub-matrix formed by the rows of S indexed by Ω. Then, we can rewrite J(Θ, V) as follows: min Θ,V J(Θ, V) = X i∈Ω X j∈Γ tanh(F(yi ; Θ))T vj − cSij 2 s.t. V ∈ {−1, +1} n×c . (4) Because Ω ⊆ Γ, there are two representations for yi , ∀i ∈ Ω. One is the binary hash code vi in database, and the other is the query representation tanh(F(yi ; Θ)). We add an extra constraint to keep vi and tanh(F(yi ; Θ)) as close as possible, ∀i ∈ Ω. This is intuitively reasonable, because tanh(F(yi ; Θ)) is the approximation of the binary code of yi . Then we get the final formulation of ADSH with only database points Y for training: min Θ,V J(Θ, V) =X i∈Ω X j∈Γ tanh(F(yi ; Θ))T vj − cSij 2 + γ X i∈Ω [vi − tanh(F(yi ; Θ))]2 (5) s.t. V ∈ {−1, +1} n×c ,�������������������