
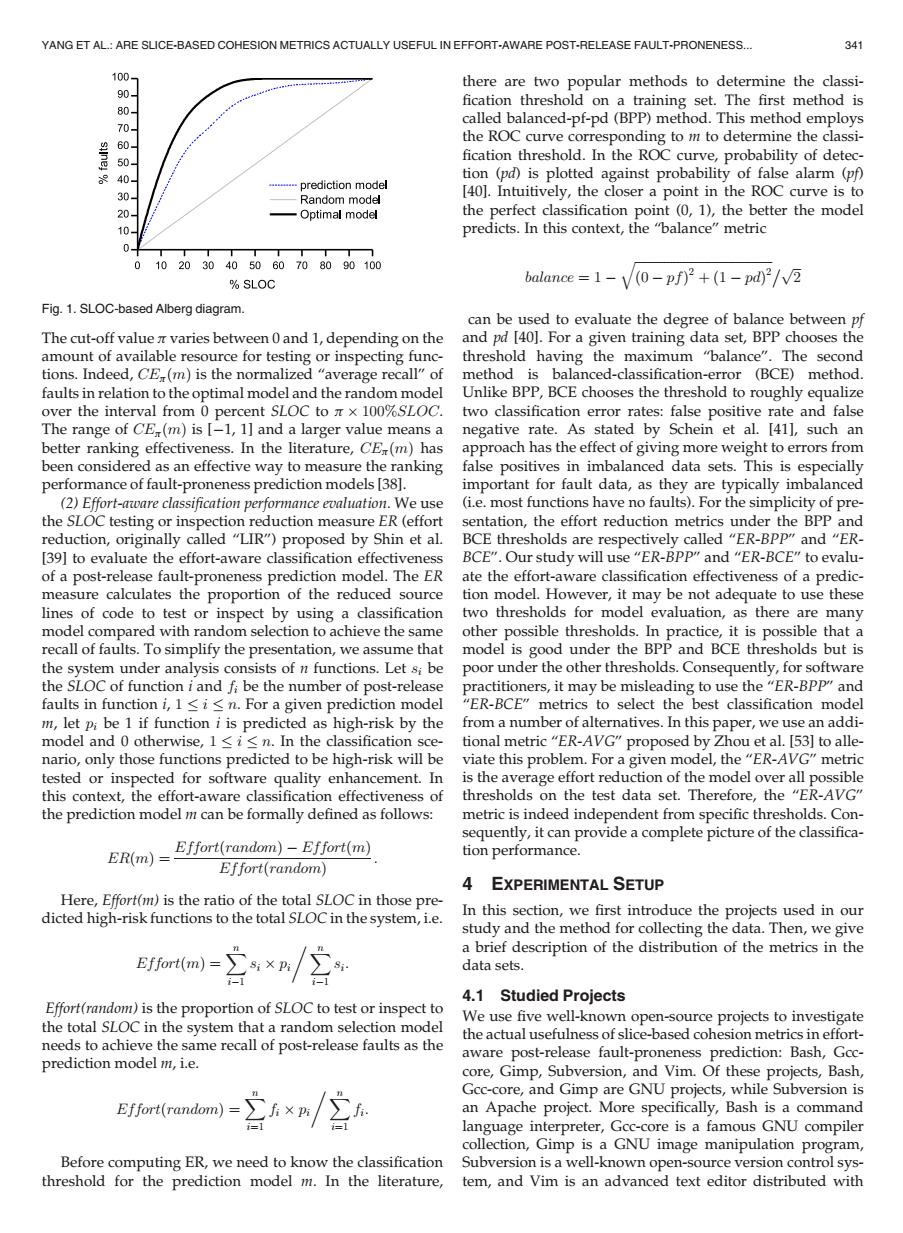

YANG ET AL.: ARE SLICE-BASED COHESION METRICS ACTUALLY USEFUL IN EFFORT-AWARE POST-RELEASE FAULT-PRONENESS... 341

Fig. 1. SLOC-based Alberg diagram (x-axis: SLOC, 0 to 100 percent; y-axis: 0 to 100 percent; curves: prediction model, random model, optimal model).

The cut-off value π varies between 0 and 1, depending on the amount of available resource for testing or inspecting functions. Indeed, $CE_\pi(m)$ is the normalized “average recall” of faults in relation to the optimal model and the random model over the interval from 0 percent SLOC to π × 100 percent SLOC. The range of $CE_\pi(m)$ is [−1, 1], and a larger value indicates better ranking effectiveness.
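
The $CE_\pi(m)$ computation can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation. The definition of $CE_\pi$ appears before this page, so the sketch assumes the usual cost-effectiveness form $CE_\pi(m) = (Area_\pi(m) - Area_\pi(random)) / (Area_\pi(optimal) - Area_\pi(random))$. In that form, $Area_\pi$ is the area under the SLOC-based Alberg curve (cumulative share of faults against cumulative share of SLOC) from 0 to π. The sketch makes three further assumptions. First, the prediction model ranks functions in descending order of a given score; how that score is derived is left open here. Second, the optimal model ranks functions by fault density (faults per SLOC). Third, the random model follows the diagonal. The helper names `alberg_area` and `ce_pi` are hypothetical. The inputs are also assumed to have positive SLOC for every function, at least one fault, and 0 < π ≤ 1.

```python
from typing import List, Sequence


def alberg_area(sloc: Sequence[float], faults: Sequence[float],
                order: List[int], cutoff: float) -> float:
    """Area under the Alberg curve (cumulative fault share vs. cumulative
    SLOC share) from x = 0 to x = cutoff, visiting functions in `order`."""
    total_sloc, total_faults = float(sum(sloc)), float(sum(faults))
    area, x0, y0 = 0.0, 0.0, 0.0
    for i in order:
        x1 = x0 + sloc[i] / total_sloc
        y1 = y0 + faults[i] / total_faults
        if x1 >= cutoff:
            # Interpolate the curve linearly at x = cutoff, add the last trapezoid, stop.
            y_cut = y0 + (y1 - y0) * (cutoff - x0) / (x1 - x0) if x1 > x0 else y1
            return area + (cutoff - x0) * (y0 + y_cut) / 2.0
        area += (x1 - x0) * (y0 + y1) / 2.0  # trapezoid under this function's segment
        x0, y0 = x1, y1
    return area  # reached only if rounding leaves the total SLOC share just below cutoff


def ce_pi(sloc: Sequence[float], faults: Sequence[float],
          scores: Sequence[float], pi: float) -> float:
    """Assumed CE_pi: model area minus random area, normalized by optimal minus random."""
    idx = range(len(sloc))
    model_order = sorted(idx, key=lambda i: scores[i], reverse=True)
    # Assumption: the optimal model ranks by fault density (faults per SLOC).
    optimal_order = sorted(idx, key=lambda i: faults[i] / sloc[i], reverse=True)
    area_random = pi * pi / 2.0  # area under the diagonal y = x up to pi
    area_model = alberg_area(sloc, faults, model_order, pi)
    area_optimal = alberg_area(sloc, faults, optimal_order, pi)
    return (area_model - area_random) / (area_optimal - area_random)


if __name__ == "__main__":
    # Hypothetical toy data: four equally sized functions, only the first is faulty.
    sloc, faults = [10, 10, 10, 10], [2, 0, 0, 0]
    print(ce_pi(sloc, faults, [0.9, 0.1, 0.2, 0.3], pi=1.0))  # 1.0 (same as optimal)
    print(ce_pi(sloc, faults, [0.0, 0.9, 0.8, 0.7], pi=1.0))  # -1.0 (faulty function last)
```

With π = 1 the two toy rankings reach the extremes of the [−1, 1] range. A smaller π restricts the comparison to the first π × 100 percent of SLOC, which matches a limited inspection budget.
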
In the literature, $CE_\pi(m)$ has been considered an effective way to measure the ranking performance of fault-proneness prediction models [38].

(2) Effort-aware classification performance evaluation. We use the SLOC testing or inspection reduction measure ER (effort reduction, originally called “LIR”) proposed by Shin et al. [39] to evaluate the effort-aware classification effectiveness of a post-release fault-proneness prediction model. ER measures the proportion by which the source lines of code to be tested or inspected are reduced when a classification model is used instead of random selection to achieve the same recall of faults. To simplify the presentation, we assume that the system under analysis consists of $n$ functions. Let $s_i$ be the SLOC of function $i$ and $f_i$ be the number of post-release faults in function $i$, $1 \le i \le n$. For a given prediction model $m$, let $p_i$ be 1 if function $i$ is predicted as high-risk by the model and 0 otherwise, $1 \le i \le n$. In the classification scenario, only those functions predicted to be high-risk will be tested or inspected for software quality enhancement. In this context, the effort-aware classification effectiveness of the prediction model $m$ can be formally defined as follows:

$$\mathrm{ER}(m) = \frac{\mathrm{Effort}(random) - \mathrm{Effort}(m)}{\mathrm{Effort}(random)}.$$

Here, Effort(m) is the ratio of the total SLOC in the predicted high-risk functions to the total SLOC in the system, i.e.,

$$\mathrm{Effort}(m) = \frac{\sum_{i=1}^{n} s_i \times p_i}{\sum_{i=1}^{n} s_i}.$$

Effort(random) is the proportion of the total SLOC in the system that a random selection model needs to test or inspect to achieve the same recall of post-release faults as the prediction model $m$. Random selection is expected to detect faults in proportion to the SLOC it covers, so this proportion equals the fault recall of $m$, i.e.,

$$\mathrm{Effort}(random) = \frac{\sum_{i=1}^{n} f_i \times p_i}{\sum_{i=1}^{n} f_i}.$$

Before computing ER, we need to know the classification threshold for the prediction model $m$. In the literature, there are two popular methods to determine the classification threshold on a training set. The first method is called the balanced-pf-pd (BPP) method.
This method employs the ROC curve corresponding to $m$ to determine the classification threshold. In the ROC curve, the probability of detection (pd) is plotted against the probability of false alarm (pf) [40]. Intuitively, the closer a point on the ROC curve is to the perfect classification point (0, 1), the better the model predicts. In this context, the “balance” metric

$$balance = 1 - \frac{\sqrt{(0 - pf)^2 + (1 - pd)^2}}{\sqrt{2}}$$

can be used to evaluate the degree of balance between pf and pd [40]. For a given training data set, BPP chooses the threshold with the maximum “balance”. The second method is the balanced-classification-error (BCE) method. Unlike BPP, BCE chooses the threshold that roughly equalizes two classification error rates: the false positive rate and the false negative rate. As stated by Schein et al. [41], such an approach has the effect of giving more weight to errors from false positives in imbalanced data sets. This is especially important for fault data, as they are typically imbalanced (i.e., most functions have no faults). For simplicity of presentation, the effort reduction metrics under the BPP and BCE thresholds are called “ER-BPP” and “ER-BCE”, respectively. Our study will use “ER-BPP” and “ER-BCE” to evaluate the effort-aware classification effectiveness of a prediction model. However, these two thresholds alone may not be adequate for model evaluation, as there are many other possible thresholds. In practice, a model may perform well under the BPP and BCE thresholds but poorly under other thresholds. Consequently, for software practitioners, relying on the “ER-BPP” and “ER-BCE” metrics alone to select the best classification model from a number of alternatives may be misleading. In this paper, we use an additional metric, “ER-AVG”, proposed by Zhou et al. [53], to alleviate this problem.
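
The ER computation, the two threshold rules, and the threshold-averaged ER-AVG can be sketched in Python as follows. This is a minimal sketch, not the authors' implementation, and it rests on several assumptions:

- A function counts as faulty (positive) when it has at least one post-release fault.
- A function is predicted high-risk when its score is at or above the threshold.
- The candidate thresholds are the distinct scores observed.
- Both classes occur in the training data, and at least one fault occurs in the test data.

All function names (`effort_reduction`, `pf_pd`, `bpp_threshold`, `bce_threshold`, `er_avg`) are hypothetical. `er_avg` follows ER-AVG only as paraphrased in this section, i.e., the average ER over all candidate thresholds on the test set. It skips cut-offs at which no fault is detected, because ER is undefined there.

```python
from math import sqrt
from typing import List, Sequence, Tuple


def effort_reduction(sloc: Sequence[float], faults: Sequence[float],
                     pred: Sequence[int]) -> float:
    """ER(m) = (Effort(random) - Effort(m)) / Effort(random) for 0/1 predictions p_i."""
    effort_m = sum(s * p for s, p in zip(sloc, pred)) / sum(sloc)
    # Random selection is expected to need a SLOC share equal to m's fault recall.
    effort_random = sum(f * p for f, p in zip(faults, pred)) / sum(faults)
    if effort_random == 0:
        raise ValueError("ER is undefined when no post-release fault is detected")
    return (effort_random - effort_m) / effort_random


def pf_pd(scores: Sequence[float], faulty: Sequence[bool], t: float) -> Tuple[float, float]:
    """(pf, pd) when functions with score >= t are predicted high-risk (assumed rule)."""
    tp = sum(1 for s, y in zip(scores, faulty) if s >= t and y)
    fp = sum(1 for s, y in zip(scores, faulty) if s >= t and not y)
    pos = sum(1 for y in faulty if y)
    return fp / (len(faulty) - pos), tp / pos


def bpp_threshold(scores: Sequence[float], faulty: Sequence[bool]) -> float:
    """Candidate threshold with maximum balance = 1 - sqrt((0-pf)^2 + (1-pd)^2) / sqrt(2)."""
    def balance(t: float) -> float:
        pf, pd = pf_pd(scores, faulty, t)
        return 1 - sqrt((0 - pf) ** 2 + (1 - pd) ** 2) / sqrt(2)
    return max(sorted(set(scores)), key=balance)


def bce_threshold(scores: Sequence[float], faulty: Sequence[bool]) -> float:
    """Candidate threshold where false positive rate (pf) and false negative rate (1 - pd) are closest."""
    def gap(t: float) -> float:
        pf, pd = pf_pd(scores, faulty, t)
        return abs(pf - (1 - pd))
    return min(sorted(set(scores)), key=gap)


def er_avg(sloc: Sequence[float], faults: Sequence[float], scores: Sequence[float]) -> float:
    """Mean ER over every distinct test-set score used as a cut-off; zero-recall cut-offs skipped."""
    values: List[float] = []
    for t in sorted(set(scores)):
        pred = [1 if s >= t else 0 for s in scores]
        if any(f > 0 and p for f, p in zip(faults, pred)):
            values.append(effort_reduction(sloc, faults, pred))
    return sum(values) / len(values)


if __name__ == "__main__":
    # Hypothetical toy data; training and test sets coincide only to keep the example short.
    sloc, faults, scores = [100, 50, 200, 25], [3, 0, 1, 0], [0.9, 0.2, 0.6, 0.4]
    faulty = [f > 0 for f in faults]
    t = bpp_threshold(scores, faulty)
    pred = [1 if s >= t else 0 for s in scores]
    print(t, round(effort_reduction(sloc, faults, pred), 4))  # 0.6 0.2
    print(round(er_avg(sloc, faults, scores), 4))  # 0.2444
```

In an actual evaluation, the threshold would be chosen on the training set with `bpp_threshold` or `bce_threshold`. ER would then be computed on the test set with predictions `[1 if s >= t else 0 for s in test_scores]`, which gives ER-BPP or ER-BCE. `er_avg` needs no training-set threshold at all, which is the point of the ER-AVG metric.
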
For a given model, the “ER-AVG” metric is the average effort reduction of the model over all possible thresholds on the test data set. Therefore, the “ER-AVG” metric is independent of any specific threshold. Consequently, it can provide a complete picture of the classification performance.

4 EXPERIMENTAL SETUP

In this section, we first introduce the projects used in our study and the method for collecting the data. Then, we give a brief description of the distribution of the metrics in the data sets.

4.1 Studied Projects

We use five well-known open-source projects to investigate the actual usefulness of slice-based cohesion metrics in effort-aware post-release fault-proneness prediction: Bash, Gcc-core, Gimp, Subversion, and Vim. Of these projects, Bash, Gcc-core, and Gimp are GNU projects, while Subversion is an Apache project. More specifically, Bash is a command language interpreter, Gcc-core is part of the well-known GNU compiler collection, Gimp is a GNU image manipulation program, Subversion is a well-known open-source version control system, and Vim is an advanced text editor distributed with