正在加载图片...

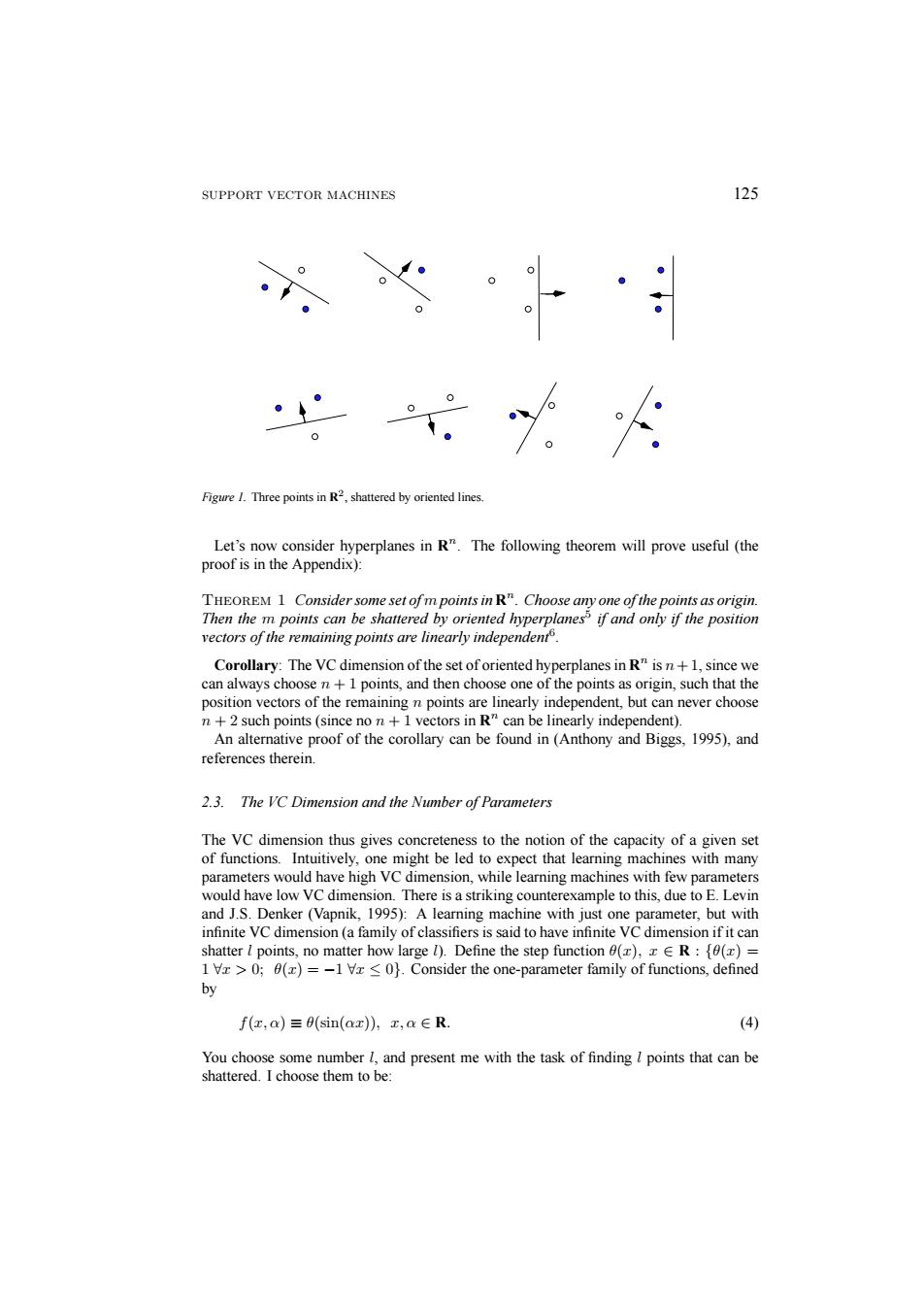

SUPPORT VECTOR MACHINES 125 Figure /Three points in R,shattered by oriented lines. Let's now consider hyperplanes in R".The following theorem will prove useful(the proof is in the Appendix): THEOREM 1 Consider some set ofm points in R".Choose any one of the points as origin. Then the m points can be shattered by oriented hyperplanes if and only if the position vectors of the remaining points are linearly independent Corollary:The VC dimension of the set of oriented hyperplanes in R"is n+1,since we can always choose n+1 points,and then choose one of the points as origin,such that the position vectors of the remaining n points are linearly independent,but can never choose n+2 such points(since no n+1 vectors in R"can be linearly independent). An alternative proof of the corollary can be found in (Anthony and Biggs,1995),and references therein. 2.3.The VC Dimension and the Number of Parameters The VC dimension thus gives concreteness to the notion of the capacity of a given set of functions.Intuitively,one might be led to expect that learning machines with many parameters would have high VC dimension,while learning machines with few parameters would have low VC dimension.There is a striking counterexample to this,due to E.Levin and J.S.Denker(Vapnik,1995):A learning machine with just one parameter,but with infinite VC dimension (a family of classifiers is said to have infinite VC dimension if it can shatter I points,no matter how large l).Define the step function (z),xER:{0()= 1 Vz >0;0(z)=-1Vz <0}.Consider the one-parameter family of functions,defined by f(x,a)≡(sin(ax),x,a∈R (4) You choose some number l,and present me with the task of finding l points that can be shattered.I choose them to be:SUPPORT VECTOR MACHINES 125 Figure 1. Three points in R2, shattered by oriented lines. Let’s now consider hyperplanes in Rn. The following theorem will prove useful (the proof is in the Appendix): Theorem 1 Consider some set of m points in Rn. Choose any one of the points as origin. Then the m points can be shattered by oriented hyperplanes5 if and only if the position vectors of the remaining points are linearly independent6. Corollary: The VC dimension of the set of oriented hyperplanes in Rn is n+ 1, since we can always choose n + 1 points, and then choose one of the points as origin, such that the position vectors of the remaining n points are linearly independent, but can never choose n + 2 such points (since no n + 1 vectors in Rn can be linearly independent). An alternative proof of the corollary can be found in (Anthony and Biggs, 1995), and references therein. 2.3. The VC Dimension and the Number of Parameters The VC dimension thus gives concreteness to the notion of the capacity of a given set of functions. Intuitively, one might be led to expect that learning machines with many parameters would have high VC dimension, while learning machines with few parameters would have low VC dimension. There is a striking counterexample to this, due to E. Levin and J.S. Denker (Vapnik, 1995): A learning machine with just one parameter, but with infinite VC dimension (a family of classifiers is said to have infinite VC dimension if it can shatter l points, no matter how large l). Define the step function θ(x), x ∈ R : {θ(x) = 1 ∀x > 0; θ(x) = −1 ∀x ≤ 0}. Consider the one-parameter family of functions, defined by f(x, α) ≡ θ(sin(αx)), x, α ∈ R. (4) You choose some number l, and present me with the task of finding l points that can be shattered. I choose them to be: