
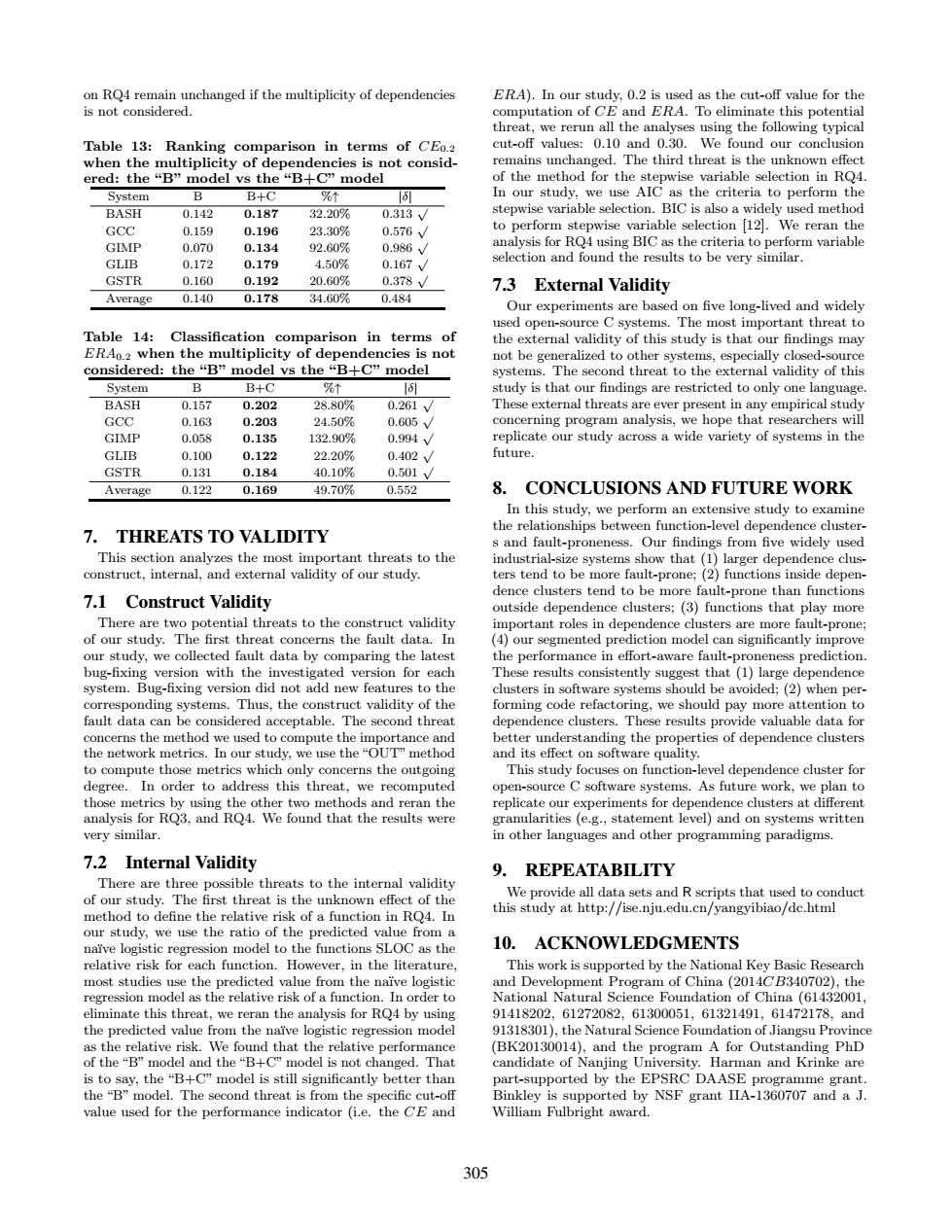

on RQ4 remain unchanged if the multiplicity of dependencies is not considered.

Table 13: Ranking comparison in terms of $\mathrm{CE}_{0.2}$ when the multiplicity of dependencies is not considered: the “B” model vs. the “B+C” model

| System | B | B+C | %↑ | \|δ\| |
|---|---|---|---|---|
| BASH | 0.142 | 0.187 | 32.20% | 0.313 √ |
| GCC | 0.159 | 0.196 | 23.30% | 0.576 √ |
| GIMP | 0.070 | 0.134 | 92.60% | 0.986 √ |
| GLIB | 0.172 | 0.179 | 4.50% | 0.167 √ |
| GSTR | 0.160 | 0.192 | 20.60% | 0.378 √ |
| Average | 0.140 | 0.178 | 34.60% | 0.484 |

Table 14: Classification comparison in terms of $\mathrm{ERA}_{0.2}$ when the multiplicity of dependencies is not considered: the “B” model vs. the “B+C” model

| System | B | B+C | %↑ | \|δ\| |
|---|---|---|---|---|
| BASH | 0.157 | 0.202 | 28.80% | 0.261 √ |
| GCC | 0.163 | 0.203 | 24.50% | 0.605 √ |
| GIMP | 0.058 | 0.135 | 132.90% | 0.994 √ |
| GLIB | 0.100 | 0.122 | 22.20% | 0.402 √ |
| GSTR | 0.131 | 0.184 | 40.10% | 0.501 √ |
| Average | 0.122 | 0.169 | 49.70% | 0.552 |

7. THREATS TO VALIDITY

This section analyzes the most important threats to the construct, internal, and external validity of our study.

7.1 Construct Validity

There are two potential threats to the construct validity of our study. The first concerns the fault data. For each system, we collected fault data by comparing the latest bug-fixing version with the investigated version. Because the bug-fixing versions did not add new features to the corresponding systems, the construct validity of the fault data can be considered acceptable. The second threat concerns the method we used to compute the importance and network metrics. In our study, we compute these metrics with the “OUT” method, which considers only the outgoing degree. To address this threat, we recomputed the metrics using the other two methods and reran the analyses for RQ3 and RQ4. The results were very similar.
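To make the “OUT” setting in Section 7.1 concrete, the following Python sketch counts each function's degree inside a dependence cluster when only outgoing edges are considered. It is a hypothetical illustration, not the study's implementation. Several details are assumptions: the edge representation, the function name `degrees`, the labels "IN" and "ALL" for the alternative directions, and the reading of "multiplicity" as repeated dependencies between the same pair of functions (the setting behind Tables 13 and 14). Raw degree also stands in for the study's richer importance and network metrics.

```python
from collections import Counter
from typing import Iterable, Literal, Tuple

# Hypothetical directed dependence edge (src, dst): function `src` depends on `dst`.
# Repeated pairs model multiple dependencies between the same two functions.
Edge = Tuple[str, str]


def degrees(
    edges: Iterable[Edge],
    mode: Literal["OUT", "IN", "ALL"] = "OUT",
    count_multiplicity: bool = True,
) -> Counter:
    """Per-function degree inside one dependence cluster (illustrative only).

    mode="OUT" counts only outgoing edges, mirroring the study's setting;
    "IN" and "ALL" are assumed names for the alternative directions.
    count_multiplicity=False collapses repeated (src, dst) pairs into one edge.
    """
    edge_list = list(edges)
    if not count_multiplicity:
        # dict.fromkeys keeps the first occurrence of each distinct pair, in order.
        edge_list = list(dict.fromkeys(edge_list))
    result: Counter = Counter()
    for src, dst in edge_list:
        if mode in ("OUT", "ALL"):
            result[src] += 1  # outgoing edge of src
        if mode in ("IN", "ALL"):
            result[dst] += 1  # incoming edge of dst
    return result


# Hypothetical cluster: f depends on g twice and on h once; g depends on f.
cluster = [("f", "g"), ("f", "g"), ("f", "h"), ("g", "f")]
print(degrees(cluster))                            # f -> 3, g -> 1
print(degrees(cluster, count_multiplicity=False))  # f -> 2, g -> 1
print(degrees(cluster, mode="ALL"))                # f -> 4, g -> 3, h -> 1
```

Changing `mode` or `count_multiplicity` only changes which edges are counted. That is the kind of variation the sensitivity reruns in this section examine.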

7.2 Internal Validity

There are three possible threats to the internal validity of our study. The first is the unknown effect of the method used to define the relative risk of a function in RQ4. In our study, the relative risk of each function is the ratio of the value predicted by a naïve logistic regression model to the function's SLOC. However, most studies in the literature use the predicted value from the naïve logistic regression model itself as the relative risk of a function. To eliminate this threat, we reran the analysis for RQ4 using the predicted value from the naïve logistic regression model as the relative risk. The relative performance of the “B” model and the “B+C” model did not change; that is, the “B+C” model is still significantly better than the “B” model. The second threat comes from the specific cut-off value used for the performance indicators (i.e., CE and ERA). In our study, 0.2 is used as the cut-off value for computing CE and ERA. To eliminate this potential threat, we reran all the analyses using two other typical cut-off values, 0.10 and 0.30, and found that our conclusions remain unchanged. The third threat is the unknown effect of the criterion used for stepwise variable selection in RQ4. In our study, we use AIC as the criterion for stepwise variable selection. BIC is another widely used criterion for stepwise variable selection [12]. We reran the analysis for RQ4 using BIC as the selection criterion and found the results to be very similar.

7.3 External Validity

Our experiments are based on five long-lived and widely used open-source C systems. The most important threat to the external validity of this study is that our findings may not generalize to other systems, especially closed-source systems. The second threat is that our findings are restricted to a single language.
Such external threats are present in any empirical study concerning program analysis, and we hope that researchers will replicate our study across a wide variety of systems in the future.

8. CONCLUSIONS AND FUTURE WORK

In this paper, we perform an extensive study to examine the relationships between function-level dependence clusters and fault-proneness. Our findings from five widely used industrial-size systems show that (1) larger dependence clusters tend to be more fault-prone; (2) functions inside dependence clusters tend to be more fault-prone than functions outside dependence clusters; (3) functions that play more important roles in dependence clusters are more fault-prone; and (4) our segmented prediction model can significantly improve performance in effort-aware fault-proneness prediction. These results consistently suggest that (1) large dependence clusters in software systems should be avoided; and (2) when performing code refactoring, we should pay more attention to dependence clusters. These results provide valuable data for better understanding the properties of dependence clusters and their effect on software quality.

This study focuses on function-level dependence clusters in open-source C software systems. As future work, we plan to replicate our experiments for dependence clusters at different granularities (e.g., statement level) and on systems written in other languages and programming paradigms.

9. REPEATABILITY

All data sets and R scripts used to conduct this study are available at http://ise.nju.edu.cn/yangyibiao/dc.html

10. ACKNOWLEDGMENTS

This work is supported by the National Key Basic Research and Development Program of China (2014CB340702), the National Natural Science Foundation of China (61432001, 91418202, 61272082, 61300051, 61321491, 61472178, and 91318301), the Natural Science Foundation of Jiangsu Province (BK20130014), and the program A for Outstanding PhD candidate of Nanjing University.
Harman and Krinke are part-supported by the EPSRC DAASE programme grant. Binkley is supported by NSF grant IIA-1360707 and a J. William Fulbright award.