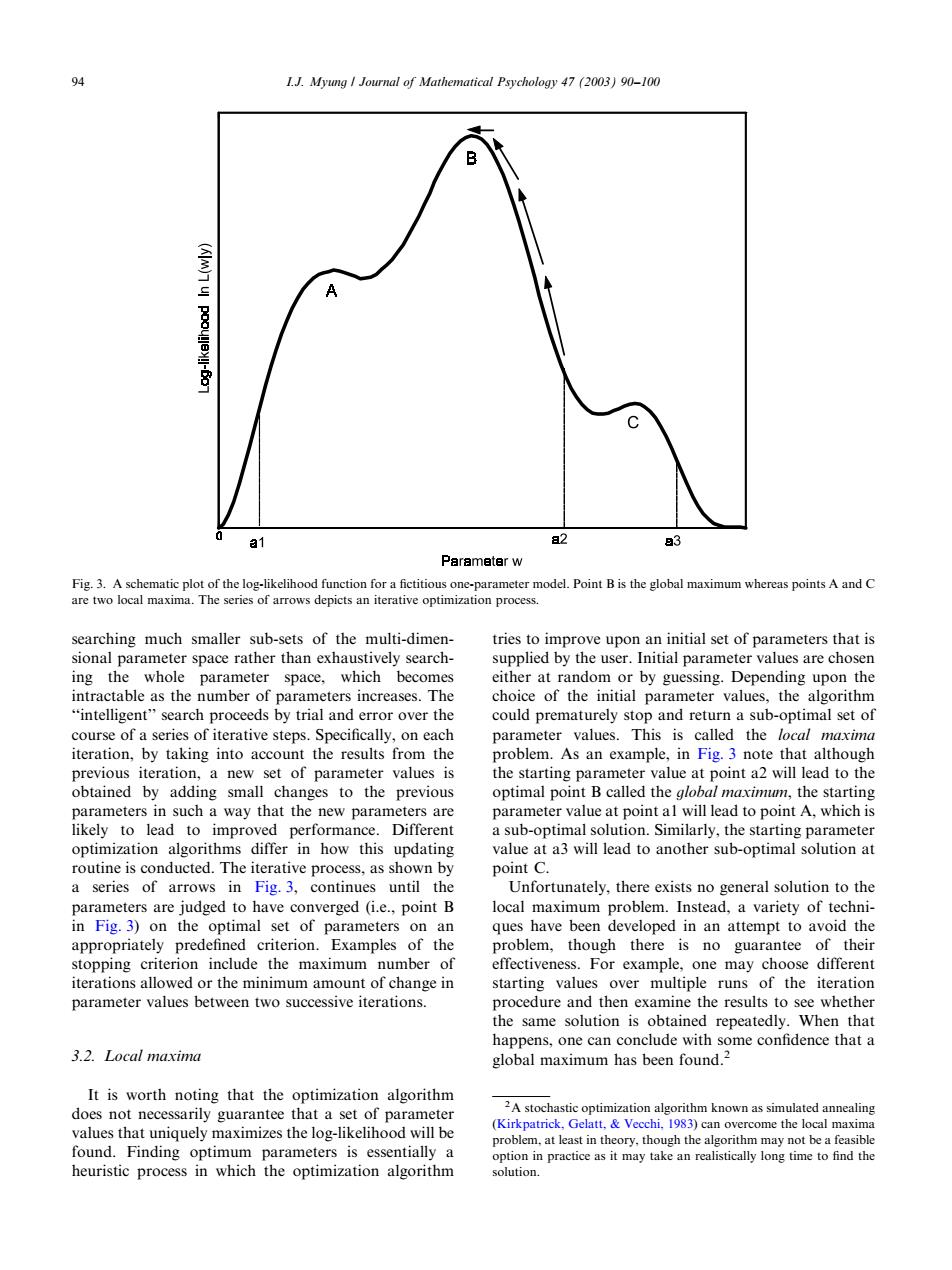

94 I.J. Myung / Journal of Mathematical Psychology 47 (2003) 90–100
searching much smaller sub-sets of the multi-dimensional parameter space rather than exhaustively searching the whole parameter space, which becomes intractable as the number of parameters increases. The "intelligent" search proceeds by trial and error over the course of a series of iterative steps. Specifically, on each iteration, by taking into account the results from the previous iteration, a new set of parameter values is obtained by adding small changes to the previous parameters in such a way that the new parameters are likely to lead to improved performance.
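The update rule described above can be illustrated with a minimal hill-climbing sketch. It is not the algorithm used in the paper. The binomial log-likelihood (`y = 7` successes in `n = 10` trials), the function names, the initial step size, and the two stopping rules (an iteration cap and a minimum step size) are all assumptions chosen for illustration.

```python
import math

def log_likelihood(w, y=7, n=10):
    """Hypothetical binomial log-likelihood of success probability w
    (y successes in n trials; the constant term is dropped)."""
    return y * math.log(w) + (n - y) * math.log(1.0 - w)

def hill_climb(f, w0, step=0.1, tol=1e-6, max_iter=1000,
               lo=1e-9, hi=1.0 - 1e-9):
    """Repeatedly nudge w by +/- step, keeping only changes that raise f."""
    w, n_iter = w0, 0
    # Stop when the iteration cap is hit or the step becomes negligible.
    while n_iter < max_iter and step >= tol:
        n_iter += 1
        # Candidate updates: small changes to the previous value,
        # clipped so that w stays strictly inside (0, 1).
        best = max((min(hi, w + step), max(lo, w - step)), key=f)
        if f(best) > f(w):
            w = best        # improvement: accept the new value
        else:
            step /= 2.0     # no improvement: try a smaller change
    return w, n_iter

w_hat, n_iter = hill_climb(log_likelihood, w0=0.2)
print(f"estimate = {w_hat:.4f} after {n_iter} iterations")
```

For this particular likelihood the maximum is known analytically to be y/n = 0.7, so the estimate can be checked against it. Real optimization routines use more refined update rules, but the accept-if-better loop is the same basic idea.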
Different optimization algorithms differ in how this updating routine is conducted. The iterative process, as shown by the series of arrows in Fig. 3, continues until the parameters are judged to have converged (i.e., point B in Fig. 3) on the optimal set of parameters according to an appropriately predefined criterion. Examples of the stopping criterion include the maximum number of iterations allowed or the minimum amount of change in parameter values between two successive iterations.

3.2. Local maxima

It is worth noting that the optimization algorithm does not necessarily guarantee that a set of parameter values that uniquely maximizes the log-likelihood will be found. Finding optimum parameters is essentially a heuristic process in which the optimization algorithm tries to improve upon an initial set of parameters that is supplied by the user. Initial parameter values are chosen either at random or by guessing. Depending upon the choice of the initial parameter values, the algorithm could prematurely stop and return a sub-optimal set of parameter values. This is called the local maxima problem. As an example, in Fig. 3 note that although the starting parameter value at point a2 will lead to the optimal point B, called the global maximum, the starting parameter value at point a1 will lead to point A, which is a sub-optimal solution. Similarly, the starting parameter value at a3 will lead to another sub-optimal solution at point C.

Unfortunately, there exists no general solution to the local maxima problem. Instead, a variety of techniques have been developed in an attempt to avoid the problem, though there is no guarantee of their effectiveness. For example, one may choose different starting values over multiple runs of the iteration procedure and then examine the results to see whether the same solution is obtained repeatedly. When that happens, one can conclude with some confidence that a global maximum has been found.²

Fig. 3. A schematic plot of the log-likelihood function for a fictitious one-parameter model. Point B is the global maximum whereas points A and C are two local maxima. The series of arrows depicts an iterative optimization process.

² A stochastic optimization algorithm known as simulated annealing (Kirkpatrick, Gelatt, & Vecchi, 1983) can overcome the local maxima problem, at least in theory, though the algorithm may not be a feasible option in practice as it may take an unrealistically long time to find the solution.
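The local maxima problem and the multiple-starting-values remedy from Section 3.2 can be reproduced in a small sketch. The three-peaked `surface` function is made up to loosely imitate Fig. 3 and is not the function plotted in the paper. The starting values `0.5`, `2.6`, and `5.6` stand in for a1, a2, and a3, and the simple hill climber is an assumed local search, not the author's method.

```python
import math

def surface(w):
    """Made-up objective with three peaks, loosely imitating Fig. 3:
    A near w = 1 (local), B near w = 3 (global), C near w = 5 (local)."""
    peaks = [(1.0, 1.0), (3.0, 2.0), (5.0, 1.5)]  # (location, height)
    return sum(h * math.exp(-(w - c) ** 2 / 0.32) for c, h in peaks)

def hill_climb(f, w0, step=0.1, tol=1e-6, max_iter=1000):
    """Local search: accept w +/- step only if it raises f, else halve step."""
    w, n_iter = w0, 0
    while n_iter < max_iter and step >= tol:
        n_iter += 1
        best = max((w + step, w - step), key=f)
        if f(best) > f(w):
            w = best
        else:
            step /= 2.0
    return w

def multi_start(f, starts):
    """Run the local search from every starting value and keep the best."""
    solutions = [hill_climb(f, w0) for w0 in starts]
    return max(solutions, key=f), solutions

starts = [0.5, 2.6, 5.6]  # hypothetical stand-ins for a1, a2, a3
best, solutions = multi_start(surface, starts)
for w0, w_end in zip(starts, solutions):
    print(f"start {w0:.1f} -> converged near {w_end:.3f}")
print(f"best of all runs: w = {best:.3f}")
```

Each run climbs only the peak whose slope it starts on, so the runs from the first and third starting values stop at the lower peaks. Comparing the end points across runs exposes the disagreement. Keeping the highest one recovers the peak that plays the role of B, although, as the text notes, nothing guarantees that a higher peak does not exist outside the starting values tried.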