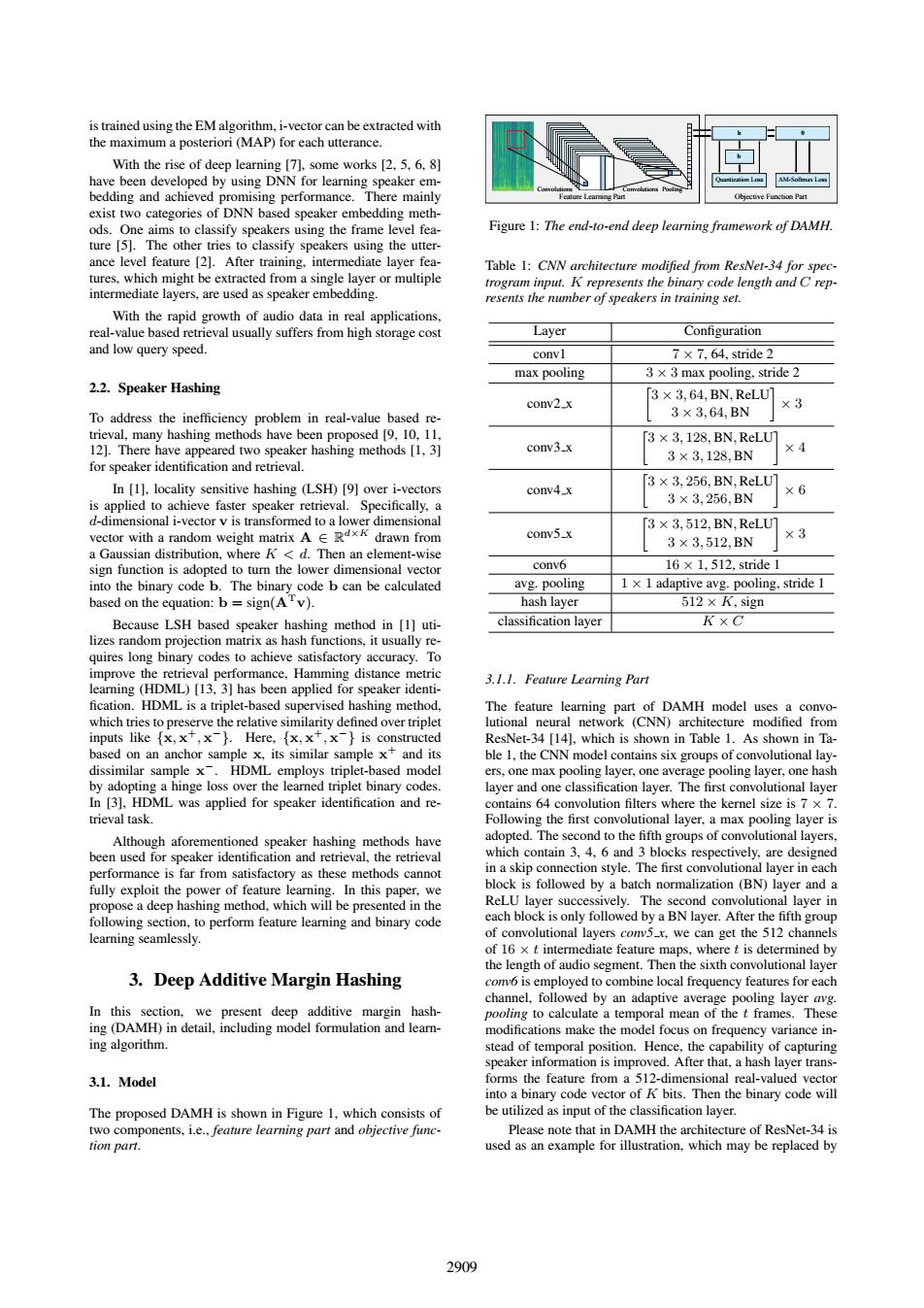

is trained using the EM algorithm, the i-vector can be extracted by maximum a posteriori (MAP) estimation for each utterance. With the rise of deep learning [7], several works [2, 5, 6, 8] have used DNNs to learn speaker embeddings and have achieved promising performance. There are two main categories of DNN-based speaker embedding methods. One classifies speakers using frame-level features [5]; the other classifies speakers using utterance-level features [2]. After training, intermediate-layer features, extracted from either a single layer or multiple intermediate layers, are used as the speaker embedding.

With the rapid growth of audio data in real applications, real-valued retrieval usually suffers from high storage cost and low query speed.

2.2. Speaker Hashing

To address the inefficiency of real-valued retrieval, many hashing methods have been proposed [9, 10, 11, 12]. Two speaker hashing methods [1, 3] have been proposed for speaker identification and retrieval.

In [1], locality sensitive hashing (LSH) [9] over i-vectors is applied to achieve faster speaker retrieval. Specifically, a $d$-dimensional i-vector $v$ is transformed into a lower-dimensional vector with a random weight matrix $A \in \mathbb{R}^{d \times K}$ drawn from a Gaussian distribution, where $K < d$. An element-wise sign function then turns the lower-dimensional vector into the binary code $b$, which is computed as $b = \mathrm{sign}(A^T v)$.

Because the LSH-based speaker hashing method in [1] uses a random projection matrix as its hash functions, it usually requires long binary codes to achieve satisfactory accuracy. To improve retrieval performance, Hamming distance metric learning (HDML) [13, 3] has been applied to speaker identification.
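The random-projection hashing of [1], $b = \mathrm{sign}(A^T v)$, can be illustrated with a minimal pure-Python sketch. This is not the implementation used in [1]: the function names (`make_projection`, `lsh_code`, `hamming`), the use of a standard Gaussian, the fixed seed, and the convention of mapping a zero projection to +1 are all assumptions made for illustration.

```python
import random


def make_projection(d, k, seed=0):
    """Draw a d x k matrix A with i.i.d. standard Gaussian entries (assumed)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(k)] for _ in range(d)]


def lsh_code(A, v):
    """Compute b = sign(A^T v) as a list of +1/-1 values, one per column of A."""
    d, k = len(A), len(A[0])
    if len(v) != d:
        raise ValueError("v must have the same length as the rows of A")
    code = []
    for j in range(k):
        # j-th entry of A^T v: inner product of column j of A with v.
        proj = sum(A[i][j] * v[i] for i in range(d))
        # Assumption: a projection of exactly zero is mapped to +1.
        code.append(1 if proj >= 0 else -1)
    return code


def hamming(b1, b2):
    """Number of positions at which two binary codes differ."""
    return sum(x != y for x, y in zip(b1, b2))


if __name__ == "__main__":
    d, k = 8, 4  # toy sizes with K < d; real i-vectors are much longer
    A = make_projection(d, k, seed=1)
    v = [0.5, -1.0, 0.25, 2.0, -0.75, 1.5, -0.1, 0.3]
    print(lsh_code(A, v))
```

Because the code depends only on the sign of each projection, rescaling $v$ by a positive constant leaves $b$ unchanged, while negating $v$ flips every bit. Retrieval then compares codes by Hamming distance rather than by real-valued similarity.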
HDML is a triplet-based supervised hashing method that tries to preserve the relative similarity defined over triplet inputs $\{x, x^+, x^-\}$, where each triplet consists of an anchor sample $x$, a similar sample $x^+$ and a dissimilar sample $x^-$. HDML trains a triplet-based model by applying a hinge loss to the learned binary codes of each triplet. In [3], HDML was applied to the speaker identification and retrieval task.

Although the aforementioned speaker hashing methods have been used for speaker identification and retrieval, their retrieval performance is far from satisfactory because they cannot fully exploit the power of feature learning. In this paper, we propose a deep hashing method, presented in the following section, that performs feature learning and binary code learning seamlessly.

3. Deep Additive Margin Hashing

In this section, we present deep additive margin hashing (DAMH) in detail, including the model formulation and the learning algorithm.

3.1. Model

The proposed DAMH, shown in Figure 1, consists of two components: the feature learning part and the objective function part.

Figure 1: The end-to-end deep learning framework of DAMH. (Diagram labels: Feature Learning Part, with convolution and pooling layers; Objective Function Part, with AM-Softmax loss and quantization loss.)

Table 1: CNN architecture modified from ResNet-34 for spectrogram input. K represents the binary code length and C represents the number of speakers in the training set.

| Layer | Configuration |
| --- | --- |
| conv1 | 7 × 7, 64, stride 2 |
| max pooling | 3 × 3 max pooling, stride 2 |
| conv2_x | [3 × 3, 64, BN, ReLU; 3 × 3, 64, BN] × 3 |
| conv3_x | [3 × 3, 128, BN, ReLU; 3 × 3, 128, BN] × 4 |
| conv4_x | [3 × 3, 256, BN, ReLU; 3 × 3, 256, BN] × 6 |
| conv5_x | [3 × 3, 512, BN, ReLU; 3 × 3, 512, BN] × 3 |
| conv6 | 16 × 1, 512, stride 1 |
| avg. pooling | 1 × 1 adaptive avg. pooling, stride 1 |
| hash layer | 512 × K, sign |
| classification layer | K × C |

3.1.1. Feature Learning Part

The feature learning part of the DAMH model uses a convolutional neural network (CNN) architecture modified from ResNet-34 [14], which is shown in Table 1. The CNN model contains six groups of convolutional layers, one max pooling layer, one average pooling layer, one hash layer and one classification layer. The first convolutional layer contains 64 convolution filters with a kernel size of 7 × 7 and is followed by a max pooling layer. The second to fifth groups of convolutional layers, which contain 3, 4, 6 and 3 blocks respectively, are designed in a skip-connection style. In each block, the first convolutional layer is followed by a batch normalization (BN) layer and then a ReLU layer, while the second convolutional layer is followed only by a BN layer.

After the fifth group of convolutional layers, conv5_x, we obtain 512 channels of $16 \times t$ intermediate feature maps, where $t$ is determined by the length of the audio segment. The sixth convolutional layer, conv6, then combines the local frequency features within each channel, and an adaptive average pooling layer (avg. pooling) computes the temporal mean over the $t$ frames. These modifications make the model focus on frequency variance instead of temporal position, which improves its capability to capture speaker information. After that, a hash layer transforms the 512-dimensional real-valued feature vector into a binary code vector of K bits. The binary code is then used as the input of the classification layer.

Please note that in DAMH the architecture of ResNet-34 is used as an example for illustration, which may be replaced by