正在加载图片...

and Ie is the conditional expected information matrir defined as Ie(0:vb.)=Eg I(0:y)yobs EM Variants i.Generalized EM Algorithm (GEM) Recall that in the M-step we maximize Q(,0()i.e.,find a+)s.t. Q(0+:0o)≥Q(0:0) for all 0.In the generalized version of the EM Algorithm we will require only that a(+1)be chosen such that Q(0+;θ9))≥Q(09;8o holds,i.e.,)is chosen to increase Q(()over its value at (at each iteration t.This is sufficient to ensure that (0+;y≥(e9;y)》 at each iteration,so GEM sequence of iterates also converges to a local maximum. ii.GEM Algorithm based on a single N-R step We use GEM-type algorithms when a global maximizer of Q(0;0())does not exist in closed form.In this case,possibly an iterative method is required to accomplish the M-step,which might prove to be a computationally infeasible procedure.Since it is not essential to actually maximize Q in a GEM,but only increase the likelihood,we may replace the M-step with a step that achieves that.One possibilty of such a step is a single iteration of the Newton-Raphson(N-R)algorithm,which we know is a descent method. Let θt+)=g+a6⊙where6= a2Q0:091-1 8Q(0:0 a0a8]0=09 ]0=09 i.e.,6()is the N-R direction at (and 0<a()<1.If a()=1 this will define an exact N-R step.Here we will choose a()so that this defines a GEM sequence.This will be achieved if a()<2 as too. 14and Ic is the conditional expected information matrix defined as Ic(θ; yobs) = Eθ h I(θ; y)

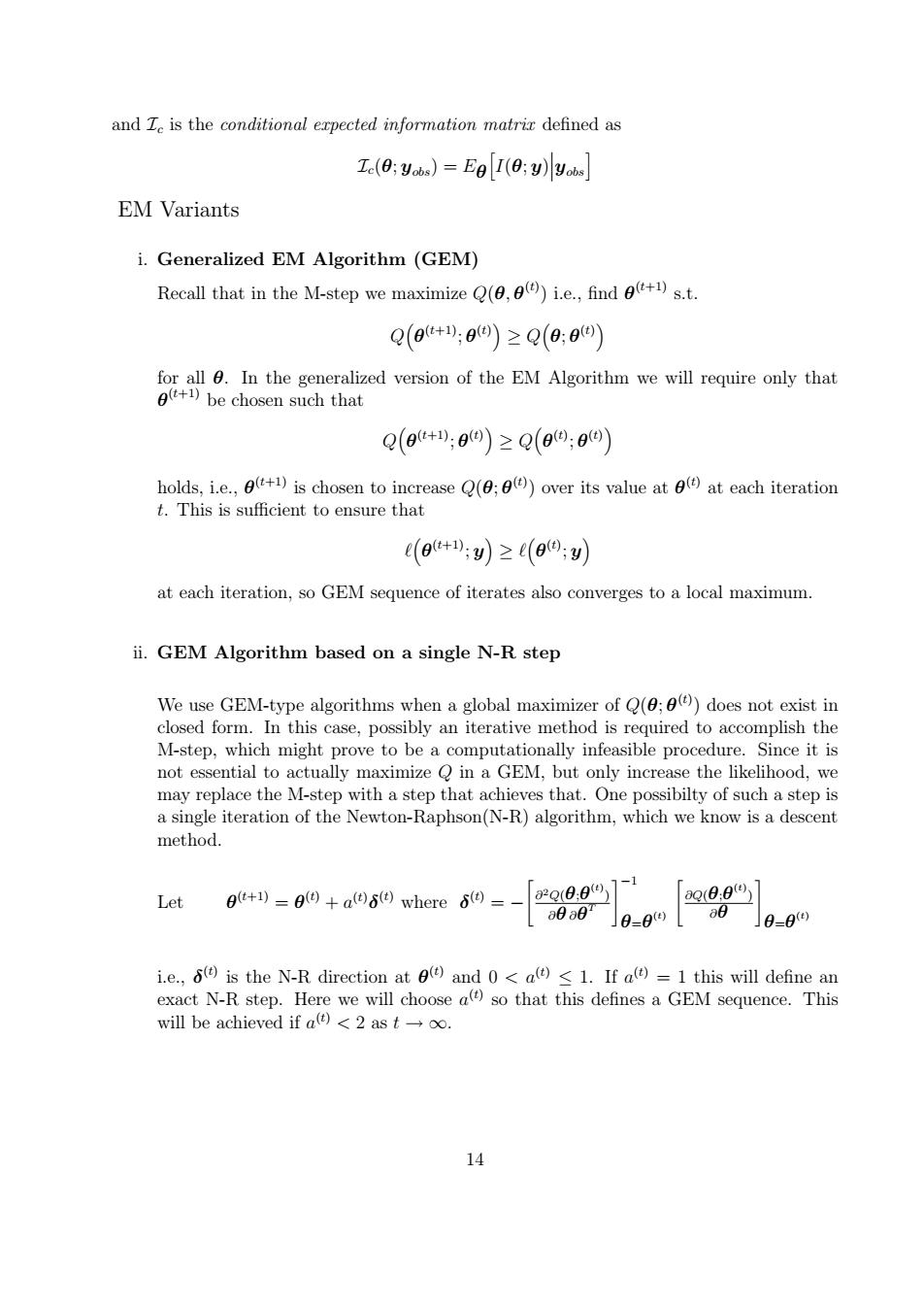

yobsi EM Variants i. Generalized EM Algorithm (GEM) Recall that in the M-step we maximize Q(θ, θ (t) ) i.e., find θ (t+1) s.t. Q θ (t+1); θ (t) ≥ Q θ; θ (t) for all θ. In the generalized version of the EM Algorithm we will require only that θ (t+1) be chosen such that Q θ (t+1); θ (t) ≥ Q θ (t) ; θ (t) holds, i.e., θ (t+1) is chosen to increase Q(θ; θ (t) ) over its value at θ (t) at each iteration t. This is sufficient to ensure that ` θ (t+1); y ≥ ` θ (t) ; y at each iteration, so GEM sequence of iterates also converges to a local maximum. ii. GEM Algorithm based on a single N-R step We use GEM-type algorithms when a global maximizer of Q(θ; θ (t) ) does not exist in closed form. In this case, possibly an iterative method is required to accomplish the M-step, which might prove to be a computationally infeasible procedure. Since it is not essential to actually maximize Q in a GEM, but only increase the likelihood, we may replace the M-step with a step that achieves that. One possibilty of such a step is a single iteration of the Newton-Raphson(N-R) algorithm, which we know is a descent method. Let θ (t+1) = θ (t) + a (t)δ (t) where δ (t) = − " ∂ 2Q(θ;θ (t) ) ∂θ ∂θ T #−1 θ=θ (t) " ∂Q(θ;θ (t) ) ∂θ # θ=θ (t) i.e., δ (t) is the N-R direction at θ (t) and 0 < a (t) ≤ 1. If a (t) = 1 this will define an exact N-R step. Here we will choose a (t) so that this defines a GEM sequence. This will be achieved if a (t) < 2 as t → ∞. 14