正在加载图片...

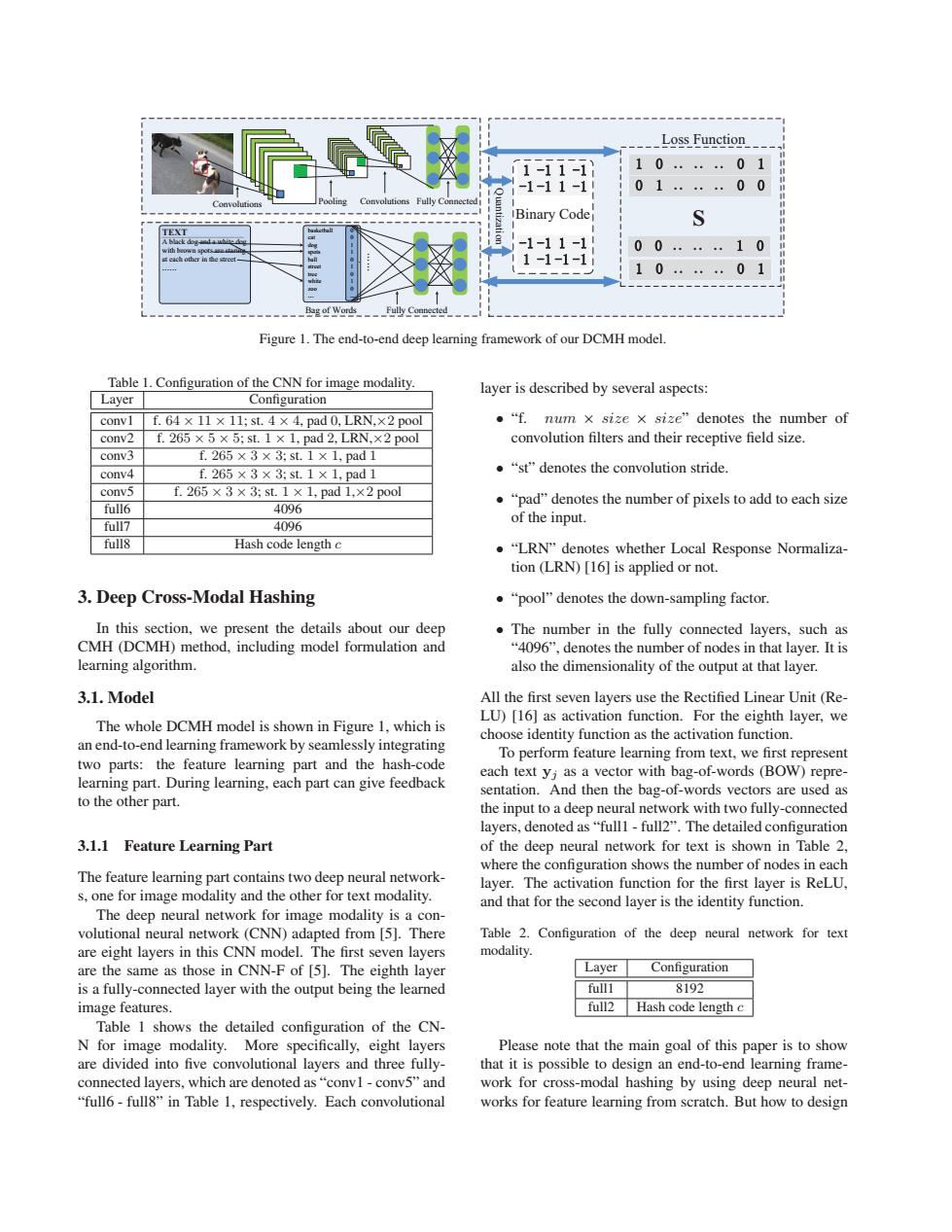

Loss Function 1-11-1 10.... 01 -1-11-1 01……… 00 Binary Codej S -1-11-1 00...10 1-1-1-1 0.…….01 Bag of Words Figure 1.The end-to-end deep learning framework of our DCMH model. Table 1.Configuration of the CNN for image modality. layer is described by several aspects: Layer Configuration conv1f.64×11×11;st.4×4,pad0,LRN,×2pool ●“f.num×size×size”denotes the number of conv2 f.265×5×5;st.1×1,pad2,LRN,×2pool convolution filters and their receptive field size. conv3 f.265×3×3:st.1×1,pad1 conv4 f.265×3×3:st1×1,pad1 ●“st"denotes the convolution stride. conv5 f.265×3×3:st.1×1,pad1,×2p0ol ●“pad”denotes the number of pixels to add to each size full6 4096 of the input. full7 4096 full8 Hash code length c ."LRN"denotes whether Local Response Normaliza- tion (LRN)[16]is applied or not. 3.Deep Cross-Modal Hashing ●“pool”'denotes the down-sampling factor. In this section,we present the details about our deep The number in the fully connected layers.such as CMH(DCMH)method,including model formulation and "4096",denotes the number of nodes in that layer.It is learning algorithm. also the dimensionality of the output at that layer. 3.1.Model All the first seven layers use the Rectified Linear Unit(Re- The whole DCMH model is shown in Figure 1,which is LU)[16]as activation function.For the eighth layer,we choose identity function as the activation function. an end-to-end learning framework by seamlessly integrating To perform feature learning from text,we first represent two parts:the feature learning part and the hash-code each text y;as a vector with bag-of-words (BOW)repre- learning part.During learning,each part can give feedback sentation.And then the bag-of-words vectors are used as to the other part. the input to a deep neural network with two fully-connected layers,denoted as"fulll-full2".The detailed configuration 3.1.1 Feature Learning Part of the deep neural network for text is shown in Table 2, where the configuration shows the number of nodes in each The feature learning part contains two deep neural network- layer.The activation function for the first layer is ReLU, s,one for image modality and the other for text modality. and that for the second layer is the identity function. The deep neural network for image modality is a con- volutional neural network (CNN)adapted from [5].There Table 2.Configuration of the deep neural network for text are eight layers in this CNN model.The first seven layers modality. are the same as those in CNN-F of [5].The eighth layer Layer Configuration is a fully-connected layer with the output being the learned fulll 8192 image features. full2 Hash code length c Table 1 shows the detailed configuration of the CN- N for image modality.More specifically,eight layers Please note that the main goal of this paper is to show are divided into five convolutional layers and three fully- that it is possible to design an end-to-end learning frame- connected layers,which are denoted as"convI-conv5"and work for cross-modal hashing by using deep neural net- "full6-full8"in Table 1,respectively.Each convolutional works for feature learning from scratch.But how to design Binary Code Loss Function S Convolutions Pooling Convolutions Fully Connected Quantization TEXT A black dog and a white dog with brown spots are staring at each other in the street …… Fully Connected basketball cat dog spots ball street tree white zoo … 0 0 1 1 0 1 0 1 0 ... Bag of Words 噯 噯 . Figure 1. The end-to-end deep learning framework of our DCMH model. Table 1. Configuration of the CNN for image modality. Layer Configuration conv1 f. 64 × 11 × 11; st. 4 × 4, pad 0, LRN,×2 pool conv2 f. 265 × 5 × 5; st. 1 × 1, pad 2, LRN,×2 pool conv3 f. 265 × 3 × 3; st. 1 × 1, pad 1 conv4 f. 265 × 3 × 3; st. 1 × 1, pad 1 conv5 f. 265 × 3 × 3; st. 1 × 1, pad 1,×2 pool full6 4096 full7 4096 full8 Hash code length 𝑐 3. Deep Cross-Modal Hashing In this section, we present the details about our deep CMH (DCMH) method, including model formulation and learning algorithm. 3.1. Model The whole DCMH model is shown in Figure 1, which is an end-to-end learning framework by seamlessly integrating two parts: the feature learning part and the hash-code learning part. During learning, each part can give feedback to the other part. 3.1.1 Feature Learning Part The feature learning part contains two deep neural networks, one for image modality and the other for text modality. The deep neural network for image modality is a convolutional neural network (CNN) adapted from [5]. There are eight layers in this CNN model. The first seven layers are the same as those in CNN-F of [5]. The eighth layer is a fully-connected layer with the output being the learned image features. Table 1 shows the detailed configuration of the CNN for image modality. More specifically, eight layers are divided into five convolutional layers and three fullyconnected layers, which are denoted as “conv1 - conv5” and “full6 - full8” in Table 1, respectively. Each convolutional layer is described by several aspects: ∙ “f. 𝑛𝑢𝑚 × 𝑠𝑖𝑧𝑒 × 𝑠𝑖𝑧𝑒” denotes the number of convolution filters and their receptive field size. ∙ “st” denotes the convolution stride. ∙ “pad” denotes the number of pixels to add to each size of the input. ∙ “LRN” denotes whether Local Response Normalization (LRN) [16] is applied or not. ∙ “pool” denotes the down-sampling factor. ∙ The number in the fully connected layers, such as “4096”, denotes the number of nodes in that layer. It is also the dimensionality of the output at that layer. All the first seven layers use the Rectified Linear Unit (ReLU) [16] as activation function. For the eighth layer, we choose identity function as the activation function. To perform feature learning from text, we first represent each text y𝑗 as a vector with bag-of-words (BOW) representation. And then the bag-of-words vectors are used as the input to a deep neural network with two fully-connected layers, denoted as “full1 - full2”. The detailed configuration of the deep neural network for text is shown in Table 2, where the configuration shows the number of nodes in each layer. The activation function for the first layer is ReLU, and that for the second layer is the identity function. Table 2. Configuration of the deep neural network for text modality. Layer Configuration full1 8192 full2 Hash code length 𝑐 Please note that the main goal of this paper is to show that it is possible to design an end-to-end learning framework for cross-modal hashing by using deep neural networks for feature learning from scratch. But how to design