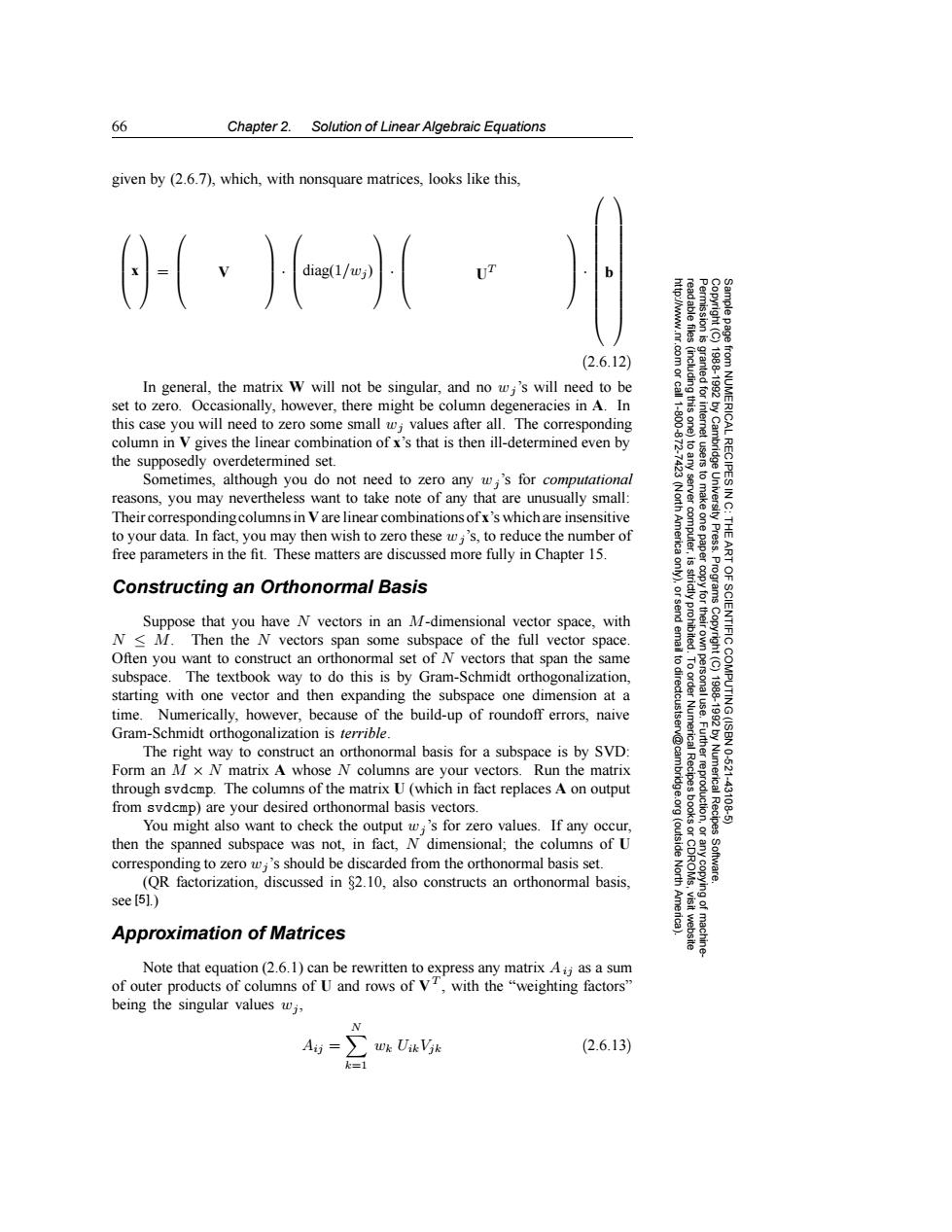

66 Chapter 2. Solution of Linear Algebraic Equations

Sample page from NUMERICAL RECIPES IN C: THE ART OF SCIENTIFIC COMPUTING (ISBN 0-521-43108-5). Copyright (C) 1988-1992 by Cambridge University Press. Programs Copyright (C) 1988-1992 by Numerical Recipes Software. Permission is granted for internet users to make one paper copy for their own personal use. Further reproduction, or any copying of machine-readable files (including this one) to any server computer, is strictly prohibited. To order Numerical Recipes books or CDROMs, visit website http://www.nr.com or call 1-800-872-7423 (North America only), or send email to directcustserv@cambridge.org (outside North America).

given by (2.6.7), which, with nonsquare matrices, looks like this,

$$\mathbf{x} = \mathbf{V} \cdot \operatorname{diag}(1/w_j) \cdot \mathbf{U}^T \cdot \mathbf{b} \tag{2.6.12}$$

In general, the matrix W will not be singular, and no $w_j$'s will need to be set to zero. Occasionally, however, there might be column degeneracies in A. In this case you will need to zero some small $w_j$ values after all. The corresponding column in V gives the linear combination of x's that is then ill-determined even by the supposedly overdetermined set.

Sometimes, although you do not need to zero any $w_j$'s for computational reasons, you may nevertheless want to take note of any that are unusually small: Their corresponding columns in V are linear combinations of x's which are insensitive to your data. In fact, you may then wish to zero these $w_j$'s, to reduce the number of free parameters in the fit. These matters are discussed more fully in Chapter 15.

Constructing an Orthonormal Basis

Suppose that you have N vectors in an M-dimensional vector space, with N ≤ M. Then the N vectors span some subspace of the full vector space. Often you want to construct an orthonormal set of N vectors that span the same subspace. The textbook way to do this is by Gram-Schmidt orthogonalization, starting with one vector and then expanding the subspace one dimension at a time. Numerically, however, because of the build-up of roundoff errors, naive Gram-Schmidt orthogonalization is terrible.

The right way to construct an orthonormal basis for a subspace is by SVD: Form an M × N matrix A whose N columns are your vectors. Run the matrix through svdcmp. The columns of the matrix U (which in fact replaces A on output from svdcmp) are your desired orthonormal basis vectors. You might also want to check the output $w_j$'s for zero values. If any occur, then the spanned subspace was not, in fact, N dimensional; the columns of U corresponding to zero $w_j$'s should be discarded from the orthonormal basis set.

(QR factorization, discussed in §2.10, also constructs an orthonormal basis, see [5].)

Approximation of Matrices

Note that equation (2.6.1) can be rewritten to express any matrix $A_{ij}$ as a sum of outer products of columns of U and rows of $\mathbf{V}^T$, with the "weighting factors" being the singular values $w_j$,

$$A_{ij} = \sum_{k=1}^{N} w_k U_{ik} V_{jk} \tag{2.6.13}$$
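As a rough illustration of equations (2.6.12) and (2.6.13), the sketch below applies both formulas to a small set of SVD factors built by hand. It does not call svdcmp: the factors U, w, and V are simply assumed to be available, and the function names `reconstruct` and `svd_solve`, the `tol` parameter, and the 0-based indexing are choices made for this example only, not part of the book's routines.

```python
import math

def reconstruct(U, w, V):
    """Rebuild A from its SVD factors: A[i][j] = sum_k w[k]*U[i][k]*V[j][k] (2.6.13)."""
    M, N = len(U), len(w)
    return [[sum(w[k] * U[i][k] * V[j][k] for k in range(N))
             for j in range(N)]
            for i in range(M)]

def svd_solve(U, w, V, b, tol=0.0):
    """Evaluate x = V . diag(1/w_j) . U^T . b (2.6.12).

    Any w_j <= tol is treated as zero, i.e. its 1/w_j is replaced by 0,
    which is the "zeroing" of small singular values described in the text.
    The default tol is an assumption; choosing it is problem dependent.
    """
    M, N = len(U), len(w)
    # tmp[j] = (U^T b)_j / w_j, or 0 for a singular value treated as zero
    tmp = [sum(U[i][j] * b[i] for i in range(M)) / w[j] if w[j] > tol else 0.0
           for j in range(N)]
    # x = V . tmp
    return [sum(V[i][j] * tmp[j] for j in range(N)) for i in range(N)]

# Hand-built factors for a 3 x 2 matrix (M = 3, N = 2): U has orthonormal
# columns, V is a 45-degree rotation, and the singular values are 3 and 2.
c = 1.0 / math.sqrt(2.0)
U = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
w = [3.0, 2.0]
V = [[c, -c], [c, c]]

A = reconstruct(U, w, V)

# Overdetermined system A x = b. The third component of b lies outside the
# column space of A, so it does not affect the least-squares solution.
x = svd_solve(U, w, V, [9.0 * c, 2.0 * c, 5.0])
```

Here `b` was chosen so that its part inside the column space of A equals A applied to (1, 2), so `x` should come out close to those values. Calling `svd_solve` with one entry of `w` set to zero shows the effect of discarding an ill-determined combination of unknowns.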