
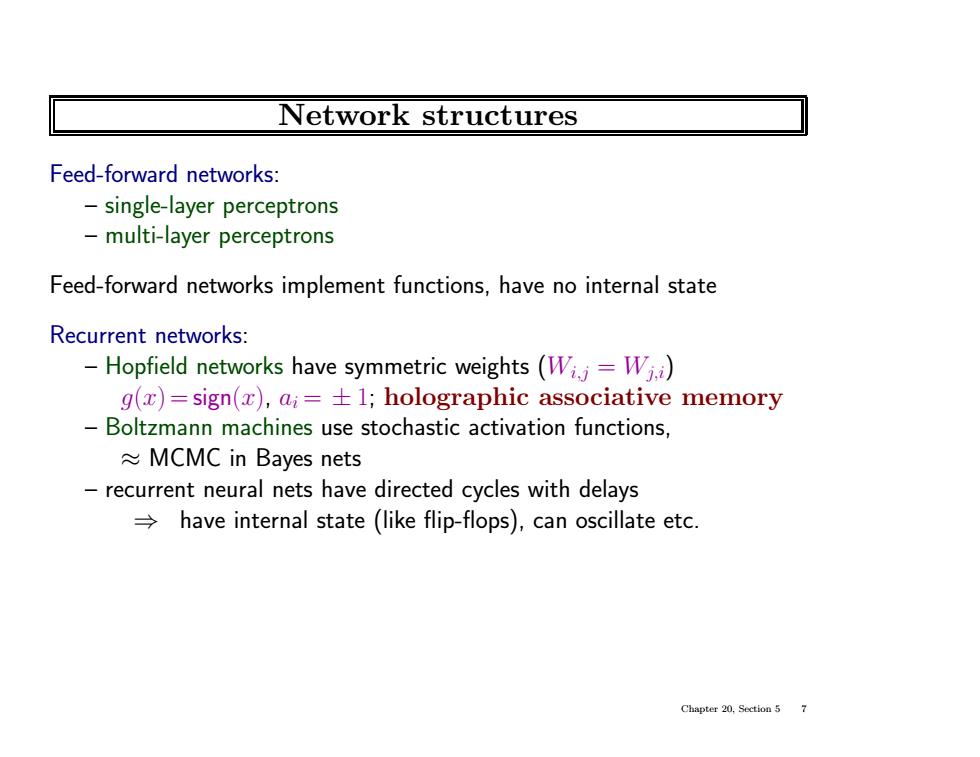

Network structures

Feed-forward networks:
  – single-layer perceptrons
  – multi-layer perceptrons

Feed-forward networks implement functions, have no internal state

Recurrent networks:
  – Hopfield networks have symmetric weights (Wi,j = Wj,i)
    g(x) = sign(x), ai = ±1; holographic associative memory
  – Boltzmann machines use stochastic activation functions,
    ≈ MCMC in Bayes nets
  – recurrent neural nets have directed cycles with delays
    ⇒ have internal state (like flip-flops), can oscillate etc.

Chapter 20, Section 5 (slide 7)
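To make the Hopfield bullet concrete, here is a minimal sketch of an associative memory with symmetric weights, g(x) = sign(x), and activations of ±1. The slide does not say how the weights are set or in what order units are updated. The Hebbian outer-product rule, the asynchronous update order, the tie-break sign(0) = +1, and the names `train_hebbian` and `recall` are all assumptions made for illustration.

```python
def sign(x):
    # g(x) = sign(x); mapping x == 0 to +1 is an assumption of this sketch.
    return 1 if x >= 0 else -1


def train_hebbian(patterns):
    """Build a symmetric weight matrix (W[i][j] == W[j][i]) with a zero diagonal.

    Uses the Hebbian outer-product rule, which is assumed here and is
    not stated on the slide.
    """
    n = len(patterns[0])
    W = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    W[i][j] += p[i] * p[j] / n
    return W


def recall(W, state, max_sweeps=10):
    """Update units one at a time until a full sweep changes nothing."""
    a = list(state)
    n = len(a)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            new = sign(sum(W[i][j] * a[j] for j in range(n)))
            if new != a[i]:
                a[i] = new
                changed = True
        if not changed:
            break
    return a


# Store one pattern of +1/-1 activations, then recall it from a corrupted copy.
stored = [1, -1, 1, -1, 1, -1, 1, -1]
W = train_hebbian([stored])

noisy = list(stored)
noisy[0] = -noisy[0]  # flip one unit

recovered = recall(W, noisy)
print(recovered == stored)
```

Unlike a feed-forward network, the result depends on the state the units start in: the network settles from the noisy input into the stored pattern, which is the sense in which it acts as an associative memory.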